In much of the machine learning literature, the systems being modelled are instantaneous: inputs map to outputs, with no notion of impact from past values.

In some systems, inputs from previous time-steps are relevant, e.g. because the system has internal states/storage. For example, in a hydrological model, you have inputs (rain, sun, wind) and outputs (streamflow), but you also have surface and soil storage at various depths. In a physically based model, you might model those states as discrete buckets, with inflow, outflow, evaporation, leakage, etc. all according to physical laws.
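To make the bucket idea concrete, here is a minimal sketch of a single storage bucket. The function name `simulate_bucket`, the parameters `capacity`, `k_out` and `k_leak`, and their default values are all made up for illustration, and the linear outflow/leakage rules are a simplifying assumption rather than any particular hydrological model.

```python
def simulate_bucket(rain, pet, capacity=100.0, k_out=0.05, k_leak=0.01, storage=0.0):
    """Toy single-bucket model (all parameters are illustrative assumptions).

    rain, pet: per-time-step rainfall and potential evaporation (same units, e.g. mm).
    Returns (streamflow per step, storage at the end of each step).
    """
    flows, stores = [], []
    for p, e in zip(rain, pet):
        storage += p                             # inflow from rain
        overflow = max(storage - capacity, 0.0)  # anything above capacity spills
        storage -= overflow
        evap = min(e, storage)                   # cannot evaporate more than is stored
        storage -= evap
        outflow = k_out * storage                # linear drainage to the stream
        leak = k_leak * storage                  # linear loss to deeper storage
        storage -= outflow + leak
        flows.append(outflow + overflow)
        stores.append(storage)
    return flows, stores


# One burst of rain followed by dry steps: the stream keeps flowing
# after the rain stops, because the bucket carries state between steps.
rain = [10.0] + [0.0] * 5
pet = [0.0] * 6
flows, stores = simulate_bucket(rain, pet)
```

The output at each step depends on `storage`, which in turn depends on all earlier inputs. That carried state is exactly what an instantaneous model has no access to.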

If you want to model streamflow in a purely empirical sense, e.g. with a neural network, you could just create an instantaneous model, and you'd get OK first-approximation results (and actually, in land surface modelling, you could easily do better than a physically based model...). But you would be missing a lot of relevant information: streamflow is inherently lagged relative to rainfall, for instance.

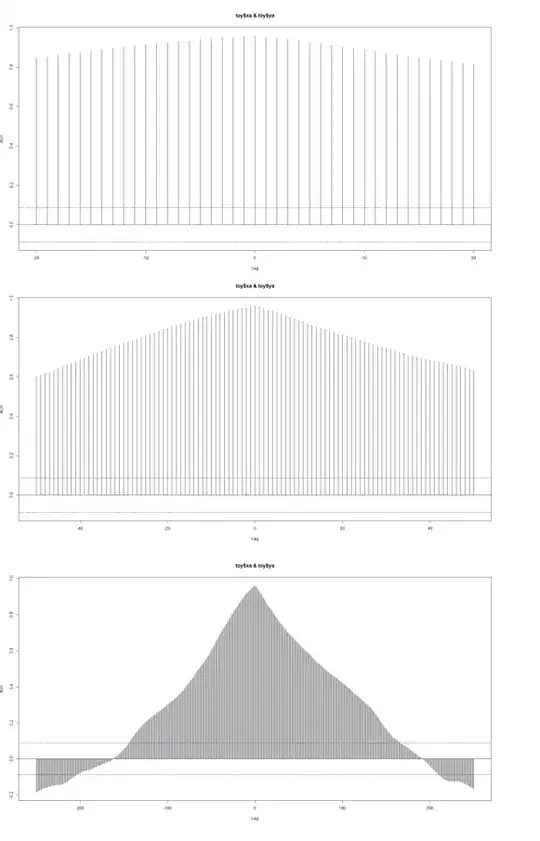

One way to get around this would be to include lagged variants of the input features, e.g. if your data is hourly, include rain over the last 2 days and rain over the last month. These inputs do improve model results in my experience, but choosing the appropriate lags is basically a matter of experience and trial and error. There is a huge array of possible lagged variables to include (straight lagged data, lagged averages, exponential moving windows, etc.; multiple variables, with interactions, and often with high covariances). I guess a grid search for the best model is theoretically possible, but it would be prohibitively expensive.
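For concreteness, the kinds of lagged features I mean can be sketched as below, for hourly data. The helper names (`lagged`, `trailing_sum`, `exp_memory`), the synthetic rain series, the specific lag and half-life values, and treating a month as 30 days are all assumptions picked for illustration, not recommendations.

```python
import numpy as np


def lagged(x, lag):
    """x shifted back by `lag` steps; the first `lag` entries are NaN."""
    out = np.full(len(x), np.nan)
    if lag < len(x):
        out[lag:] = x[:len(x) - lag]
    return out


def trailing_sum(x, window):
    """Sum over the current step and the previous window-1 steps; NaN until the window is full."""
    csum = np.concatenate(([0.0], np.cumsum(x)))
    out = np.full(len(x), np.nan)
    out[window - 1:] = csum[window:] - csum[:-window]
    return out


def exp_memory(x, halflife):
    """Exponentially weighted running mean: s[t] = a*s[t-1] + (1-a)*x[t], started from 0."""
    a = 0.5 ** (1.0 / halflife)
    out = np.empty(len(x))
    s = 0.0
    for t, v in enumerate(x):
        s = a * s + (1.0 - a) * v
        out[t] = s
    return out


# Synthetic hourly rain for 60 days (made-up data, only to exercise the helpers).
rng = np.random.default_rng(0)
rain = rng.gamma(shape=0.3, scale=2.0, size=24 * 60)

features = np.column_stack([
    rain,                          # instantaneous rain
    lagged(rain, 6),               # rain 6 hours ago
    trailing_sum(rain, 48),        # rain over the last 2 days
    trailing_sum(rain, 24 * 30),   # rain over the last ~month (30 days assumed)
    exp_memory(rain, halflife=24), # exponentially decaying memory of rain
])
```

Each column adds at least one free choice (lag, window length, half-life), and the same choices repeat for every input variable, which is where the combinatorial blow-up comes from. Note that `exp_memory` is itself a one-variable recursion over a carried state, so it already behaves a bit like a single linear storage pool.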

I'm wondering a) if there is a reasonable, cheapish, and relatively objective way to select the best lags to include from the almost infinite choices, or b) if there is a better way of representing storage pools in a purely empirical machine-learning model.