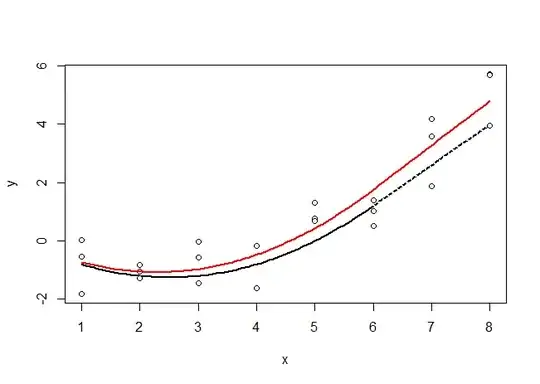

I am trying to combine two linear models (one linear-quadratic and one linear) into a single unified model by means of piecewise regression. The tail of the left-hand (linear-quadratic) part should continue as the asymptote of the right-hand (linear) part. The piecewise function is

$$y = \begin{cases} ax + bx^2, & x \lt x_t \\ cx + d, & x \ge x_t \end{cases}$$
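A minimal R sketch of this general form, using a hypothetical helper `piecewise_lq()` (not part of the question), makes the role of $c$ and $d$ concrete: nothing ties the two pieces together, so they generally jump at $x_t$ unless $d = ax_t + bx_t^2 - cx_t$.

```r
# Hypothetical helper: the general piecewise model, with no continuity
# imposed at the breakpoint xt.
piecewise_lq <- function(x, a, b, c, d, xt) {
  ifelse(x < xt, a * x + b * x^2, c * x + d)
}

# Hypothetical helper: size of the jump at xt (right piece minus left piece);
# it is zero only when d == a*xt + b*xt^2 - c*xt
jump_at_xt <- function(a, b, c, d, xt) {
  (c * xt + d) - (a * xt + b * xt^2)
}

piecewise_lq(c(1, 3), a = 1, b = 1, c = 2, d = 0, xt = 2)  # returns c(2, 6)
jump_at_xt(a = 1, b = 1, c = 2, d = 0, xt = 2)              # returns -2
```

Substituting $c = k$ and the zero-jump value of $d$ turns the right-hand piece into $ax_t + bx_t^2 + k(x - x_t)$.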

where $a$, $b$, $c$, $d$ and the breakpoint $x_t$ are the parameters to be determined. I want to compare this unified model with the linear-quadratic model fitted over the whole range of $x$, using adjusted $R^2$ as the measure of goodness of fit.
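For reference, the usual adjusted $R^2$ for $n$ observations and $p$ estimated terms besides the intercept is shown below (this assumes an intercept is fitted, as `lm()` does by default). Whether the estimated breakpoint $x_t$ should count toward $p$ is a modelling choice; counting it is the more conservative option.

$$\bar{R}^2 = 1 - \left(1 - R^2\right)\frac{n - 1}{n - p - 1}$$

Here $n = 21$; the linear-quadratic model has $p = 2$ ($a$, $b$), while the continuous piecewise model has $p = 3$ ($a$, $b$, $k$) if $x_t$ is treated as known and $p = 4$ if $x_t$ is also estimated.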

```
> y
 [1] 1.00000 0.59000 0.15000 0.07800 0.02000 0.00470 0.00190 1.00000 0.56000 0.13000 0.02500 0.00510 0.00160 0.00091 1.00000 0.61000 0.12000
[18] 0.02600 0.00670 0.00085 0.00040
> x
 [1] 0.00 5.53 12.92 16.61 20.30 23.07 24.92 0.00 5.53 12.92 16.61 20.30 23.07 24.92 0.00 5.53 12.92 16.61 20.30 23.07 24.92
```
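To reproduce the fits, the printed vectors can be re-entered in R; `x` is the same seven values repeated three times, which `rep()` expresses directly.

```r
# Three replicates of the same seven x values
x <- rep(c(0.00, 5.53, 12.92, 16.61, 20.30, 23.07, 24.92), times = 3)
y <- c(1.00000, 0.59000, 0.15000, 0.07800, 0.02000, 0.00470, 0.00190,
       1.00000, 0.56000, 0.13000, 0.02500, 0.00510, 0.00160, 0.00091,
       1.00000, 0.61000, 0.12000, 0.02600, 0.00670, 0.00085, 0.00040)
stopifnot(length(x) == length(y))  # 21 paired observations
```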

I want the first derivative to be continuous, and I want to estimate all the parameters, including the breakpoint. To make the function continuous at $x = x_t$, I rewrote the piecewise function as

$$y = \begin{cases} ax + bx^2, & x \lt x_t \\ ax_t + bx_t^2 + k(x - x_t), & x \ge x_t \end{cases}$$
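Note that this rewrite only makes $y$ itself continuous at $x_t$: $k$ is still free, so the slope of the linear part need not match the slope of the quadratic there, and nothing forces the two to be parallel. Matching the first derivatives at $x_t$ fixes $k$:

$$k = \frac{d}{dx}\left(ax + bx^2\right)\Big|_{x = x_t} = a + 2bx_t$$

Substituting this into the right-hand piece gives

$$ax_t + bx_t^2 + (a + 2bx_t)(x - x_t) = ax + b\left(2x_t x - x_t^2\right), \quad x \ge x_t$$

so for a given $x_t$ the model is linear in $a$ and $b$, with the single derived regressor $v = x^2 - \max(x - x_t, 0)^2$, which equals $x^2$ below $x_t$ and $2x_t x - x_t^2$ at or above it.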

where $k$, the slope of the linear part, is a constant to be estimated. My attempt, assuming $x_t$ has already been derived theoretically, is:

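```r
# xt: the breakpoint, assumed already derived
x1 <- pmin(x, xt)       # equals x below xt, held at xt above it
x2 <- pmax(x - xt, 0)   # (x - xt) above xt, 0 below; its coefficient is k
mod <- lm(y ~ x1 + I(x1^2) + x2)   # lm() also fits an intercept by default
```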
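A minimal sketch of the fit with the first-derivative condition $k = a + 2bx_t$ built in, assuming a known breakpoint. The value `xt <- 15` is purely hypothetical (substitute the theoretically derived $x_t$), and the intercept is kept to match the `lm()` call above.

```r
x <- rep(c(0.00, 5.53, 12.92, 16.61, 20.30, 23.07, 24.92), times = 3)
y <- c(1.00000, 0.59000, 0.15000, 0.07800, 0.02000, 0.00470, 0.00190,
       1.00000, 0.56000, 0.13000, 0.02500, 0.00510, 0.00160, 0.00091,
       1.00000, 0.61000, 0.12000, 0.02600, 0.00670, 0.00085, 0.00040)

# Hypothetical breakpoint, for illustration only: replace with the derived x_t
xt <- 15

# With k = a + 2*b*xt the model (plus intercept) is linear in a and b:
#   y = a*x + b*v, where v = x^2 below xt and v = 2*xt*x - xt^2 at or above it
v <- x^2 - pmax(x - xt, 0)^2
mod_c1 <- lm(y ~ x + v)

a <- coef(mod_c1)[["x"]]
b <- coef(mod_c1)[["v"]]
k <- a + 2 * b * xt   # slope of the linear part = tangent slope of the quadratic at xt
```

Because $k$ is no longer a separate coefficient, the linear part is, by construction, the tangent line of the quadratic at $x_t$.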

But the tail (asymptote) doesn't seem to be parallel to the linear part in the upper range...
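When $x_t$ also has to be estimated, one simple option (a swapped-in technique, not something from the question) is a grid search: fit the derivative-continuous model for each candidate $x_t$, keep the one with the smallest residual sum of squares, and then compare adjusted $R^2$ with the plain linear-quadratic fit. The grid limits and step below are assumptions, chosen so that both pieces contain data; `fit_at()` is a hypothetical helper.

```r
x <- rep(c(0.00, 5.53, 12.92, 16.61, 20.30, 23.07, 24.92), times = 3)
y <- c(1.00000, 0.59000, 0.15000, 0.07800, 0.02000, 0.00470, 0.00190,
       1.00000, 0.56000, 0.13000, 0.02500, 0.00510, 0.00160, 0.00091,
       1.00000, 0.61000, 0.12000, 0.02600, 0.00670, 0.00085, 0.00040)

# Hypothetical helper: derivative-continuous model for a given breakpoint xt
fit_at <- function(xt) {
  v <- x^2 - pmax(x - xt, 0)^2
  lm(y ~ x + v)
}

# Profile the residual sum of squares (deviance() of an lm) over candidate breakpoints
xt_grid <- seq(6, 24, by = 0.01)
rss <- vapply(xt_grid, function(xt) deviance(fit_at(xt)), numeric(1))
xt_hat <- xt_grid[which.min(rss)]
best <- fit_at(xt_hat)

# Plain linear-quadratic model over the whole range of x
lq <- lm(y ~ x + I(x^2))

# summary.lm() does not know xt was estimated, so recompute adjusted R^2 for
# the piecewise model with p = 3 (a, b and xt; intercept excluded)
n <- length(y)
adj_piecewise <- 1 - (1 - summary(best)$r.squared) * (n - 1) / (n - 3 - 1)
res <- c(lq = summary(lq)$adj.r.squared, piecewise = adj_piecewise)
res
```

A grid is used here because the residual sum of squares can have several local minima in $x_t$, which a grid makes visible; `nls()` started from `xt_hat` would be a natural refinement.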