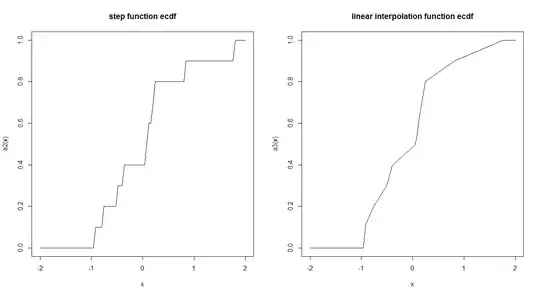

Empirical CDF functions are usually estimated by a step function. Is there a reason why this is done in such a way and not by using a linear interpolation? Does the step function has any interesting theoretical properties which make us prefer it?

Here is an example of the two:

ecdf2 <- function (x) {

x <- sort(x)

n <- length(x)

if (n < 1)

stop("'x' must have 1 or more non-missing values")

vals <- unique(x)

rval <- approxfun(vals, cumsum(tabulate(match(x, vals)))/n,

method = "linear", yleft = 0, yright = 1, f = 0, ties = "ordered")

class(rval) <- c("ecdf", class(rval))

assign("nobs", n, envir = environment(rval))

attr(rval, "call") <- sys.call()

rval

}

set.seed(2016-08-18)

a <- rnorm(10)

a2 <- ecdf(a)

a3 <- ecdf2(a)

par(mfrow = c(1,2))

curve(a2, -2,2, main = "step function ecdf")

curve(a3, -2,2, main = "linear interpolation function ecdf")