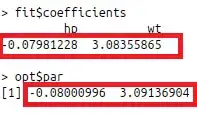

I am getting different results (close, but not exactly the same) from R's `glm` and from solving the logistic regression optimization problem manually. Could anyone tell me where the problem is?

Is BFGS failing to converge? Or is it a numerical problem with finite precision?

Thanks

```r
# logistic regression without intercept
fit=glm(factor(vs) ~ hp+wt-1, mtcars, family=binomial())

# manually write logistic loss and use BFGS to solve
x=as.matrix(mtcars[,c(4,6)])
y=ifelse(mtcars$vs==1,1,-1)

lossLogistic <- function(w){
  L=log(1+exp(-y*(x %*% w)))
  return(sum(L))
}

opt=optim(c(1,1),lossLogistic, method="BFGS")
```
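One way to probe the convergence hypothesis is sketched below. It reruns the same optimization with an analytic gradient and a tighter stopping tolerance, then prints both sets of coefficients side by side. The helper `gradLogistic` and the values `reltol = 1e-12` and `maxit = 1000` are my own assumptions, not settings from the code above. When no gradient is passed, `optim` approximates it by finite differences, and its default `reltol` is about `1e-8`, so either could plausibly account for a small gap.

```r
# Reference fit with glm, same model as above (no intercept)
fit <- glm(factor(vs) ~ hp + wt - 1, data = mtcars, family = binomial())

x <- as.matrix(mtcars[, c("hp", "wt")])   # same columns as mtcars[, c(4, 6)]
y <- ifelse(mtcars$vs == 1, 1, -1)        # labels coded as -1 / +1

# Logistic loss: sum_i log(1 + exp(-y_i * x_i'w))
lossLogistic <- function(w) sum(log1p(exp(-y * (x %*% w))))

# Analytic gradient (hypothetical helper): -sum_i y_i * x_i * plogis(-y_i * x_i'w)
gradLogistic <- function(w) {
  m <- as.vector(y * (x %*% w))
  -as.vector(crossprod(x, y * plogis(-m)))
}

# Tighter tolerance and more iterations than the defaults (assumed values)
opt <- optim(c(1, 1), lossLogistic, gr = gradLogistic, method = "BFGS",
             control = list(reltol = 1e-12, maxit = 1000))

opt$convergence                          # 0 means optim reports success
rbind(glm = coef(fit), optim = opt$par)  # compare the two estimates
```

If the two rows agree more closely than before, that would point to the stopping criterion or the numerical gradient rather than to a difference between the two objectives.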