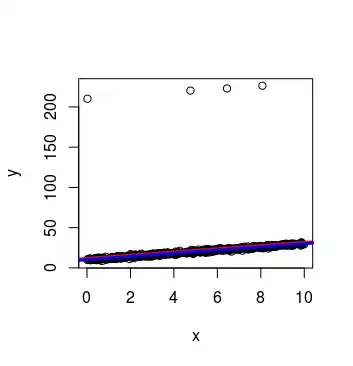

I'm trying to simulate a regression model with outliers to implement and understand more deeply the robust regression. I tried using a mixture between normal errors and uniforms.But as you can see, the estimates do not suffer large variations. I have also tried with a mixture of normal errors, but does not work. My aim is to illustrate the benefits of using M-estimates. Additionally, if you could help generate outliers with high leverage (to use S-estimates) would be very grateful

library(quantreg)

rm(list=ls())

set.seed(1234)

n<-500

y<-as.numeric(n)

x<-as.numeric(n)

error<-as.numeric(n)

for (i in 1:n){

x1 <- rnorm(1,0,1)

x2 <- runif(1,200,201)

u <- runif(1)

k <- as.integer(u > 0.99) #vector of 0?s and 1?s

error[i] <- (1-k)* x1 + k* x2 #the mixture

x[i]<-runif(1,0,10)

y[i]<-10+2*x[i]+error[i]

}

hist(error)

ls<-summary(lm(y~x))

l1<-summary(rq(y~x))

ls$coef[,1]

l1$coef[,1]

plot(y~x)

abline(a=ls$coef[1,1],b=ls$coef[2,1], col="red", lwd=3)

abline(a=l1$coef[1,1],b=l1$coef[2,1], col="blue", lwd=3)