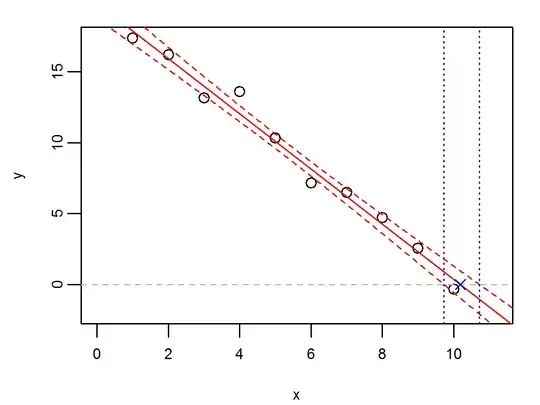

Since the standard errors of a linear regression are usually given for the response variable (the fitted y values), I'm wondering how to obtain confidence intervals in the other direction, e.g. for the x-intercept. I can visualize what the interval might be, but I'm sure there must be a straightforward way to compute it. Below is an example in R of how to visualize this:

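```r
set.seed(1)
x <- 1:10
a <- 20
b <- -2
y <- a + b*x + rnorm(length(x), mean=0, sd=1)

fit <- lm(y ~ x)
# x-intercept of the fitted line: solve a + b*x = 0 for x
XINT <- unname(-coef(fit)[1]/coef(fit)[2])

plot(y ~ x, xlim=c(0, XINT*1.1), ylim=c(-2, max(y)))
abline(h=0, lty=2, col=8); abline(fit, col=2)
points(XINT, 0, col=4, pch=4)

newdat <- data.frame(x=seq(-2, 12, length.out=1000))

# 95% pointwise CI of the fitted line
pred <- predict(fit, newdata=newdat, se.fit=TRUE)
# t quantile with n - 2 df; 1.96 is only the large-sample value
tcrit <- qt(0.975, df=df.residual(fit))
newdat$yplus <- pred$fit + tcrit*pred$se.fit
newdat$yminus <- pred$fit - tcrit*pred$se.fit
lines(yplus ~ x, newdat, col=2, lty=2)
lines(yminus ~ x, newdat, col=2, lty=2)

# approximate CI of XINT: grid points where each band is closest to y = 0
# (with a negative slope the lower band crosses first, the upper band last)
lwr <- newdat$x[which.min(abs(newdat$yminus))]
upr <- newdat$x[which.min(abs(newdat$yplus))]
abline(v=c(lwr, upr), lty=3, col=4)
```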
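The grid search above only locates the band crossings to the resolution of `newdat$x`. A minimal sketch of a sharper version, assuming the same simulated data and a negative slope (so the lower band reaches y = 0 before the fitted line does and the upper band after it): the hypothetical helper `band()` evaluates one side of the pointwise band at a single x, and `uniroot()` solves for where it equals zero. It uses the t quantile `qt(0.975, df.residual(fit))` rather than 1.96, which matters with only 8 residual degrees of freedom. To my understanding, inverting the pointwise band this way is the same construction as Fieller's interval for the ratio -a/b. For comparison, the sketch also computes a symmetric delta-method interval, which is a different, swapped-in technique. The names `a_hat`, `b_hat`, `tcrit`, `band`, `se_delta` and `delta_ci` are illustrative, and the search intervals `c(0, xint)` and `c(xint, 20)` are assumed to bracket the crossings.

```r
# Sketch: solve for where the pointwise 95% band crosses y = 0,
# and compare with a delta-method interval. Same simulated data as above.
set.seed(1)
x <- 1:10
y <- 20 - 2*x + rnorm(length(x), mean=0, sd=1)
fit <- lm(y ~ x)

a_hat <- unname(coef(fit)[1])
b_hat <- unname(coef(fit)[2])
xint <- -a_hat/b_hat                       # estimated x-intercept
tcrit <- qt(0.975, df=df.residual(fit))    # t quantile with n - 2 df

# Hypothetical helper: one side of the pointwise band at a single x0
# (side = -1 gives the lower band, side = +1 the upper band)
band <- function(x0, side) {
  p <- predict(fit, newdata=data.frame(x=x0), se.fit=TRUE)
  unname(p$fit + side*tcrit*p$se.fit)
}

# Assumes a negative slope: the lower band hits 0 left of xint and the
# upper band right of it; the search intervals are assumed to bracket them
lwr <- uniroot(band, c(0, xint), side=-1)$root
upr <- uniroot(band, c(xint, 20), side=+1)$root

# Delta method: gradient of -a/b with respect to (a, b)
g <- c(-1/b_hat, a_hat/b_hat^2)
se_delta <- sqrt(drop(crossprod(g, vcov(fit) %*% g)))
delta_ci <- xint + c(-1, 1)*tcrit*se_delta

print(c(lower=lwr, estimate=xint, upper=upr))
print(delta_ci)
```

The band-inversion interval is generally asymmetric around the estimate, while the delta-method one is symmetric by construction. If the slope is not clearly different from zero, a band may never cross y = 0 on one side; the Fieller set is then unbounded, and `uniroot()` stops with an error because the function values at the interval ends do not have opposite signs.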