I'm trying to model a logistic regression in R between two simple variables:

- Rating: an independent, ordered categorical variable ranging from 1 to 99 (specifically 1, 2, 3, 4, 5, 99; 1 is the best)

- Result: A dependent binary variable (0-1, not accepted/accepted)

The model call I use is:

    glm(formula = result_dummy ~ best_rating, family = binomial(link = "logit"),
        data = cd[1:10000, ])

`result_dummy` is a 0/1 numeric variable (the original result column was a factor), and `scaled_rating` is the rating column after applying R's `scale` function.
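As a small illustration of what `scale` does to a coded rating like this, here is a sketch on a toy vector holding just the six codes listed above, one observation each. This is an assumption for the example, not the real `cd` data, and `toy_rating` is a made-up name. `scale` only centers the values and divides by the standard deviation, so the relative spacing between codes is preserved and 99 still sits far away from 1 to 5.

```r
# Toy vector with the six rating codes, one observation each (hypothetical data)
toy_rating <- c(1, 2, 3, 4, 5, 99)

# scale() returns a one-column matrix; as.numeric() drops it to a plain vector
toy_scaled <- as.numeric(scale(toy_rating))

# Codes 1..5 end up bunched together, while 99 is pushed far away
print(round(toy_scaled, 3))

# Scaling is linear, so the 5 -> 99 gap is still 94 times the 1 -> 2 gap
gap_ratio <- (toy_scaled[6] - toy_scaled[5]) / (toy_scaled[2] - toy_scaled[1])
print(gap_ratio)
```

On the real data the mean and standard deviation depend on how often each code occurs, so the exact scaled values will differ from this toy case.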

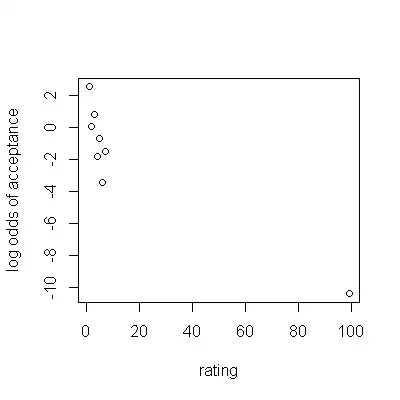

I expected to find a negative relationship (lower rating -> higher probability of acceptance), but the more samples I use, the odder the results become:

10 samples:

    Coefficients:
                  Estimate Std. Error z value Pr(>|z|)
    (Intercept)     0.6484     0.7413   0.875    0.382
    scaled_rating  -5.9403     5.8179  -1.021    0.307

100 samples:

    Coefficients:
                  Estimate Std. Error z value Pr(>|z|)
    (Intercept)   -0.09593    0.27492  -0.349  0.72714
    scaled_rating -5.06251    1.76645  -2.866  0.00416 **

1000 samples:

    Coefficients:
                  Estimate Std. Error z value Pr(>|z|)
    (Intercept)   -0.03539    0.09335  -0.379    0.705
    scaled_rating -6.81964    0.62003 -10.999   <2e-16 ***

10000 samples:

    Coefficients:
                  Estimate Std. Error z value Pr(>|z|)
    (Intercept)     0.2489     0.0291   8.553   <2e-16 ***
    scaled_rating  -7.2319     0.2004 -36.094   <2e-16 ***

Note: I know that after fitting I should check the residual plots, normality assumptions, etc., but I still find this behaviour really strange.

I also get similar results when I simply use the raw rating column instead of the scaled one.

Edit:

The rating variable is not really ordinal, so, as pointed out by @Scortchi, it is probably better to treat it as a categorical variable.
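A minimal sketch of such a categorical fit, assuming the counts from the frequency table further down in this post are typed in by hand as an aggregated data frame. The names `freq`, `rejected`, `accepted` and `fit_cat` are made up for the illustration; on the raw data the same idea would be to put `factor(best_rating)` on the right-hand side of the formula.

```r
# Counts copied from the frequency table in this post (one row per rating)
freq <- data.frame(
  rating   = c(1, 2, 3, 4, 5, 6, 7, 99),
  rejected = c(2881, 13878, 36839, 43511, 37330, 31801, 19096, 10008),
  accepted = c(42564, 129292, 179500, 97148, 47002, 21228, 6034, 3)
)

# Treat rating as an unordered factor: one coefficient per level, rating 1 as baseline
fit_cat <- glm(cbind(accepted, rejected) ~ factor(rating),
               family = binomial(link = "logit"), data = freq)

# With one parameter per level, fitted probabilities match the observed proportions
freq$observed <- freq$accepted / (freq$accepted + freq$rejected)
freq$fitted   <- as.numeric(fitted(fit_cat))
print(freq)
```

Because the factor model has one parameter per rating level, it simply reproduces the observed acceptance share in each level; it says nothing about the variables left out of the model.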

This certainly gives better results and a more stable model. Obviously the model is a simple one, and the residual error will always be high because some variables have not been included in the model.

Indeed, the frequency table that was requested shows that the rating variable IS NOT sufficient to clearly separate the two result outcomes:

            0      1
    1    2881  42564
    2   13878 129292
    3   36839 179500
    4   43511  97148
    5   37330  47002
    6   31801  21228
    7   19096   6034
    99  10008      3
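To read the table as acceptance rates rather than raw counts, here is a short sketch that re-enters the counts above by hand (the object names `counts`, `rates` and `log_odds` are made up for the example) and computes the share of each outcome within each rating, plus the empirical log-odds of acceptance:

```r
# Frequency table from above: rows are ratings, columns are result
# (0 = not accepted, 1 = accepted)
counts <- matrix(
  c( 2881,  42564,
    13878, 129292,
    36839, 179500,
    43511,  97148,
    37330,  47002,
    31801,  21228,
    19096,   6034,
    10008,      3),
  ncol = 2, byrow = TRUE,
  dimnames = list(rating = c("1", "2", "3", "4", "5", "6", "7", "99"),
                  result = c("0", "1"))
)

# Row-wise proportions: share of each outcome within a rating
rates <- prop.table(counts, margin = 1)
print(round(rates, 3))

# Empirical log-odds of acceptance for each rating
log_odds <- log(counts[, "1"] / counts[, "0"])
print(round(log_odds, 2))
```

The acceptance share falls steadily from rating 1 to rating 99, but most ratings contain a substantial number of both outcomes, which is the overlap mentioned above.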