Suppose we want to solve following optimization problem (it is a PCA problem in this post)

$$ \underset{\mathbf w}{\text{maximize}}~~ \mathbf w^\top \mathbf{Cw} \\ \text{s.t.}~~~~~~ \mathbf w^\top \mathbf w=1 $$

As mentioned in the linked post, using the Lagrange multiplier, we can change the problem into

$$ {\text{minimize}} ~~ \mathbf w^\top \mathbf{Cw}-\lambda(\mathbf w^\top \mathbf w-1)) $$ Differentiating, we obtain $\mathbf{Cw}-\lambda\mathbf w=0$, which is the eigenvector equation. Problem solved and $\lambda$ is the largest eigenvalue.

I am trying to do a numerical example here to understand more about how Lagrange multiplier changed the problem, but not sure my validation process is correct.

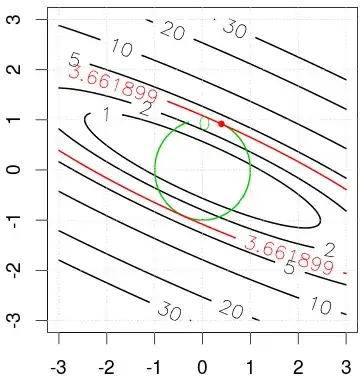

I experimented with the iris data's co-variance matrix (see code). The figure shows geometric solution to the problem, where the black curve are the contours of the objective function, and green curve is the constraints. The red curve shows the optimal solution that can maximize the objective and satisfy constraints.

In my code, I am trying to use optimx to minimize a unconstrained objective function. I am replacing the $\lambda$ with the solution from eigen decomposition.

X=iris[,c(1,3)]

X$Sepal.Length=X$Sepal.Length-mean(X$Sepal.Length)

X$Petal.Length=X$Petal.Length-mean(X$Petal.Length)

C=cov(X)

r=eigen(C)

obj_fun<-function(x){

w=as.matrix(c(x[1],x[2]),ncol=1)

lambda=r$values[1]

v=t(w) %*% C %*% w + lambda *(t(w) %*% w -1)

return(as.numeric(v))

}

gr<-function(w) {

lambda=r$values[1]

v=2* C %*% w + 2*lambda* w

return(v)

}

res=optimx::optimx(c(1,2), obj_fun,gr, method="BFGS")

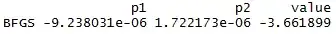

I am getting following results, where the objective function is negative of the optimal value to the graphical solution. And two parameters p1 and p2 are 0.

My question is that is such validation method right? i.e., can we replace $\lambda$ with largest eigen value and minimize the objective function $\mathbf w^\top \mathbf{Cw}-\lambda(\mathbf w^\top \mathbf w-1))$ to get a solution?