This question results from the discussion following a previous question: What is the connection between partial least squares, reduced rank regression, and principal component regression?

For principal component analysis, a commonly used probabilistic model is $$\mathbf x = \sqrt{\lambda} \mathbf{w} z + \boldsymbol \epsilon \in \mathbb R^p,$$ where $z\sim \mathcal N(0,1)$, $\mathbf{w}\in S^{p-1}$, $\lambda > 0$, and $\boldsymbol\epsilon \sim \mathcal N(0,\mathbf{I}_p)$. Then the population covariance of $\mathbf{x}$ is $\lambda \mathbf{w}\mathbf{w}^T + \mathbf{I}_p$, i.e., $$\mathbf{x}\sim \mathcal N(0,\lambda \mathbf{w}\mathbf{w}^T + \mathbf{I}_p).$$ The goal is to estimate $\mathbf{w}$. This is known as the spiked covariance model, which is frequently used in the PCA literature. The problem of estimating the true $\mathbf{w}$ can be solved by maximizing $\operatorname{Var} (\mathbf{Xw})$ over $\mathbf{w}$ on the unit sphere.

As pointed out in the answer to the previous question by @amoeba, reduced rank regression, partial least squares, and canonical correlation analysis have closely related formulations,

\begin{align} \mathrm{PCA:}&\quad \operatorname{Var}(\mathbf{Xw}),\\ \mathrm{RRR:}&\quad \phantom{\operatorname{Var}(\mathbf {Xw})\cdot{}}\operatorname{Corr}^2(\mathbf{Xw},\mathbf {Yv})\cdot\operatorname{Var}(\mathbf{Yv}),\\ \mathrm{PLS:}&\quad \operatorname{Var}(\mathbf{Xw})\cdot\operatorname{Corr}^2(\mathbf{Xw},\mathbf {Yv})\cdot\operatorname{Var}(\mathbf {Yv}) = \operatorname{Cov}^2(\mathbf{Xw},\mathbf {Yv}),\\ \mathrm{CCA:}&\quad \phantom{\operatorname{Var}(\mathbf {Xw})\cdot {}}\operatorname{Corr}^2(\mathbf {Xw},\mathbf {Yv}). \end{align}

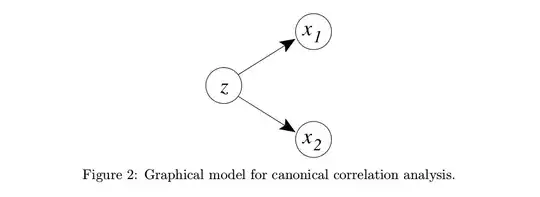

The question is, what are the probabilistic models behind RRR, PLS, and CCA? In particular, I am thinking about $$(\mathbf{x}^T, \mathbf{y}^T)^T \sim \mathcal N(0, \mathbf{\Sigma}).$$ How does $\mathbf{\Sigma}$ depend on $\mathbf{w}$ and $\mathbf{v}$ in RRR, PLS, and CCA? Moreover, is there a unified probabilistic model (like the spiked covariance model for PCA) for them?