These are three different methods, and none of them can be seen as a special case of another.

Formally, if $\mathbf X$ and $\mathbf Y$ are centered predictor ($n \times p$) and response ($n\times q$) datasets and if we look for the first pair of axes, $\mathbf w \in \mathbb R^p$ for $\mathbf X$ and $\mathbf v \in \mathbb R^q$ for $\mathbf Y$, then these methods maximize the following quantities:

\begin{align}
\mathrm{PCA:}&\quad \operatorname{Var}(\mathbf{Xw}) \\
\mathrm{RRR:}&\quad \phantom{\operatorname{Var}(\mathbf {Xw})\cdot{}}\operatorname{Corr}^2(\mathbf{Xw},\mathbf {Yv})\cdot\operatorname{Var}(\mathbf{Yv}) \\
\mathrm{PLS:}&\quad \operatorname{Var}(\mathbf{Xw})\cdot\operatorname{Corr}^2(\mathbf{Xw},\mathbf {Yv})\cdot\operatorname{Var}(\mathbf {Yv}) = \operatorname{Cov}^2(\mathbf{Xw},\mathbf {Yv})\\
\mathrm{CCA:}&\quad \phantom{\operatorname{Var}(\mathbf {Xw})\cdot {}}\operatorname{Corr}^2(\mathbf {Xw},\mathbf {Yv})
\end{align}

(I added canonical correlation analysis (CCA) to this list.)
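
As a numerical illustration of these four objectives, here is a minimal NumPy sketch on made-up random data. It assumes the usual unit-norm constraints $\|\mathbf w\| = \|\mathbf v\| = 1$ (the formulas above leave the normalization implicit), and the helper names (`inv_sqrt`, `top_pair`, `objectives`) are mine, not from any library. Each first pair of axes is obtained from an SVD of a suitably whitened cross-covariance matrix.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, q = 500, 4, 3

# Synthetic data (an assumption for illustration): correlated predictors,
# responses that depend linearly on them plus noise. Both are centered.
X = rng.normal(size=(n, p)) @ rng.normal(size=(p, p))
Y = X @ rng.normal(size=(p, q)) + rng.normal(size=(n, q))
X -= X.mean(axis=0)
Y -= Y.mean(axis=0)

Sxx = X.T @ X / (n - 1)
Syy = Y.T @ Y / (n - 1)
Sxy = X.T @ Y / (n - 1)

def inv_sqrt(S):
    """Inverse symmetric square root of a positive-definite matrix."""
    vals, vecs = np.linalg.eigh(S)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T

def top_pair(M):
    """Leading left and right singular vectors of M."""
    U, _, Vt = np.linalg.svd(M)
    return U[:, 0], Vt[0]

def unit(a):
    return a / np.linalg.norm(a)

def var(a):
    return a.var(ddof=1)

def corr2(a, b):
    return np.corrcoef(a, b)[0, 1] ** 2

def objectives(w, v):
    """Evaluate all four quantities for a given pair of unit-norm axes."""
    a, b = X @ w, Y @ v
    return {
        "PCA": var(a),
        "RRR": corr2(a, b) * var(b),
        "PLS": var(a) * corr2(a, b) * var(b),
        "CCA": corr2(a, b),
    }

# PCA: top eigenvector of Sxx (eigh sorts eigenvalues in ascending order).
w_pca = np.linalg.eigh(Sxx)[1][:, -1]

# PLS: top singular vectors of the raw cross-covariance.
w_pls, v_pls = top_pair(Sxy)

# RRR: Var(Xw) is divided out, so whiten X only.
u, v_rrr = top_pair(inv_sqrt(Sxx) @ Sxy)
w_rrr = unit(inv_sqrt(Sxx) @ u)

# CCA: both variances are divided out, so whiten X and Y.
u, z = top_pair(inv_sqrt(Sxx) @ Sxy @ inv_sqrt(Syy))
w_cca = unit(inv_sqrt(Sxx) @ u)
v_cca = unit(inv_sqrt(Syy) @ z)

pairs = {"RRR": (w_rrr, v_rrr), "PLS": (w_pls, v_pls), "CCA": (w_cca, v_cca)}

print("Var(X w_pca) =", round(var(X @ w_pca), 3))
for name, (w, v) in pairs.items():
    print(name, {k: round(val, 3) for k, val in objectives(w, v).items()})
```

Each pair should score highest on its own objective among the pairs listed, and none of the axes needs to coincide with another, which is the point made above.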

I suspect the confusion arises because in SAS all three methods seem to be implemented via the same procedure, PROC PLS, with different parameters. So it might seem that all three methods are special cases of PLS because that's how the SAS procedure is named. This is, however, just unfortunate naming. In reality, PLS, RRR, and PCR are three different methods that just happen to be implemented in SAS in one procedure that for some reason is called PLS.

Both tutorials that you linked to are actually very clear about that. Page 6 of the presentation tutorial states the objectives of all three methods and does not say PLS "becomes" RRR or PCR, contrary to what you claimed in your question. Similarly, the SAS documentation explains that the three methods are different, giving formulas and intuition:

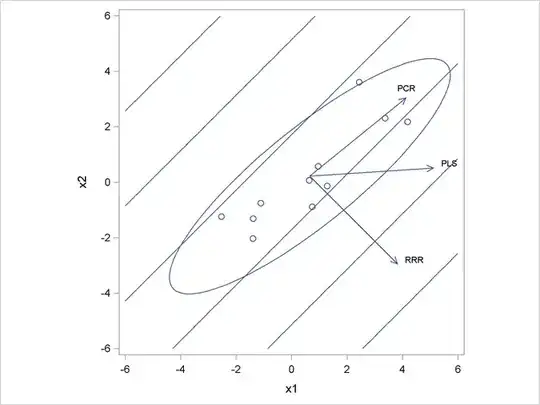

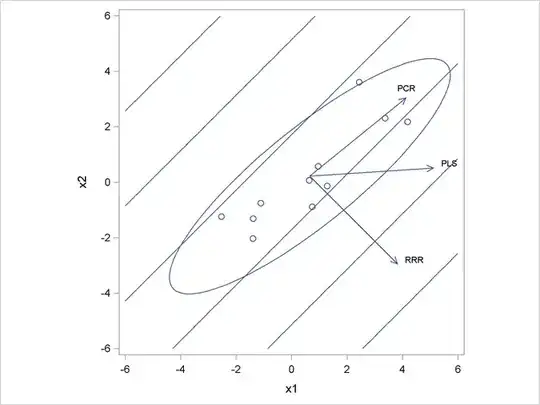

> [P]rincipal components regression selects factors that explain as much predictor variation as possible, reduced rank regression selects factors that explain as much response variation as possible, and partial least squares balances the two objectives, seeking for factors that explain both response and predictor variation.

There is even a figure in the SAS documentation showing a nice toy example where the three methods give different solutions. In this toy example there are two predictors $x_1$ and $x_2$ and one response variable $y$. The direction in $X$ that is most correlated with $y$ happens to be orthogonal to the direction of maximal variance in $X$. Hence PC1 is orthogonal to the first RRR axis, and the PLS axis is somewhere in between.

One can add a ridge penalty to the RRR loss function, obtaining ridge reduced-rank regression, or RRRR. This will pull the regression axis towards the PC1 direction, somewhat similar to what PLS is doing. However, the cost function for RRRR cannot be written in a PLS form, so they remain different.
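
The ridge variant can be sketched numerically as well. The snippet below assumes the penalized loss $\|\mathbf Y - \mathbf{XB}\|^2 + \lambda\|\mathbf B\|^2$ with a rank-one constraint on $\mathbf B$ (one common way to write ridge RRR; the formula is my assumption, it is not spelled out above), and uses the fact that this loss equals the ordinary RRR loss on data augmented with $\sqrt{\lambda}\,\mathbf I$ rows. The function name `rrrr_axis` and the synthetic data are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p, q = 300, 5, 3

# Synthetic data (illustrative assumption): predictors with unequal variances.
X = rng.normal(size=(n, p)) * np.array([5.0, 2.0, 1.0, 0.5, 0.2])
Y = X @ rng.normal(size=(p, q)) + rng.normal(size=(n, q))
X -= X.mean(axis=0)
Y -= Y.mean(axis=0)

def rrrr_axis(X, Y, lam):
    """First predictor axis of rank-one ridge RRR (unit norm).

    Minimizes ||Y - X B||^2 + lam * ||B||^2 subject to rank(B) = 1 by
    running plain RRR on the augmented data [X; sqrt(lam) I], [Y; 0].
    """
    p = X.shape[1]
    # Ridge solution = OLS solution on the augmented data.
    B_ridge = np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ Y)
    X_aug = np.vstack([X, np.sqrt(lam) * np.eye(p)])
    # Leading right singular vector of the augmented fitted values.
    _, _, Vt = np.linalg.svd(X_aug @ B_ridge, full_matrices=False)
    w = B_ridge @ Vt[0]
    return w / np.linalg.norm(w)

# PC1: top eigenvector of X^T X (eigh sorts eigenvalues in ascending order).
pc1 = np.linalg.eigh(X.T @ X)[1][:, -1]

for lam in [0.0, 1e2, 1e4, 1e6]:
    w = rrrr_axis(X, Y, lam)
    print(f"lambda = {lam:g}: |cos(w, PC1)| = {abs(w @ pc1):.3f}")
```

The printed values show how the alignment with PC1 changes with $\lambda$ on this particular dataset; a single random example is only an illustration, not a general result.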

Note that when there is only one response variable $y$, CCA = RRR = usual regression.
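
A short sketch of why, using the objectives listed above and assuming the normalization $v = 1$: with a single response, $\mathbf Y = \mathbf y$ and $\mathbf v$ reduces to a scalar, so $\operatorname{Var}(\mathbf{Yv}) = \operatorname{Var}(\mathbf y)$ does not depend on $\mathbf w$. The RRR and CCA objectives then differ only by a constant factor, and both are maximized by the ordinary least squares direction:

\begin{align}
\mathrm{RRR:}&\quad \operatorname{Corr}^2(\mathbf{Xw},\mathbf y)\cdot\operatorname{Var}(\mathbf y) \;\propto\; \operatorname{Corr}^2(\mathbf{Xw},\mathbf y) \quad (\mathrm{CCA}) \\
\Rightarrow&\quad \mathbf w \propto (\mathbf X^\top \mathbf X)^{-1}\mathbf X^\top \mathbf y
\end{align}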