I am using linear regression to estimate a quantity that is always non-negative in reality. The predictor variables are also non-negative. For instance, regressing salary on years of education and age: every variable in this case is non-negative.

Because the intercept is negative, my model (fitted with OLS) produces some negative predictions when the value of the predictor variable is low relative to the range of all values.

This topic has already been covered here, and I am also aware that forcing the intercept to 0 is discouraged, so it seems that I have to accept this model as the one I have to use. However, my question is about the accepted norms and rules for evaluating such a model. Are there any particular rules? Specifically:

- If I get a negative estimate, can I just round it to 0?

- If the observed value is 100, the predicted value is -300, and I know that the minimum possible value is 0, is the error 400 or 100 when calculating, for instance, the mean error (ME) and the RMSE?
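
To make the second question concrete, here is a minimal sketch of the two ways I could score the predictions. The numbers are made up for illustration (only the 100 / -300 pair comes from the example above), the helper names `mean_error` and `rmse` are my own, and taking ME as observed minus predicted is an assumption about the sign convention.

```python
import math

# Hypothetical observed values and raw OLS predictions. Only the first pair
# (100, -300) comes from the question; the rest are made up for illustration.
observed = [100.0, 250.0, 900.0, 1500.0]
predicted = [-300.0, 200.0, 1000.0, 1400.0]


def mean_error(obs, pred):
    """Mean error (bias), assumed here to be observed minus predicted."""
    return sum(o - p for o, p in zip(obs, pred)) / len(obs)


def rmse(obs, pred):
    """Root mean squared error."""
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(obs, pred)) / len(obs))


# Option A: score the raw model output, negative predictions included.
me_raw = mean_error(observed, predicted)
rmse_raw = rmse(observed, predicted)

# Option B: clip predictions at the known lower bound of 0, then score.
clipped = [max(0.0, p) for p in predicted]
me_clipped = mean_error(observed, clipped)
rmse_clipped = rmse(observed, clipped)

print(f"raw:     ME={me_raw:.1f}  RMSE={rmse_raw:.1f}")
print(f"clipped: ME={me_clipped:.1f}  RMSE={rmse_clipped:.1f}")
```

For the 100 / -300 pair, option A counts an error of 400 and option B an error of 100, so the two choices give different ME and RMSE values for the same fitted model.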

If it is relevant to the discussion: I have used both simple linear regression and multiple linear regression. Both result in several negative predictions.

Edit:

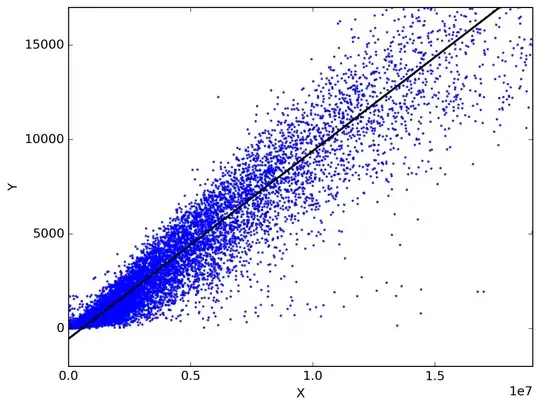

Here is the example of the samples with the fit:

The coefficients of the linear regression are 0.0010(x) and -540 (intercept).

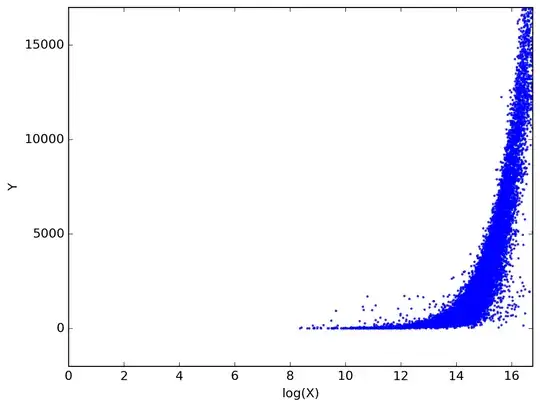

Here is what happens when I use log for the X:

Is linear regression suitable here?