I'm using linear regression to predict a price, which is always positive. I have only one feature, gross_area, which I standardized (z-score). The resulting values look like this:

```
array([[ 1. , -0.48311432],
       [ 1. ,  0.68052306],
       [ 1. ,  2.1426852 ],
       [ 1. , -1.17398593],
       [ 1. , -0.16265712]])
```

Here the 1 is the constant column for the intercept term. I estimated the parameters (coefficients) and got this:

```
array([[ 31780004.85045217],
       [ 27347542.4693376 ]])
```

The first entry is the intercept and the second is the coefficient for my feature gross_area.
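For reference, here is a minimal sketch of how a design matrix and coefficient vector like the ones above could be produced. It assumes theta was fitted by ordinary least squares (the post does not say whether the normal equation, gradient descent, or something else was used), and the gross_area and price values are made up purely for illustration.

```python
import numpy as np

# Hypothetical raw data: the post does not show the actual areas or prices.
gross_area = np.array([850.0, 1400.0, 2100.0, 520.0, 1000.0])
price = np.array([2.1e7, 3.6e7, 6.0e7, 1.2e7, 2.6e7])

# z-score standardization: subtract the mean, divide by the population std.
z = (gross_area - gross_area.mean()) / gross_area.std()

# Prepend a column of ones so the first coefficient acts as the intercept.
X = np.column_stack([np.ones_like(z), z])

# Ordinary least squares: minimize ||X @ theta - price||^2.
theta, *_ = np.linalg.lstsq(X, price.reshape(-1, 1), rcond=None)
intercept, slope = theta.ravel()

print(theta)                     # column vector of shape (2, 1), like the theta above
print(intercept, price.mean())   # equal, because z has mean zero
```

Under that OLS assumption, and if the z-scores were computed on the same training rows, the intercept 31780004.85 would simply be the mean training price.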

My problem is the following: when I take, for example, the fourth row and compute the matrix product XB to get its prediction, I get this:

```
In [797]: np.dot(training[4], theta)
Out[797]: array([-325625.35640697])
```
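The prediction is just intercept + slope × z. The sketch below reproduces it from the posted coefficients, assuming the row in question is the fourth one in the array shown earlier (z ≈ -1.174); the result matches Out[797] up to the rounding of the displayed z value. It also computes where the fitted line crosses zero.

```python
import numpy as np

# Coefficients copied from the post: [intercept, slope for standardized gross_area].
theta = np.array([[31780004.85045217],
                  [27347542.4693376]])

# Fourth row of the design matrix shown in the post (z is rounded to 8 decimals there).
row = np.array([1.0, -1.17398593])

pred = row @ theta  # intercept + slope * z, shape (1,)
print(pred)         # about -325625

# The fitted line is zero at z = -intercept / slope.
z_zero = -theta[0, 0] / theta[1, 0]
print(z_zero)       # about -1.162: any row with a smaller z gets a negative prediction
```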

This is clearly wrong, since my dependent variable cannot be negative. It seems that because standardization gives some rows a negative feature value, those rows end up with a negative predicted price. How is this possible, and how can I fix it? Thank you.
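One way to test the hypothesis that standardization itself causes the negative values is to fit the same data with and without it and compare the predictions. This is a sketch on made-up data, again assuming an ordinary least squares fit with an intercept column; `fit_predict` is a hypothetical helper, not part of any library.

```python
import numpy as np

# Hypothetical data; only the comparison between the two fits matters here.
gross_area = np.array([850.0, 1400.0, 2100.0, 520.0, 1000.0])
price = np.array([2.1e7, 3.6e7, 6.0e7, 1.2e7, 2.6e7])

def fit_predict(feature, target):
    """Fit OLS with an intercept column and return the in-sample predictions."""
    X = np.column_stack([np.ones_like(feature), feature])
    theta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return X @ theta

z = (gross_area - gross_area.mean()) / gross_area.std()

pred_raw = fit_predict(gross_area, price)  # feature in original units
pred_std = fit_predict(z, price)           # z-scored feature

# A z-score is an affine change of x, which the intercept and slope absorb,
# so both fits produce the same predictions (up to floating-point error).
print(np.allclose(pred_raw, pred_std))
```

If the two sets of predictions agree on your data as well, the sign of a prediction depends on where the fitted straight line sits for small areas, not on whether gross_area was standardized.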

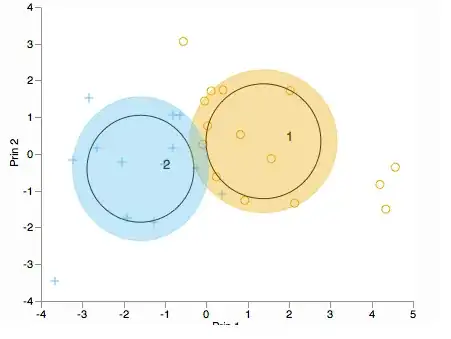

This is what I have predict graphically:

with y=price , x =gross area