I am trying to convey the results of a Bayesian statistical analysis to an audience uneducated with Bayesian statistics but familiar with the interpretation of p-values (verbal, non-publication setting). The difficulty lies not in interpreting the results, or explaining why a Bayesian analysis was chosen, but in summarizing those results without the need to fully explain the Bayesian process - I am unsure what statements I can accurately make that will best (and most accurately) convey the Bayesian interpretation.

As an example, consider the Bayesian style t-test as described by Kruschke's article Bayesian estimation supersedes the t-test with the following simulated data and a diffuse/uninformative prior. In R:

set.seed(1)

y1 = rt(50, 5, 5)

y2 = rt(50, 5, 6)

library(BEST)

best = BESTmcmc(y1, y2, numberSavedSteps=10000)

plot(best)

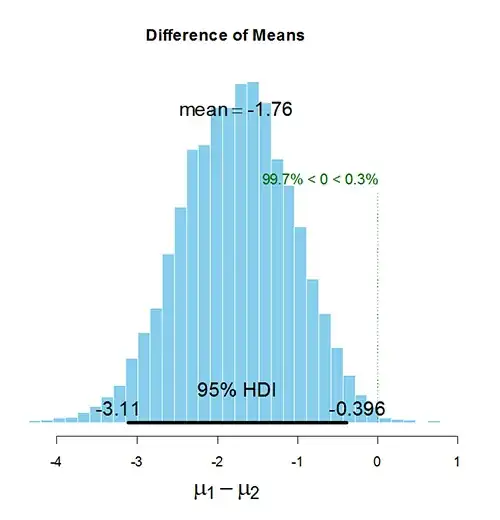

A discrete ROPE value of 0 falls outside the 95% HDI of $\mu$1-$\mu$2, suggesting the group means can be considered credibly different.

- How can this be quickly (and accurately) summarized to an audience uneducated in Bayesian statistics without fully explaining Bayesian statistics and its terminology (for example in terms of probability rather than ROPE, HDI, posterior, etc...)?

- Is it accurate to extrapolate and report probabilities based upon the credible interval(s)? More generally, is it correct to state that given a discrete ROPE, if X% of the credible interval falls to one side of that ROPE, then there is an X% probability (given the data) that that hypothesis (eg $\mu$1 is less/greater than $\mu$2) is correct? Using the values from the above example, this would read 'give this data, there is a 99.7% probability that the $\mu$1 is smaller than $\mu$2'.