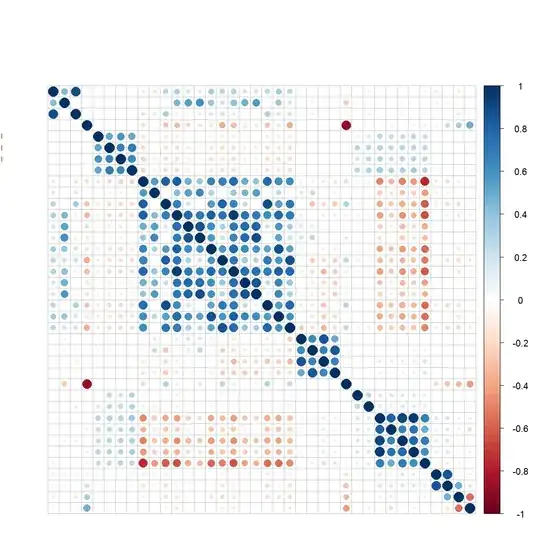

I am working on a problem of COPD exacerbation likelihood prediction, I have a total 62 attributes out of which 38 variables/attributes are of numeric/continuous type and remaining are either binary or ternary. I want to apply random forest to it but I am a beginner in ML and so far I have observed that in my data several of continuous variables are heavily correlated.

I want to know if I can use this facts to boost up accuracy of random forest. I am using R for my computation and below attached is the corrplot of continuous variables.

Asked

Active

Viewed 675 times

1

firefly

- 9

- 1

-

If you read about random forests, I think that one of it's claimed advantages is in the way it deals with correlated variables. Since any tree in the forest will only contain so many of the variables it allows one to understand among correlated variables , which are influencing the splits most heavily. It should also allow each variable to have it's say. – meh Jan 16 '16 at 15:40

-

@aginensky thanks for your reply... but please have a look here http://stats.stackexchange.com/questions/141619/wont-highly-correlated-variables-in-random-forest-distort-accuracy-and-feature – firefly Jan 16 '16 at 15:42

-

@aginensky also I want to know if I can do any pre processing to use this fact as an advantage that I have a large no. of numeric attributes and many of them show heavy correlation to boost up my accuracy. My approach is to build an ensemble of random forest and logistic regression and then use this ensemble to give a final output...please help me with this. thanks – firefly Jan 16 '16 at 15:43

-

(1) you might need to expand your question - why do you think the fact that the predictors are correlated can be used to boost accuracy? Is this something you've read? (2) the stackexchange post you reference is dealing with a very difference issue - one of variable selection. (3) your final approach is meant to be `rulefit` type implementation? – charles Jan 17 '16 at 00:21

-

I had a look at the link. I think it is a more complete (better?) version of what I said. As to your second question- have you considered boosting with something like a stump tree ? My thinking is that the issue with highly correlated variables would be that one winds up doing too much of 'noise modeling'. The boosting approach might eliminate that issue. – meh Jan 17 '16 at 15:01

-

A broad thumb rule: If you have more than ~500 samples and 62 attributes, I would not do too much variable selection and just let RF take care of it. If you only6 have ~50 samples, some modest variable selection may help. If the OOB explained variance is very low(regression, <45%), or if the OOB error is very high (binary classification >40%) due to intrinsic variance, then don't try boosting, but rather going more robust with modest var.selection and lowering tree sample.size. In reverse case of explained variance >80% you could try e.g. the xgboost package or any boost hack which works. – Soren Havelund Welling Jan 18 '16 at 09:30