When training an XGBoost model, I observe the following output:

[10] train-rmspe:0.360292 eval-rmspe:0.843193

[11] train-rmspe:0.358901 eval-rmspe:0.848542

[12] train-rmspe:0.355327 eval-rmspe:0.878116

[13] train-rmspe:0.349120 eval-rmspe:0.880048

[14] train-rmspe:0.343729 eval-rmspe:0.886429

[15] train-rmspe:0.337795 eval-rmspe:0.887312

[16] train-rmspe:0.331385 eval-rmspe:0.892312

[17] train-rmspe:0.329000 eval-rmspe:0.892327

[18] train-rmspe:0.325391 eval-rmspe:0.892305

[19] train-rmspe:0.323480 eval-rmspe:0.894754

[20] train-rmspe:0.321171 eval-rmspe:0.892071

[21] train-rmspe:0.320194 eval-rmspe:0.893531

[22] train-rmspe:0.318526 eval-rmspe:0.892274

[23] train-rmspe:0.315825 eval-rmspe:0.903235

[24] train-rmspe:0.315040 eval-rmspe:0.901118

[25] train-rmspe:0.313372 eval-rmspe:0.905540

[26] train-rmspe:0.312313 eval-rmspe:0.905291

[27] train-rmspe:0.311462 eval-rmspe:0.908073

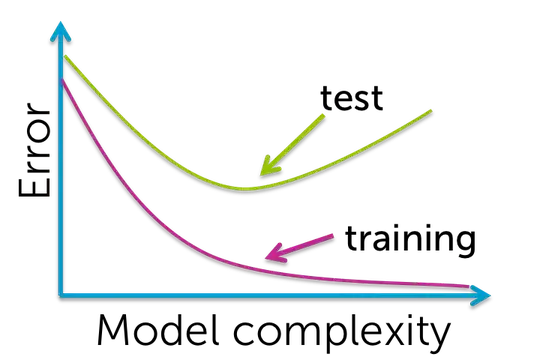

I don't understand why the error on the training set keeps decreasing while the error on the validation set keeps increasing. What does this mean? It happens with all of the data subsets.
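For context, here is a minimal sketch of what I understand the `rmspe` metric in the log to be, assuming it stands for root mean squared percentage error. The function name, the choice to skip zero targets, and the sample numbers are all illustrative assumptions, not my actual evaluation code or data.

```python
import math


def rmspe(y_true, y_pred):
    """Root mean squared percentage error.

    Assumption: rows whose true value is zero are skipped, because the
    percentage error is undefined for them.
    """
    ratios = [((t - p) / t) ** 2 for t, p in zip(y_true, y_pred) if t != 0]
    if not ratios:
        raise ValueError("no non-zero targets to evaluate")
    return math.sqrt(sum(ratios) / len(ratios))


# Hypothetical values, chosen only to illustrate the calculation.
y_true = [100.0, 200.0, 400.0]
y_pred = [110.0, 180.0, 400.0]
score = rmspe(y_true, y_pred)

# Because errors are relative, a single small target can dominate the score.
skewed = rmspe([1.0, 100.0], [3.0, 100.0])

print(f"rmspe: {score:.6f}, with one small target: {skewed:.6f}")
```

If the metric is computed this way, each error is divided by the true value, so rows with small targets weigh far more than rows with large ones.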