I want to use k-means to cluster my data. I have broken one column into 4 dummy variables and I have normalized all of the data to mean=0 and sd=1. Will k-means work with these dummy variables?

I have run the k-means in R and the results look pretty good, but are much more dependent on the value of these dummy variables than the rest of the data. My 'between_SS / total_ss' = 58%

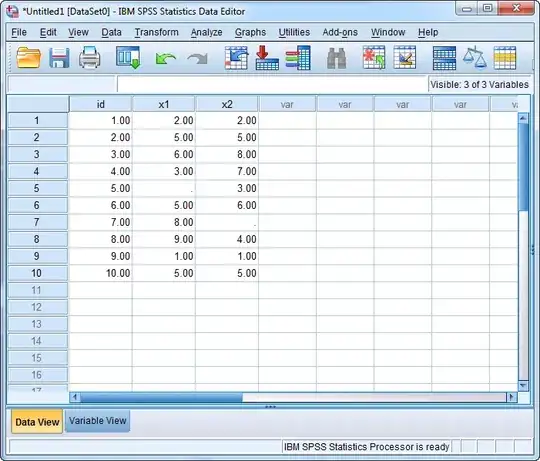

Data Sample:

num_months, sales, dummy_a, dummy_b, dummy_c, dummy_d

10, 102.33, 1, 0, 0, 0

5.7, 57.5, 0, 0, 0, 1

21.3, 152.88, 0, 1, 0, 0

Code:

library("ggplot2")

library("scatterplot3d")

mydata <- read.csv("data.csv", stringsAsFactors = FALSE)

data <- scale(data)

km <- kmeans(data, 4) #Break into 4 clusters

##...combine the dummy variables into 1 field so I can use it as the 3rd dimension to graph it

results$color[results$cluster1==1] <- "red"

results$color[results$cluster2==1] <- "blue"

results$color[results$cluster3==1] <- "green"

results$color[results$cluster4==1] <- "orange"

with(results, {

s3d <- scatterplot3d(num_months, sales, dummy_combined,

color=color, pch=19)

s3d.coords <- s3d$xyz.convert(num_months, sales, dummy_combined)

})

edit: Here is some code for my comment below. It uses kmeans to cluster 3-dimensional data, 2 of which are binary data. It looks like it does a fine job clustering.

seed(2015)

v1 <- c(runif(500, min = -10, -5), runif(500, min = 5, 10))

v2 <- round(runif(1000, min=0, max=1))

v3 <- round(runif(1000, min=0, max=1))

v1 <- scale(v1)

v2 <- scale(v2)

v3 <- scale(v3)

mat <- matrix(c(v1,v2),nrow=length(v1))

k <- kmeans(mat,4)

plot3d(v1, v2, v3, size=7, col = k$cluster)