I was reading the paper ImageNet Classification with Deep Convolutional Neural Networks and in section 3 were they explain the architecture of their Convolutional Neural Network they explain how they preferred using:

non-saturating nonlinearity $f(x) = max(0, x). $

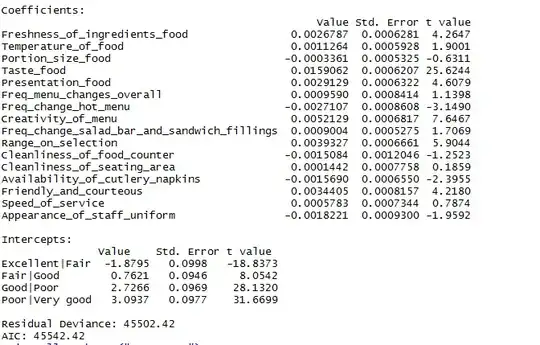

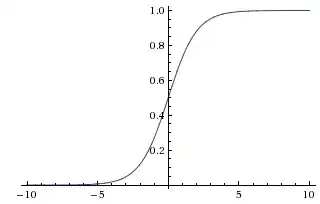

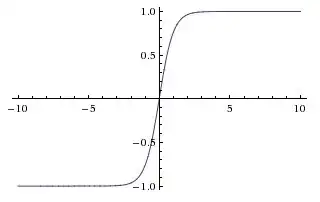

because it was faster to train. In that paper they seem to refer to saturating nonlinearities as the more traditional functions used in CNNs, the sigmoid and the hyperbolic tangent functions (i.e. $f(x) = tanh(x)$ and $f(x) = \frac{1}{1 + e^{-x}} = (1 + e^{-x})^{-1}$ as saturating).

Why do they refer to these functions as "saturating" or "non-saturating"? In what sense are these function "saturating" or "non-saturating"? What do those terms mean in the context of convolutional neural networks? Are they used in other areas of machine learning (and statistics)?