I would like to know how to transform negative values to Log(), since I have heteroskedastic data. I read that it works with the formula Log(x+1) but this doesn't work with my database and I continue getting NaNs as result. E.g. I get this Warning message (I didn't put my complete database because I think with one of my negative values is enough to show an example):

> log(-1.27+1)

[1] NaN

Warning message:

In log(-1.27 + 1) : NaNs produced

>

Thanks in advance

UPDATE:

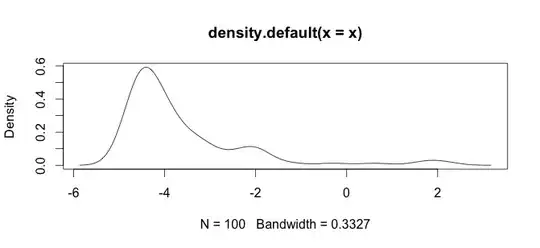

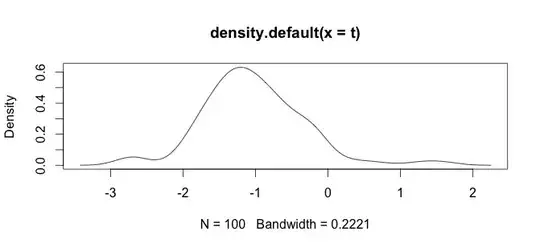

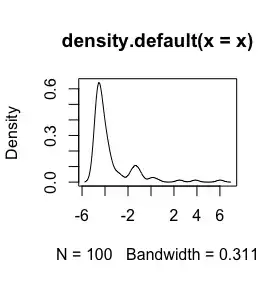

Here is an histogram of my data. I'm working with palaeontological time series of chemical measurements, E.g the difference between variables like Ca and Zn is too big, then I need some type of data standardization, that is why I'm testing the log() function.

This is my raw data