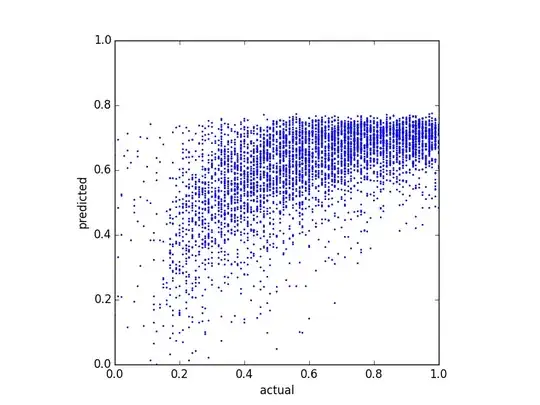

I am trying to predict a balance score and have tried several different regression methods. One thing I noticed is that the predicted values seem to have some kind of upper bound: the actual balance is in $[0.0, 1.0)$, but my predictions top out at about $0.8$. The following plot shows the actual vs. the predicted balance (predicted with linear regression):

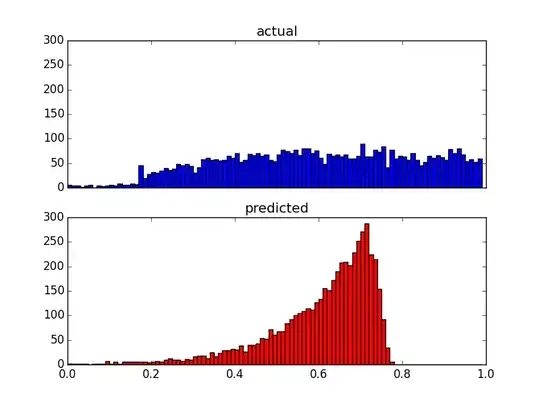

And here are two distribution plots of the same data:

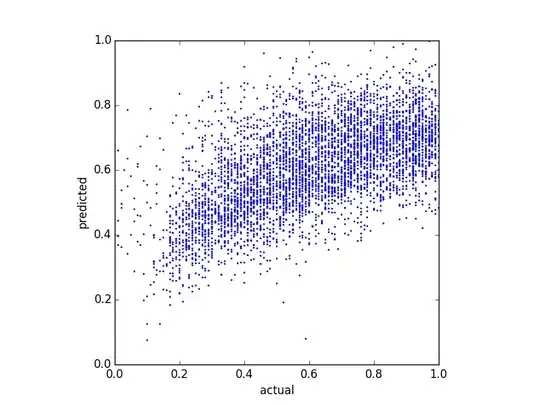

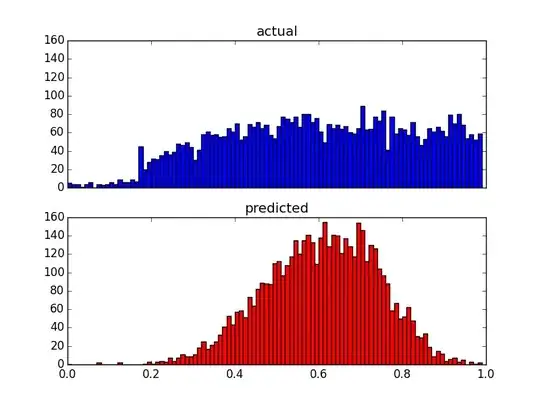

Since my predictors are very skewed (user data with a power-law distribution), I applied a Box-Cox transformation to them, which changes the results to the following:

Although it changes the distribution of the predictions, there is still that upper bound. So my questions are:

- What are possible reasons for such upper bounds in prediction results?

- How can I fix the predictions to correspond to the distribution of the actual values?

Bonus: Since the distribution of the predictions after the Box-Cox transformation seems to follow the distributions of the transformed predictors, is it possible that the two are directly linked? If so, is there a transformation I can apply to fit the distribution of the predictions to that of the actual values?

Edit: I used a simple linear regression with 5 predictors.
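
For reference, the setup described above can be sketched roughly as follows. This is only an illustration on synthetic data, not the actual data or code behind the plots. The Pareto-distributed predictors, the logistic target, the helper names `box_cox` and `fit_predict`, and the fixed $\lambda = 0$ are all assumptions made for the example. In practice `scipy.stats.boxcox` can estimate $\lambda$ per predictor by maximum likelihood.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_predictors = 1000, 5

# Hypothetical heavy-tailed (power-law-like) predictors; strictly positive,
# as the Box-Cox transformation requires.
X_raw = rng.pareto(a=2.0, size=(n_samples, n_predictors)) + 1.0

# Hypothetical target between 0 and 1: a noisy signal squashed by a logistic function.
signal = np.log(X_raw) @ rng.normal(size=n_predictors)
noise = rng.normal(scale=1.0, size=n_samples)
y = 1.0 / (1.0 + np.exp(-(signal + noise)))


def box_cox(x, lam):
    """Box-Cox transform with a fixed lambda (lam == 0 gives the log)."""
    return np.log(x) if lam == 0 else (x**lam - 1.0) / lam


def fit_predict(X, y):
    """Ordinary least squares with an intercept; returns in-sample predictions."""
    A = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return A @ coef


pred_raw = fit_predict(X_raw, y)                    # untransformed predictors
pred_bc = fit_predict(box_cox(X_raw, lam=0.0), y)   # Box-Cox-transformed predictors

# Compare the range of the actual values with the range of the predictions.
for name, pred in [("raw", pred_raw), ("box-cox", pred_bc)]:
    print(f"{name:8s} actual range [{y.min():.2f}, {y.max():.2f}]  "
          f"predicted range [{pred.min():.2f}, {pred.max():.2f}]")
```

Replacing `X_raw` and `y` with the real predictors and balance score would give the same kind of actual vs. predicted comparison as in the plots above.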