Certainly the mean plus one sd can exceed the largest observation.

Consider the sample 1, 5, 5, 5. It has mean 4 and standard deviation 2, so mean + sd is 6, one more than the sample maximum. Here's the calculation in R:

    > x = c(1, 5, 5, 5)
    > mean(x) + sd(x)
    [1] 6

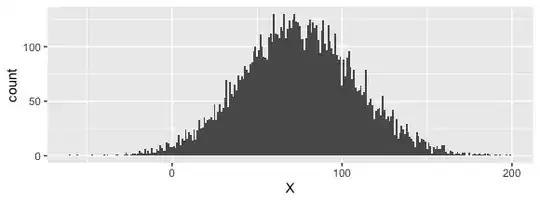

It's a common occurrence. It tends to happen when there's a bunch of high values and a tail off to the left (i.e. when there's strong left skewness and a peak near the maximum).

--

The same possibility applies to probability distributions, not just samples - the population mean plus the population sd can easily exceed the maximum possible value.

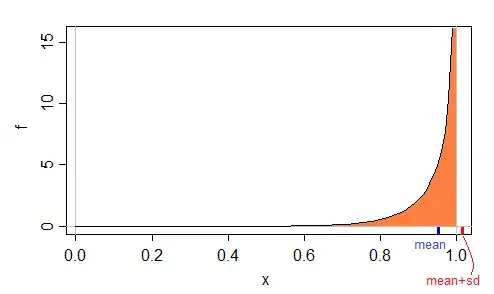

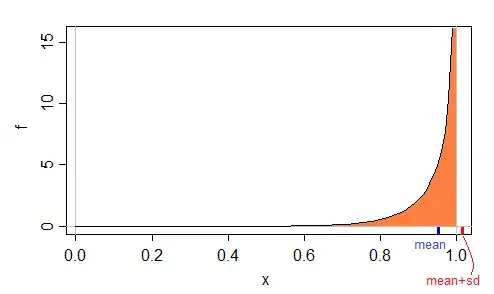

Here's an example of a $\text{beta}(10,\frac{1}{2})$ density, which has a maximum possible value of 1:

In this case, we can look at the Wikipedia page for the beta distribution, which states that the mean is:

$\operatorname{E}[X] = \frac{\alpha}{\alpha+\beta}\!$

and the variance is:

$\operatorname{var}[X] = \frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}\!$

(Though we needn't rely on Wikipedia, since they're pretty easy to derive.)

So for $\alpha=10$ and $\beta=\frac{1}{2}$ we have mean$\approx 0.9524$ and sd$\approx 0.0628$, so mean+sd$\approx 1.0152$, more than the possible maximum of 1.
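As a quick check of that arithmetic, here is a minimal R sketch that plugs the parameters into the two formulas above. The variable names (`shape1`, `shape2`, `mean_plus_sd`) are just illustrative choices, and the simulation at the end is an optional extra with an arbitrary seed and sample size, not part of the argument.

```r
# Beta parameters from the example: alpha = 10, beta = 1/2
shape1 <- 10
shape2 <- 0.5

# Mean and variance from the formulas quoted above
beta_mean <- shape1 / (shape1 + shape2)
beta_var  <- shape1 * shape2 /
  ((shape1 + shape2)^2 * (shape1 + shape2 + 1))
beta_sd   <- sqrt(beta_var)

# Population mean + sd; the support of the distribution ends at 1
mean_plus_sd <- beta_mean + beta_sd
print(c(mean = beta_mean, sd = beta_sd, mean_plus_sd = mean_plus_sd))

# Optional: the same comparison on simulated draws
# (seed and sample size are arbitrary assumptions)
set.seed(1)
draws <- rbeta(1e5, shape1, shape2)
print(c(sample_mean_plus_sd = mean(draws) + sd(draws), sample_max = max(draws)))
```

No simulated draw can exceed 1, so the second comparison shows the same thing at the sample level.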

That is, it's easily possible to have a value of mean+sd large enough that it cannot be observed as a data value.

--

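For any situation where the mode is at the maximum, the Pearson mode skewness, $(\text{mean}-\text{mode})/\text{sd}$, need only be $>\,-1$ for mean+sd to exceed the maximum, an easily satisfied condition. (With mode = maximum, mean + sd > maximum is the same statement as $(\text{mean}-\text{mode})/\text{sd} > -1$.)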
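To see the condition at work on the small sample from the top of this answer, the sketch below computes the Pearson mode skewness, (mean - mode)/sd, and compares mean + sd with the maximum. Taking the mode as the most frequent value via `table()` is an assumption that suits this discrete toy sample; the variable names are illustrative.

```r
x <- c(1, 5, 5, 5)

# Mode taken as the most frequent value; here it coincides with max(x)
counts <- table(x)
x_mode <- as.numeric(names(counts)[which.max(counts)])

# Pearson mode skewness: (mean - mode) / sd
pearson_mode_skew <- (mean(x) - x_mode) / sd(x)

# mean + sd > max is algebraically the same as (mean - max) / sd > -1
exceeds_max <- mean(x) + sd(x) > max(x)

print(pearson_mode_skew)   # (4 - 5) / 2 = -0.5
print(exceeds_max)
```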

--

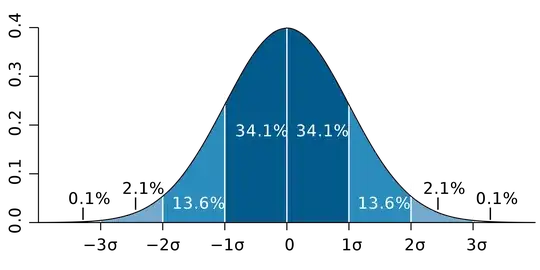

A closely related issue is often seen with confidence intervals for a binomial proportion, where a commonly used interval, the normal approximation interval, can produce limits outside $[0,1]$.

For example, consider a 95.4% normal approximation interval for the population proportion of successes in Bernoulli trials (outcomes are 1 or 0 representing success and failure events respectively), where 3 of 4 observations are "$1$" and one observation is "$0$".

Then the upper limit for the interval is $\hat p + 2 \times \sqrt{\frac{1}{4}\hat p \left(1 - \hat p \right)} = \hat p + \sqrt{\hat p (1 - \hat p )} = 0.75 + 0.433=1.183$

This is just the sample mean + the usual estimate of the sd for the binomial ... and produces an impossible value.

The usual sample sd for 0,1,1,1 is 0.5 rather than 0.433 (they differ because the binomial ML estimate of the standard deviation, $\sqrt{\hat p(1-\hat p)}$, corresponds to dividing by $n$ rather than $n-1$ when computing the variance). But it makes no difference - in either case, mean + sd exceeds the largest possible proportion.
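The numbers in this example can be reproduced with a few lines of R. This is only a sketch of the arithmetic above, with illustrative variable names; the multiplier 2 is the one used for the 95.4% interval in the text.

```r
y <- c(0, 1, 1, 1)          # three successes, one failure
n <- length(y)
p_hat <- mean(y)            # 0.75

# ML-style sd estimate (variance divided by n) vs. the usual sample sd (n - 1)
sd_ml     <- sqrt(p_hat * (1 - p_hat))
sd_sample <- sd(y)

# Upper limit of the normal approximation interval with multiplier 2
upper_limit <- p_hat + 2 * sqrt(p_hat * (1 - p_hat) / n)

print(round(c(sd_ml = sd_ml, sd_sample = sd_sample, upper_limit = upper_limit), 3))

# Either sd estimate pushes mean + sd past the largest possible proportion, 1
print(c(p_hat + sd_ml, p_hat + sd_sample) > 1)
```

The two sd estimates differ only by the factor $\sqrt{n/(n-1)}$, which is why neither choice changes the conclusion.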

This fact - that a normal approximation interval for the binomial can produce "impossible values" - is often noted in books and papers. You're not dealing with binomial data, but the problem - that mean + some number of standard deviations is not a possible value - is analogous.

--

In your case, the unusual "0" value in your sample inflates the sd by more than it pulls the mean down, which is why mean+sd is so high.

--

(The question would instead be - by what reasoning would it be impossible? -- because without knowing why anyone would think there's a problem at all, what do we address?)

Logically of course, one demonstrates it's possible by giving an example where it happens. You've done that already. In the absence of a stated reason why it should be otherwise, what are you to do?

If an example isn't sufficient, what proof would be acceptable?

There's really no point simply pointing to a statement in a book, since any book may make a statement in error - I see them all the time. One must rely on direct demonstration that it's possible, either a proof in algebra (one could be constructed from the beta example above for example*) or by numerical example (which you have already given), which anyone can examine the truth of for themselves.

* whuber gives the precise conditions for the beta case in comments.