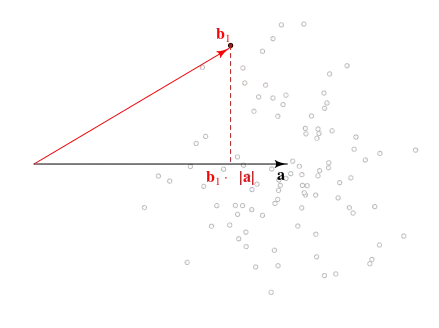

Suppose $\vec{a}$ is an unknown $p$-vector, and one observes $\vec{b} \sim \mathcal{N}\left(\vec{a}, I\right)$. I would like to compute confidence intervals on the random quantity $\vec{b}^{\top} \vec{a}$, based only on the observed $\vec{b}$ and known parameter $p$. That is, for a given $\alpha \in (0,1)$, find $c(\vec{b}, p, \alpha)$ such that $Pr\left(\vec{b}^{\top}\vec{a} \le c(\vec{b},p,\alpha)\right) = \alpha$.

This is a weird question because the randomness that contributes to the confidence intervals also affects $\vec{b}$. The straightforward approach is to claim that, conditional on $\vec{b}$, $\vec{a} \sim\mathcal{N}\left(\vec{b}, I\right)$, and thus $\vec{b}^{\top}\vec{a} \sim\mathcal{N}\left(\vec{b}^{\top}\vec{b}, \vec{b}^{\top}\vec{b}\right)$. However, I do not think this gives a proper CI, because $\vec{b}^{\top}\vec{b}$ is biased for $\vec{a}^{\top}\vec{a}$, which is the expected value of $\vec{b}^{\top}\vec{a}$. ($\vec{b}^{\top}\vec{b}$ is a non-central chi-square RV with $p$ degrees of freedom and non-centrality parameter $\vec{a}^{\top}\vec{a}$; its expected value is $\vec{a}^{\top}\vec{a} + p$, not $\vec{a}^{\top}\vec{a}$.)
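
A quick Monte Carlo sketch of that naive approach, using only the Python standard library: fix $\vec{a}$, redraw $\vec{b} \sim \mathcal{N}(\vec{a}, I)$ many times, and record how often $\vec{b}^{\top}\vec{a}$ falls below the naive $\alpha$ quantile $\vec{b}^{\top}\vec{b} + z_\alpha\sqrt{\vec{b}^{\top}\vec{b}}$. The helper names `naive_bound` and `naive_coverage` are hypothetical, and the particular $\vec{a}$ used below is an arbitrary choice for illustration.

```python
import math
import random
from statistics import NormalDist


def naive_bound(b, alpha):
    """Naive alpha quantile of b'a, treating a | b ~ N(b, I),
    so that b'a | b ~ N(b'b, b'b)."""
    btb = sum(x * x for x in b)
    return btb + NormalDist().inv_cdf(alpha) * math.sqrt(btb)


def naive_coverage(a, alpha, n_reps=20_000, seed=0):
    """Fix a, draw b ~ N(a, I) n_reps times, and return the fraction of
    draws with b'a <= naive_bound(b, alpha). A proper bound gives ~alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_reps):
        b = [ai + rng.gauss(0.0, 1.0) for ai in a]   # one draw of b
        bta = sum(bi * ai for bi, ai in zip(b, a))   # b'a for this draw
        if bta <= naive_bound(b, alpha):
            hits += 1
    return hits / n_reps
```

For example, `naive_coverage([0.5] * 10, 0.05)` (so $p = 10$ and $\vec{a}^{\top}\vec{a} = 2.5$) returns a fraction far above the nominal $0.05$, which is consistent with the concern that centering at $\vec{b}^{\top}\vec{b}$ overshoots by roughly $p$.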

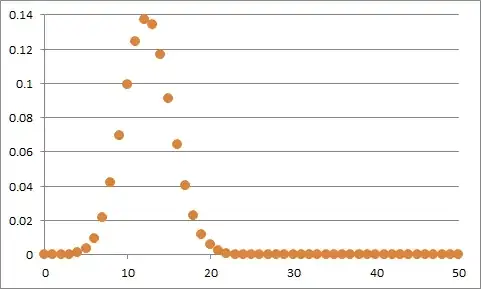

Note: Unconditionally, $\vec{b}^{\top}\vec{a} \sim\mathcal{N}\left(\vec{a}^{\top}\vec{a},\vec{a}^{\top}\vec{a}\right)$, and $\vec{b}^{\top}\vec{b} \sim \chi^2\left(p, \vec{a}^{\top}\vec{a}\right)$, a non-central chi-square random variable with $p$ degrees of freedom and non-centrality parameter $\vec{a}^{\top}\vec{a}$. Since $E\left[\vec{b}^{\top}\vec{b}\right] = p + \vec{a}^{\top}\vec{a}$, the quantity $\vec{b}^{\top}\vec{b} - p$ is an unbiased estimate of the mean of $\vec{a}^{\top}\vec{b}$, and of its variance. The latter is somewhat useless, since it can be negative!
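
A Monte Carlo sketch (Python standard library only) checking these unconditional facts for one arbitrary choice of $\vec{a}$: the mean and variance of $\vec{b}^{\top}\vec{a}$, the mean of $\vec{b}^{\top}\vec{b} - p$, and the smallest value that estimate takes over the draws. The helper `simulate_moments` is hypothetical.

```python
import random
import statistics


def simulate_moments(a, n_reps=50_000, seed=1):
    """For b ~ N(a, I), estimate E[b'a], Var[b'a] and E[b'b - p] by simulation,
    and record the minimum of b'b - p to show the estimate can be negative."""
    rng = random.Random(seed)
    p = len(a)
    bta_draws, est_draws = [], []
    for _ in range(n_reps):
        b = [ai + rng.gauss(0.0, 1.0) for ai in a]
        bta_draws.append(sum(bi * ai for bi, ai in zip(b, a)))  # b'a
        est_draws.append(sum(bi * bi for bi in b) - p)          # b'b - p
    return {
        "a'a": sum(ai * ai for ai in a),
        "mean b'a": statistics.fmean(bta_draws),
        "var b'a": statistics.variance(bta_draws),
        "mean b'b - p": statistics.fmean(est_draws),
        "min b'b - p": min(est_draws),
    }
```

With `a = [0.3] * 5`, so $\vec{a}^{\top}\vec{a} = 0.45$, the first four entries should all land near $0.45$, while the minimum of $\vec{b}^{\top}\vec{b} - p$ over the draws is negative.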

I am looking for any and all sensible ways to approach this problem. These can include:

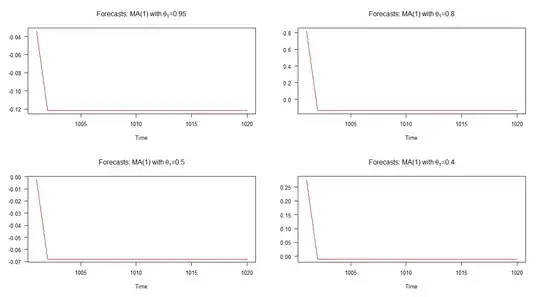

- A proper confidence bound, that is, a function $c$ of the observed $\vec{b}$ and known $p$ such that $Pr\left(\vec{b}^{\top}\vec{a} \le c(\vec{b},p,\alpha)\right) = \alpha$ for all $\alpha$ and all $\vec{a}$ such that $\vec{a}^{\top}\vec{a} > 0$. *Edit:* What I mean by this is that if you fixed $\vec{a}$ and then drew a random $\vec{b}$, the probability that $\vec{b}^{\top}\vec{a} - c\left(\vec{b},p,\alpha\right) \le 0$ would be $\alpha$ under repeated draws of $\vec{b}$. For example, if you fixed $\vec{a}$ and then drew independent $\vec{b}_i$, the proportion of $i$ such that $\vec{b}_i^{\top}\vec{a} \le c(\vec{b}_i,p,\alpha)$ would approach $\alpha$ as the number of replications goes to $\infty$.

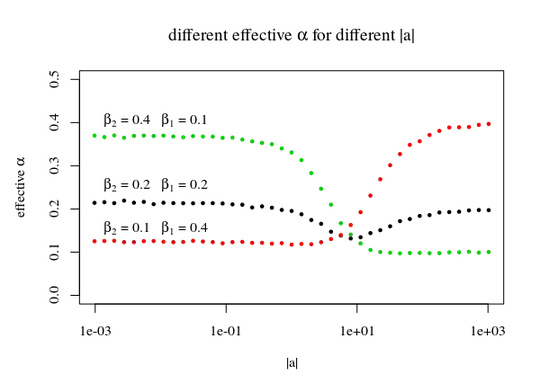

- A confidence bound 'in expectation': a function of the observed $\vec{b}$ and the known $p$ and $\alpha$ whose unconditional expected value is the $\alpha$ quantile of $\vec{b}^{\top}\vec{a}$ for all $\vec{a}$ with $\vec{a}^{\top}\vec{a} > 0$.

- Some kind of Bayesian solution where I can specify a sane prior on $\vec{a}^{\top}\vec{a}$ and then, given the observation $\vec{b}$, get a posterior on both $\vec{b}^{\top}\vec{a}$ and $\vec{a}^{\top}\vec{a}$.
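
To make the first two criteria concrete, here is a small Monte Carlo harness in Python (standard library only). It fixes $\vec{a}$, redraws $\vec{b}$, and reports the empirical coverage (the proper-bound criterion) and the mean of the candidate bound next to the true $\alpha$ quantile $\vec{a}^{\top}\vec{a} + z_\alpha\sqrt{\vec{a}^{\top}\vec{a}}$ (the 'in expectation' criterion). The interface `c(b, p, alpha)` and the name `check_bound` are hypothetical; the harness only tests a candidate for one $\vec{a}$ at a time and does not construct a bound.

```python
import math
import random
import statistics
from typing import Callable, Sequence

# Hypothetical interface for a candidate bound: c(b, p, alpha) -> float.
Bound = Callable[[Sequence[float], int, float], float]


def check_bound(c: Bound, a: Sequence[float], alpha: float,
                n_reps: int = 20_000, seed: int = 2) -> dict:
    """Fix a, draw b ~ N(a, I) n_reps times, and summarize candidate bound c.

    coverage: fraction of draws with b'a <= c(b, p, alpha); a proper bound
        should give about alpha for every a with a'a > 0.
    mean_c: Monte Carlo mean of c(b, p, alpha); a bound 'in expectation'
        should match true_quantile.
    true_quantile: alpha quantile of b'a, which is N(a'a, a'a) unconditionally.
    """
    rng = random.Random(seed)
    p = len(a)
    ata = sum(x * x for x in a)
    hits = 0
    c_vals = []
    for _ in range(n_reps):
        b = [ai + rng.gauss(0.0, 1.0) for ai in a]
        bta = sum(bi * ai for bi, ai in zip(b, a))
        cv = c(b, p, alpha)
        c_vals.append(cv)
        hits += bta <= cv  # True counts as 1
    true_q = ata + statistics.NormalDist().inv_cdf(alpha) * math.sqrt(ata)
    return {"coverage": hits / n_reps,
            "mean_c": statistics.fmean(c_vals),
            "true_quantile": true_q}


if __name__ == "__main__":
    # Sanity check of the harness with an 'oracle' that knows a. It is not
    # a usable bound, since c may depend only on b, p and alpha.
    a = [0.4] * 6
    ata = sum(x * x for x in a)

    def oracle(b, p, alpha):
        return ata + statistics.NormalDist().inv_cdf(alpha) * math.sqrt(ata)

    print(check_bound(oracle, a, 0.1))
```

The oracle in the demo should show coverage close to $\alpha$ and `mean_c` equal to `true_quantile`; a genuine candidate $c(\vec{b}, p, \alpha)$ can be plugged in the same way and tried over several values of $\vec{a}$.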

*Edit:* The original form of this question had the covariance of $\vec{b}$ as $\frac{1}{n}I$; however, I believe that without loss of generality one can assume $n=1$, so I have edited out all mention of $n$.
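
A short derivation supporting the w.l.o.g. claim, assuming $c$ is a proper bound for the $n=1$ problem for every $\vec{a}$ with $\vec{a}^{\top}\vec{a} > 0$: rescaling by $\sqrt{n}$ maps the general problem onto the unit-covariance one.

$$
\begin{aligned}
\tilde{b} &:= \sqrt{n}\,\vec{b}, \quad \tilde{a} := \sqrt{n}\,\vec{a}, \quad \vec{b} \sim \mathcal{N}\left(\vec{a}, \tfrac{1}{n} I\right) \;\Longrightarrow\; \tilde{b} \sim \mathcal{N}\left(\tilde{a}, I\right),\\
\vec{b}^{\top}\vec{a} &= \tfrac{1}{n}\,\tilde{b}^{\top}\tilde{a},\\
Pr\left(\vec{b}^{\top}\vec{a} \le \tfrac{1}{n}\,c\left(\sqrt{n}\,\vec{b}, p, \alpha\right)\right) &= Pr\left(\tilde{b}^{\top}\tilde{a} \le c\left(\tilde{b}, p, \alpha\right)\right) = \alpha.
\end{aligned}
$$

So, under that assumption, $c_n(\vec{b}, p, \alpha) = \frac{1}{n}\,c\left(\sqrt{n}\,\vec{b}, p, \alpha\right)$ is a proper bound for general $n$.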