Solution

The transformation

$$(p, x) \to (p, (x-L)/(U-L))$$

converts these points to points on the CDF of a Beta$(\alpha,\beta)$ distribution. Assuming this has been done (so we don't need to change the notation), the problem is to find $\alpha$ and $\beta$ for which

$$F(\alpha, \beta; x_i) = p_i, \quad i = 1, 2,$$

where the Beta CDF equals

$$F(\alpha,\beta; x) = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)}\int_0^x t^{\alpha-1}(1-t)^{\beta-1} dt.$$

This is a nice function of $\alpha \gt 0$ and $\beta \gt 0$: it is differentiable in those arguments and not too expensive to evaluate. However, there is no general closed-form solution, so numerical methods must be used.
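As a quick illustration of the rescaling and of the CDF formula, the sketch below evaluates the integral numerically and compares it with R's built-in `pbeta`. The helper names `rescale` and `beta_cdf_integral`, the support $[0, 10]$, and the parameter values are assumptions made for this example only; they do not come from the original problem.

```r
# Hypothetical helper (illustration only): map x from [L, U] onto [0, 1].
rescale <- function(x, L, U) (x - L) / (U - L)

# Beta CDF evaluated directly from the integral formula.
beta_cdf_integral <- function(x, alpha, beta) {
  const <- gamma(alpha + beta) / (gamma(alpha) * gamma(beta))
  integrand <- function(t) t^(alpha - 1) * (1 - t)^(beta - 1)
  const * integrate(integrand, lower = 0, upper = x)$value
}

# Illustrative values only: the point x = 4 on an assumed support [0, 10].
x01 <- rescale(4, L = 0, U = 10)
by_formula <- beta_cdf_integral(x01, alpha = 2, beta = 3)
built_in   <- pbeta(x01, shape1 = 2, shape2 = 3)
print(c(formula = by_formula, pbeta = built_in))
```

The two values should agree to within the tolerance of `integrate`. In practice `pbeta` is the better choice, since it is faster and remains accurate in the tails.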

Implementation Tips

Beware: when both $x_i$ are in the same tail of the distribution, or when either is close to $0$ or $1$, finding a reliable solution can be problematic. Techniques that help in these circumstances are:

1. Reparameterize the distribution to avoid enforcing the "hard" constraints $\alpha\gt 0, \beta \gt 0$. One reparameterization that works is to use the logarithms of these parameters.

2. Optimize a measure of the relative error. A good way to do this for a CDF is to use the squared difference of the logits of the values: that is, replace the $p_i$ by $\text{logit}(p_i) = \log(p_i/(1-p_i))$ and fit $\text{logit}(F(\alpha,\beta;x_i))$ to these values by minimizing the sum of squared differences.

3. Either begin with a very good estimate of the parameters or--as experimentation shows--overestimate them. (This may be less necessary when following guideline #2.)
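To see why the logit scale measures relative rather than absolute error, consider the small sketch below. The probabilities are made-up illustrative values, not taken from the examples in this answer: a fitted tail probability that is off by five orders of magnitude contributes almost nothing to a raw sum of squares, but a great deal on the logit scale.

```r
# Logit as defined in the text.
logit <- function(p) log(p / (1 - p))

# Made-up values: the fit misses the target by a factor of 1e5.
p.target <- 1e-10
p.fit    <- 1e-5

raw.error   <- (p.fit - p.target)^2                 # tiny: the optimizer barely notices
logit.error <- (logit(p.fit) - logit(p.target))^2   # large: the miss is penalized

print(c(raw = raw.error, logit = logit.error))
```

An optimizer working on the raw scale could therefore stop at a poor fit in the tails, while the logit-scale objective keeps pushing toward the target.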

Examples

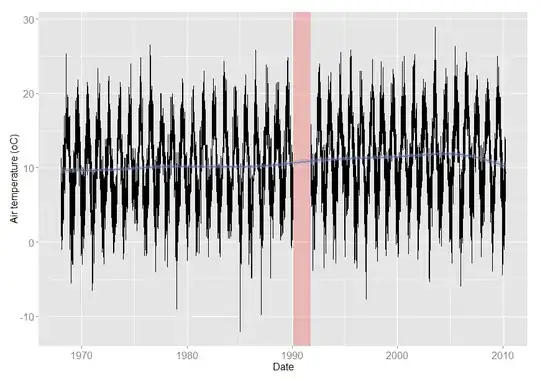

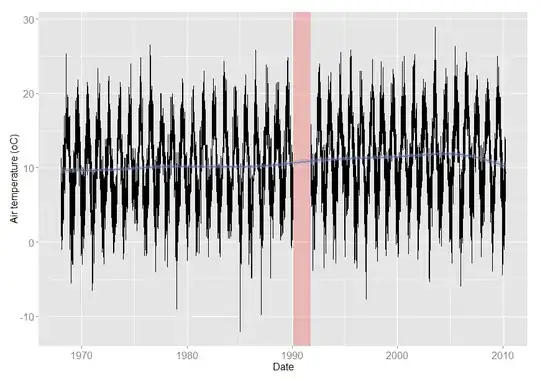

Illustrating these methods is the following sample code using the nlm minimizer in R. It produces perfect fits even in the first case, which is numerically challenging because the $x_i$ are small and far out into the same tail. The output includes plots of the true CDFs (in black) on which are overlaid the fits (in red). The points on the plots show the two $(x_i, p_i)$ pairs.

This solution can fail in more extreme circumstances: obtaining a close estimate of the correct parameters can help assure success.

    #
    # Logistic transformation of the Beta CDF.
    #
    f.beta <- function(alpha, beta, x, lower=0, upper=1) {
      p <- pbeta((x-lower)/(upper-lower), alpha, beta)
      log(p/(1-p))
    }
    #
    # Sums of squares.
    #
    delta <- function(fit, actual) sum((fit-actual)^2)
    #
    # The objective function handles the transformed parameters `theta` and
    # uses `f.beta` and `delta` to fit the values and measure their discrepancies.
    #
    objective <- function(theta, x, prob, ...) {
      ab <- exp(theta) # Parameters are the *logs* of alpha and beta
      fit <- f.beta(ab[1], ab[2], x, ...)
      return (delta(fit, prob))
    }
    #
    # Solve two problems.
    #
    par(mfrow=c(1,2))
    alpha <- 15; beta <- 22 # The true parameters
    for (x in list(c(1e-3, 2e-3), c(1/3, 2/3))) {
      x.p <- f.beta(alpha, beta, x)        # The logits of the correct p_i
      start <- log(c(1e1, 1e1))            # A good guess is useful here
      sol <- nlm(objective, start, x=x, prob=x.p, lower=0, upper=1,
                 typsize=c(1,1), fscale=1e-12, gradtol=1e-12)
      parms <- exp(sol$estimate)           # Estimates of alpha and beta
      #
      # Display the actual and estimated values.
      #
      print(rbind(Actual=c(alpha=alpha, beta=beta), Fit=parms))
      #
      # Plot the true and estimated CDFs.
      #
      curve(pbeta(x, alpha, beta), 0, 1, n=1001, lwd=2)
      curve(pbeta(x, parms[1], parms[2]), n=1001, add=TRUE, col="Red")
      points(x, pbeta(x, alpha, beta))
    }
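One possible refinement for the more extreme cases, offered as a sketch and not as part of the solution above, is to compute the logit from log-probabilities of the two tails, so that a CDF value that underflows to zero does not turn into an infinite logit. The function name `f.beta.stable` is hypothetical; it relies only on the `log.p` and `lower.tail` arguments of `pbeta`.

```r
# Logit of the Beta CDF computed from the log-probabilities of both tails.
f.beta.stable <- function(alpha, beta, x, lower = 0, upper = 1) {
  z <- (x - lower) / (upper - lower)
  log.p <- pbeta(z, alpha, beta, log.p = TRUE)                      # log F
  log.q <- pbeta(z, alpha, beta, lower.tail = FALSE, log.p = TRUE)  # log(1 - F)
  log.p - log.q
}

# The direct computation, for comparison.
f.beta.naive <- function(alpha, beta, x) {
  p <- pbeta(x, alpha, beta)
  log(p / (1 - p))
}

# At moderate x the two versions agree.
x.moderate <- c(1/3, 2/3)
print(cbind(naive  = f.beta.naive(15, 22, x.moderate),
            stable = f.beta.stable(15, 22, x.moderate)))

# Far into the lower tail the CDF underflows, so only the log version stays finite.
x.extreme <- 1e-25
print(c(naive  = f.beta.naive(15, 22, x.extreme),
        stable = f.beta.stable(15, 22, x.extreme)))
```

Swapping this in for `f.beta` inside `objective` would be a drop-in change, although whether it helps `nlm` converge in a particular problem would need to be checked case by case.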