I have always been confused about how to properly interpret PCA results.

My data is a large table with more than 5 million rows and 12 columns (the first few rows are all 0). Each column corresponds to one individual, and each individual has more than 5 million observations (numbers).

    > head(data)
       YC1CO YC1LI YC4CO YC4LI YC5CO YC5LI YM1CO YM1LI YM3CO YM3LI
    f1     0     0     0     0     0     0     0     0     0     0
    f2     0     0     0     0     0     0     0     0     0     0
    f3     0     0     0     0     0     0     0     0     0     0
    f4     0     0     0     0     0     0     0     0     0     0
    f5     0     0     0     0     0     0     0     0     0     0
    f6     0     0     0     0     0     0     0     0     0     0

Then I ran PCA using prcomp in R:

    pca <- prcomp(data, scale. = TRUE, center = TRUE)

The outputs are:

    > pca$rotation
                PC1        PC2          PC3         PC4         PC5         PC6
    YC1CO 0.2888377 -0.1511474  0.354970405 -0.14922899  0.29263063 -0.42756650
    YC1LI 0.2887845  0.2891378  0.006931811 -0.11867753  0.10465221  0.32239652
    YC4CO 0.2888937 -0.1073097  0.376083559 -0.16145206  0.28844683 -0.19929480
    YC4LI 0.2888576  0.2899107  0.032538093 -0.10721970  0.11537841  0.19513249
    YC5CO 0.2885639 -0.2200563  0.393267987 -0.13833481 -0.80160742  0.20303762
    YC5LI 0.2887792  0.2926729  0.010423117 -0.11994739  0.12149153  0.31232174
    YM1CO 0.2889243 -0.2483682  0.100978896  0.12858598  0.04456687 -0.19313330
    YM1LI 0.2891586  0.2571790 -0.112257791  0.05154060 -0.01859997 -0.02233253
    YM3CO 0.2872954 -0.5242998 -0.631712144 -0.47494155  0.05150495  0.08749259
    YM3LI 0.2891991  0.2441790 -0.131464167  0.06272038 -0.03712138 -0.03534204
    YM5CO 0.2881663 -0.3741525 -0.033566125  0.75538412  0.17427960  0.33801997
    YM5LI 0.2886363  0.2481463 -0.369342316  0.27066649 -0.33590255 -0.57941556
                   PC7           PC8         PC9        PC10        PC11
    YC1CO  0.658953472 -0.0032299313  0.19565297  0.02889161  0.06028938
    YC1LI  0.075935158  0.7256745487  0.10496762 -0.36161999 -0.17252148
    YC4CO -0.733333390  0.0315817680  0.26028561  0.06764965  0.05879949
    YC4LI  0.050613636 -0.0400665068 -0.25306478  0.74445447 -0.38210226
    YC5CO  0.040387219 -0.0106509021  0.07336791  0.04776528  0.03597253
    YC5LI  0.040072049 -0.6856737279  0.16012889 -0.41945134 -0.17527656
    YM1CO -0.085165645 -0.0130678334 -0.78767928 -0.33407254 -0.22202704
    YM1LI -0.004723194 -0.0087215382 -0.16061437  0.06378812  0.58204927
    YM3CO -0.003226445 -0.0019793258  0.06649693  0.05308833  0.01803736
    YM3LI -0.006287346 -0.0087025271 -0.14028837  0.03396984  0.52810233
    YM5CO  0.048983778  0.0009123461  0.21714944  0.10450049  0.01040703
    YM5LI -0.081931860  0.0139306658  0.26532068 -0.02864126 -0.34310790
                  PC12
    YC1CO -0.005335094
    YC1LI  0.007632148
    YC4CO -0.006459107
    YC4LI -0.012083181
    YC5CO  0.002861339
    YC5LI  0.009554891
    YM1CO  0.007425773
    YM1LI  0.682200634
    YM3CO  0.007334849
    YM3LI -0.730105933
    YM5CO  0.004956218
    YM5LI  0.032252049

    > summary(pca)
    Importance of components:
                              PC1     PC2     PC3     PC4     PC5     PC6     PC7
    Standard deviation     3.4418 0.20675 0.13369 0.11872 0.11105 0.10690 0.10325
    Proportion of Variance 0.9872 0.00356 0.00149 0.00117 0.00103 0.00095 0.00089
    Cumulative Proportion  0.9872 0.99072 0.99221 0.99338 0.99441 0.99536 0.99625
                               PC8     PC9    PC10    PC11    PC12
    Standard deviation     0.10054 0.09888 0.09789 0.09215 0.08375
    Proportion of Variance 0.00084 0.00081 0.00080 0.00071 0.00058
    Cumulative Proportion  0.99709 0.99791 0.99871 0.99942 1.00000

The eigenvalues are:

    > pca$sdev^2
     [1] 11.845894818  0.042746822  0.017872795  0.014093498  0.012331364
     [6]  0.011428471  0.010660422  0.010107398  0.009777983  0.009582267
    [11]  0.008490902  0.007013259
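As a sanity check, the "Proportion of Variance" row reported by summary(pca) can be recomputed from these eigenvalues: each eigenvalue is divided by their sum, and because the columns were scaled to unit variance, that sum should equal the number of columns (12). The sketch below uses the values copied from the printed output; the names `eig`, `total`, `prop_var` and `cum_var` are only illustrative.

```r
# Eigenvalues copied from the pca$sdev^2 output above
eig <- c(11.845894818, 0.042746822, 0.017872795, 0.014093498,
         0.012331364, 0.011428471, 0.010660422, 0.010107398,
         0.009777983, 0.009582267, 0.008490902, 0.007013259)

# With scaled columns the eigenvalues sum to the number of columns
total <- sum(eig)

# Proportion of variance per component and its running total
prop_var <- eig / total
cum_var  <- cumsum(prop_var)

print(round(total, 4))
print(round(prop_var, 5))
print(round(cum_var, 5))
```

The first two proportions come out at about 0.9872 and 0.00356, matching the summary(pca) table.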

I took the PC1 and PC2 values from pca$rotation above and plotted them.
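A plot of this kind can be produced roughly as follows. This is a sketch rather than the exact call used: it rebuilds only the PC1 and PC2 columns from the printed pca$rotation so that it runs on its own, and the data frame name `loadings`, the axis labels and the label placement are illustrative choices.

```r
# PC1 and PC2 loadings copied from the pca$rotation output above
loadings <- data.frame(
  PC1 = c(0.2888377, 0.2887845, 0.2888937, 0.2888576, 0.2885639, 0.2887792,
          0.2889243, 0.2891586, 0.2872954, 0.2891991, 0.2881663, 0.2886363),
  PC2 = c(-0.1511474, 0.2891378, -0.1073097, 0.2899107, -0.2200563, 0.2926729,
          -0.2483682, 0.2571790, -0.5242998, 0.2441790, -0.3741525, 0.2481463),
  row.names = c("YC1CO", "YC1LI", "YC4CO", "YC4LI", "YC5CO", "YC5LI",
                "YM1CO", "YM1LI", "YM3CO", "YM3LI", "YM5CO", "YM5LI")
)

# Scatter plot of the loadings, one labelled point per individual
plot(loadings$PC1, loadings$PC2,
     xlab = "PC1 loading", ylab = "PC2 loading", pch = 19)
text(loadings$PC1, loadings$PC2,
     labels = rownames(loadings), pos = 3, cex = 0.7)

# Spread of the loadings along each axis
print(range(loadings$PC1))
print(range(loadings$PC2))
```

Printing the two ranges makes the spread along each axis explicit, which is what the axis scales in the plot reflect.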

And the biplot:

    > biplot(pca)

I have a few specific questions, and I would really appreciate any comments that help me understand the plot.

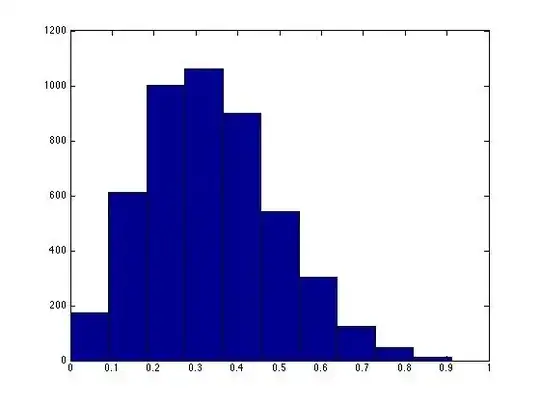

Based on the proportion of variance, I know PC1 explains almost all the variance. My first question: do the x-axis and y-axis values matter at all here? The PC1 values are much closer together than the PC2 values, even though PC1 is the dominant PC.

Can I say that the differences among the points YC1CO, YC4CO, YC5CO and YM1CO, YM3CO, YM5CO are what drive PC1?

My initial idea was to show the relationships among the 12 individuals (YC1CO, etc.) and see whether they cluster or separate from each other, and whether there are any meaningful patterns. That's why I want to plot PC1 against PC2 to show the relative locations of the 12 individuals. Now I'm confused about how to plot it.

Thanks!