After centering, the two measurements x and −x can be assumed to be independent observations from a Cauchy distribution with probability density function:

$f(x;\theta) = \frac{1}{\pi\left(1+(x-\theta)^2\right)}, \quad -\infty < x < \infty$

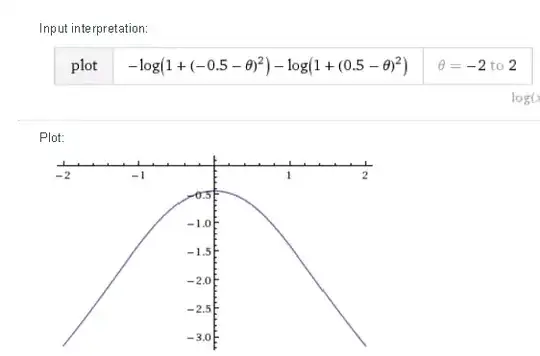

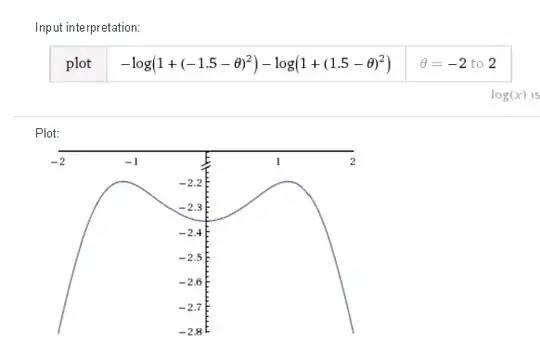

Show that if $x^2 \le 1$ the MLE of $\theta$ is $0$, but if $x^2 > 1$ there are two MLEs of $\theta$, equal to $\pm\sqrt{x^2-1}$.
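As a rough numerical sanity check of the claim (not a proof), here is a minimal Python sketch. It assumes the log-likelihood for the two observations $x$ and $-x$ is $-\sum_i \log\left(\pi(1+(x_i-\theta)^2)\right)$. The function names `log_likelihood`, `claimed_mles`, and `grid_mle`, and the grid range $[-5, 5]$, are my own choices for illustration.

```python
import math

def log_likelihood(theta, x):
    """Cauchy log-likelihood for the two observations x and -x."""
    return sum(-math.log(math.pi * (1.0 + (obs - theta) ** 2)) for obs in (x, -x))

def claimed_mles(x):
    """The MLE(s) stated in the exercise: 0 if x^2 <= 1, else +/- sqrt(x^2 - 1)."""
    if x * x <= 1.0:
        return [0.0]
    root = math.sqrt(x * x - 1.0)
    return [-root, root]

def grid_mle(x, lo=-5.0, hi=5.0, steps=10001):
    """Crude grid search: return one grid point where the log-likelihood is largest."""
    grid = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda theta: log_likelihood(theta, x))

if __name__ == "__main__":
    for x in (0.5, 2.0):
        print("x =", x, "grid maximiser:", grid_mle(x), "claimed:", claimed_mles(x))
```

When $x^2 > 1$ the grid search returns only one of the two symmetric maximisers, so compare its absolute value with $\sqrt{x^2-1}$.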

I think that to find the MLE I have to differentiate the log-likelihood with respect to $\theta$ and set the derivative to zero:

$\frac{dl}{d\theta} = \sum_i \frac{2(x_i-\theta)}{1+(x_i-\theta)^2} = \frac{2(-x-\theta)}{1+(-x-\theta)^2} + \frac{2(x-\theta)}{1+(x-\theta)^2} = 0$

So,

$\frac{2(x-\theta)}{1+(x-\theta)^2} = \frac{2(x+\theta)}{1+(x+\theta)^2}$

which I then simplified down to

$5x^2 = 3\theta^2+2\theta x+3$

Now I've hit a wall. I've probably gone wrong at some point, but either way I'm not sure how to answer the question. Can anyone help?