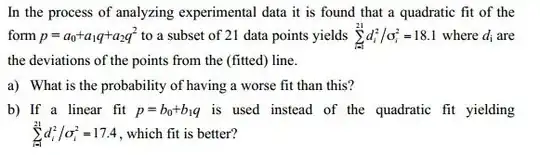

As mentioned in comments, I believe the intent with (a) is that you do a chi-square test.

Let $D_i$ be the random variable and $d_i$ its observed value.

The derivation of the chi-square test relies on the assumption that $D_i \sim N(0, \sigma_i^2)$, that is, that $D_i/\sigma_i$ is standard normal. A sum of squares of $n$ independent standard normals would be $\chi^2_n$, but you lose a degree of freedom for each estimated parameter, so with $p$ fitted parameters you compare $\sum_i d_i^2/\sigma_i^2$ against a $\chi^2_{n-p}$.
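As a minimal sketch of that statistic: the function below sums the squared scaled residuals and looks up the upper-tail probability with `scipy.stats.chi2.sf`. The function name, the `n_params` argument, and the numbers are all hypothetical, chosen only for illustration; I'm assuming the $d_i$ are residuals from a fit with that many estimated parameters.

```python
from scipy.stats import chi2


def chi_square_gof(d, sigma, n_params):
    """Return (statistic, dof, p_value) for residuals d with known sigma.

    Assumes each d[i] / sigma[i] is approximately standard normal and that
    n_params parameters were estimated from the same data.
    """
    if len(d) != len(sigma):
        raise ValueError("d and sigma must have the same length")
    dof = len(d) - n_params
    if dof <= 0:
        raise ValueError("need more observations than fitted parameters")
    # Sum of squared scaled residuals.
    stat = sum((di / si) ** 2 for di, si in zip(d, sigma))
    # Upper-tail probability of a chi-square with dof degrees of freedom.
    p_value = chi2.sf(stat, dof)
    return stat, dof, p_value


# Hypothetical residuals and known standard deviations (illustration only).
d = [0.5, -1.0, 0.3, 0.8, -0.6]
sigma = [0.5, 1.0, 0.6, 0.8, 0.6]
stat, dof, p_value = chi_square_gof(d, sigma, n_params=2)
print(f"chi-square = {stat:.3f} on {dof} df, p = {p_value:.3f}")
```

A small p-value would suggest the residuals are larger than the stated $\sigma_i$ can explain; a p-value very close to 1 would suggest the $\sigma_i$ are overstated.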

For part (b), $F$ distributions arise when you take the ratio of two reduced chi-squares (each a chi-square divided by its degrees of freedom) that are both multiplied by some common but unknown $\sigma^2$. You'd use that if you don't know the standard deviations of the $D_i$, only their relative sizes (i.e. if $\sigma_i = c_i\sigma$ for known $c_i$). It is used because taking the ratio cancels out the unknown $\sigma^2$.
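To make the cancellation explicit, here is a sketch for two nested models with $p_1 < p_2$ fitted parameters and $n$ observations (the symbols $S_k$, $p_k$, $n$ and $d_{i,k}$ are my own notation, not taken from the question). Write the weighted sum of squares for model $k$ as

$$S_k = \sum_i \frac{d_{i,k}^2}{c_i^2},$$

where $d_{i,k}$ is the $i$-th residual under model $k$. If the smaller model is adequate, then $(S_1 - S_2)/\sigma^2 \sim \chi^2_{p_2-p_1}$ and $S_2/\sigma^2 \sim \chi^2_{n-p_2}$ independently, so

$$F = \frac{(S_1 - S_2)/(p_2 - p_1)}{S_2/(n - p_2)} \sim F_{p_2-p_1,\; n-p_2},$$

and the unknown $\sigma^2$ has dropped out of numerator and denominator alike.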

I think in your case the $\sigma_i$ are completely known. In that case I believe you work similarly to an $F$-test (as you said), except that you know the variance of the scaled residuals should be 1. So you take the difference in the sum of squares of scaled residuals ($\text{SSE}=\sum_i d_i^2/\sigma_i^2$) between the two models, $\text{SSE}_\text{linear}-\text{SSE}_\text{quadratic}$, which should have a $\chi^2_1$ distribution if the linear model is adequate.
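A rough sketch of that comparison, assuming the data are pairs $(x_i, y_i)$ with known $\sigma_i$ and that both models are fitted by weighted least squares. The helper names and the synthetic data are hypothetical; `np.polyfit` with `w = 1/sigma` minimises exactly the scaled sum of squares used here.

```python
import numpy as np
from scipy.stats import chi2


def scaled_sse(x, y, sigma, degree):
    """Weighted polynomial fit; return sum of squared scaled residuals."""
    # w = 1/sigma makes polyfit minimise sum(((y - fit) / sigma) ** 2).
    coeffs = np.polyfit(x, y, degree, w=1.0 / sigma)
    resid = y - np.polyval(coeffs, x)
    return float(np.sum((resid / sigma) ** 2))


def linear_vs_quadratic(x, y, sigma):
    """Return (sse_linear, sse_quadratic, difference, p_value)."""
    sse_lin = scaled_sse(x, y, sigma, degree=1)
    sse_quad = scaled_sse(x, y, sigma, degree=2)
    delta = sse_lin - sse_quad
    # One extra parameter in the quadratic model, so compare to chi-square(1).
    p_value = float(chi2.sf(delta, df=1))
    return sse_lin, sse_quad, delta, p_value


# Synthetic data that really are linear, with known sigma (illustration only).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 20)
sigma = np.full_like(x, 0.5)
y = 1.0 + 2.0 * x + rng.normal(0.0, sigma)

sse_lin, sse_quad, delta, p_value = linear_vs_quadratic(x, y, sigma)
print(f"SSE_linear = {sse_lin:.2f}, SSE_quadratic = {sse_quad:.2f}")
print(f"difference = {delta:.2f}, p = {p_value:.3f}")
```

A small p-value would favour keeping the quadratic term. Each SSE can also be checked on its own against a $\chi^2$ with $n-2$ or $n-3$ degrees of freedom.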

(You could still do an $F$ test, however, and if you weren't confident that $d_i/\sigma_i$ had a variance of 1, you probably should. But then the answer for (a) may have a problem.)