Consider the log likelihood of a mixture of Gaussians:

$$l(S_n; \theta) = \sum^n_{t=1}\log f(x^{(t)}|\theta) = \sum^n_{t=1}\log\left\{\sum^k_{i=1}p_i f(x^{(t)}|\mu^{(i)}, \sigma^2_i)\right\}$$

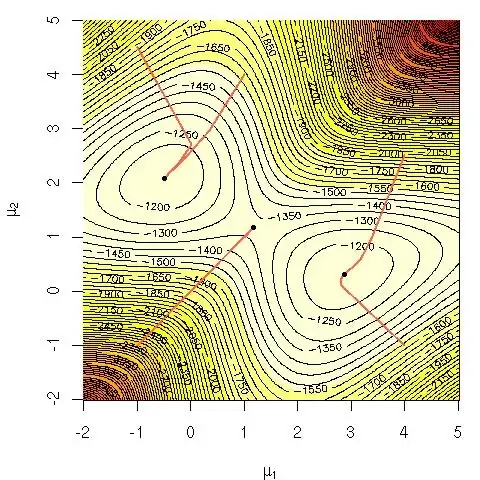

I was wondering why it was computationally hard to maximize that equation directly? I was looking for either a clear solid intuition on why it should be obvious that its hard or maybe a more rigorous explanation of why its hard. Is this problem NP-complete or do we just not know how to solve it yet? Is this the reason we resort to use the EM (expectation-maximization) algorithm?

Notation:

$S_n$ = training data.

$x^{(t)}$ = data point.

$\theta$ = the set of parameters specifying the Gaussian, theirs means, standard deviations and the probability of generating a point from each cluster/class/Gaussian.

$p_i$ = the probability of generating a point from cluster/class/Gaussian i.