I have a time series to which I want to fit a piecewise regression. The problem arises when I try to fit equations of different degrees in different segments of the series. Could you point me to references or worked examples that describe how to do this?

-

What is the 'problem' you refer to? How have you implemented it? I'm curious about why you require citations; the problem appears to be reasonably straightforward, as long as you specify the behaviour at the segment-boundaries. (Is continuity required? If so is there only continuity? Or if there's a higher-than-linear polynomial either side of the join does continuity extend to some order of derivatives?) – Glen_b Apr 13 '14 at 22:32

-

@Glen_b's answer is starting to look like it will guide you towards [splines](http://en.wikipedia.org/wiki/Spline_(mathematics)), so I thought I'd give you a head start :) – bdeonovic Apr 14 '14 at 01:18

-

@Benjamin I might head that way, if the OP's responses point that direction; at the moment I can't tell. – Glen_b Apr 14 '14 at 01:39

-

@Glen_b continuity is required. Also, I can fit only linear piecewise regressions to the segments; I am unable to fit nonlinear (polynomial) piecewise regressions in R. Please help. – user43722 Apr 14 '14 at 09:04

-

I found your last comment very confusing. What's the simplest thing in what you want that you can't do? What is it *exactly* that you want to achieve? – Glen_b Apr 14 '14 at 09:50

-

In particular, it's not clear what you mean by 'non-linear piecewise regressions' if you can only fit linear terms. – Glen_b Apr 14 '14 at 09:59

-

@Glen_b let me clear up the confusion with an example. Suppose I want to divide my time series into three segments, Segment 1, Segment 2 and Segment 3, and then fit trend equations of degree 1, degree 2 and degree 3 to them, respectively. Currently I am able to fit only straight lines to the segments, and I don't know how to handle a case like this. Continuity is also required at the segment boundaries. – user43722 Apr 14 '14 at 14:14

-

Thanks, I have it now. It would probably have been easier to split this into two questions: (1) *How do I fit a quadratic or cubic regression model?* and (2) *Given that I know how to fit polynomials, how do I make piecewise functions of varying degree from them that are continuous across the join points?* ... But anyway, I'll come back with an answer, today (my time) if there's time. – Glen_b Apr 14 '14 at 20:30

1 Answer

So the question really has two parts:

1) How to fit polynomials

2) How to join polynomial segments in such a way as to make them continuous at join points

1) How to fit polynomials

The "easy" way is simply to put $1, x, x^2, x^3$, etc. as predictors in the regression equation.

For example, in R one might use `lm(y ~ x + I(x^2))` or even

    x2 <- x^2
    lm(y ~ x + x2)

to fit a quadratic.

However, care is required, because as $x$ becomes large in magnitude, the powers of $x$ all become more and more correlated and multicollinearity becomes a problem.

A simple approach that works quite well for low-order polynomials is to center the $x$'s (and possibly scale them) before taking powers. For example, in R one might use `lm(y ~ I(x-k) + I((x-k)^2) + I((x-k)^3))` or even

    x1 <- x - k
    x2 <- x1^2
    x3 <- x1^3
    lm(y ~ x1 + x2 + x3)

where $k$ would often be chosen to be a value close to the mean of $x$.

(The shift on the linear term isn't strictly necessary, but it's useful for the next question.)
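
A quick way to see the multicollinearity problem, and what centering does about it, is to compare the correlation between a predictor and its square before and after shifting. This sketch uses the `speed` column of R's built-in `cars` data (used again further down); the choice `k <- 15` is just an illustration of "a value close to the mean".

```r
# Correlation between a predictor and its square, before and after centering.
x <- cars$speed   # built-in data set; speeds run from 4 to 25
k <- 15           # a value near mean(x) (about 15.4), chosen for illustration

r_raw      <- cor(x, x^2)            # raw powers: nearly collinear
r_centered <- cor(x - k, (x - k)^2)  # centered powers: far less correlated

print(round(c(raw = r_raw, centered = r_centered), 2))  # raw about 0.98, centered about 0.03
```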

A more stable approach is to use orthogonal polynomials; in R, `lm(y ~ poly(x, 2))`. However, that doesn't suit our purposes here as directly.
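
As a sketch of the orthogonal-polynomial alternative (again on the built-in `cars` data), the `poly()` fit below gives the same fitted values as the raw quadratic; only the basis, and therefore the coefficients, differ:

```r
# Orthogonal polynomials: a different basis for the same quadratic fit.
fit_raw  <- lm(dist ~ speed + I(speed^2), data = cars)
fit_orth <- lm(dist ~ poly(speed, 2), data = cars)

# The poly() columns are orthonormal ...
P <- unclass(poly(cars$speed, 2))
print(round(crossprod(P), 10))   # 2 x 2 identity matrix

# ... yet the fitted values match the raw-power fit.
print(all.equal(fitted(fit_raw), fitted(fit_orth)))   # TRUE
```

Because the `poly()` coefficients don't belong to individual powers of $x$ that you can shift or truncate, this form is less convenient for the piecewise construction in part 2.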

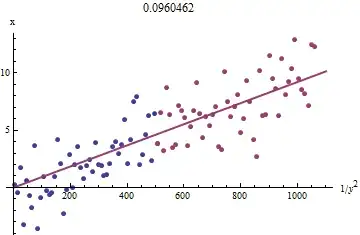

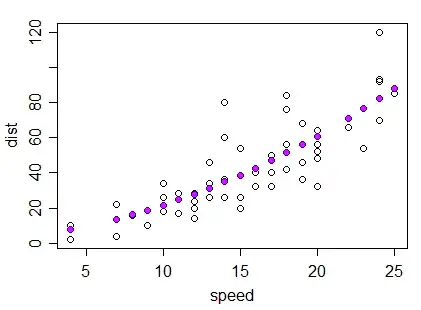

So here's an example fit of a quadratic in R:

    carfit <- lm(dist ~ speed + I(speed^2), data = cars)
    plot(dist ~ speed, data = cars)
    points(cars$speed, fitted(carfit), col = "magenta", pch = 16)

and here's an example of a fit with the x shifted to be centered near the mean of x:

    x1 <- cars$speed - 15
    x2 <- x1^2
    carfit2 <- lm(dist ~ x1 + x2, data = cars)
    plot(dist ~ speed, data = cars)
    points(cars$speed, fitted(carfit2), col = "blue", pch = 16)
    points(cars$speed, fitted(carfit), col = "magenta", pch = 16, cex = 0.7)

Here the second fit is in blue and the original fit is in magenta over the top (drawn a little smaller so you can see some of the blue). As you can see, the fits coincide.
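
The fits coincide because shifting $x$ only reparameterizes the quadratic. Writing $x = x_1 + k$,

$$a + bx + cx^2 = (a + bk + ck^2) + (b + 2ck)\,x_1 + c\,x_1^2,$$

so the centered fit must have exactly these coefficients. Here is a short check with the two `cars` fits ($k = 15$):

```r
carfit  <- lm(dist ~ speed + I(speed^2), data = cars)   # a + b*x + c*x^2
x1 <- cars$speed - 15
x2 <- x1^2
carfit2 <- lm(dist ~ x1 + x2, data = cars)              # shifted: x1 = x - 15

k   <- 15
abc <- unname(coef(carfit))    # a, b, c
implied <- c(abc[1] + abc[2] * k + abc[3] * k^2,   # new intercept
             abc[2] + 2 * abc[3] * k,              # new linear coefficient
             abc[3])                               # quadratic coefficient unchanged
print(all.equal(unname(coef(carfit2)), implied))   # TRUE
```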

2) How to join polynomial segments in such a way as to make them continuous at join points

Here, we do something with that "shift" of the various terms that in (1) was used for centering.

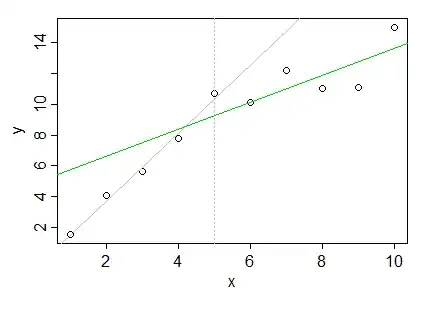

First let's do a simple case. Imagine I have just two segments and I am just fitting straight lines (I realize you can do this case but it's the basis for the more complex ones).

a) No attempt to make them join at a particular x-value:

Here you just fit each regression on its own. The two lines will cross somewhere, but they won't be continuous at your specified join point.

Example: here are some $x$ and $y$ values:

| x | y |
|---|---|
| 1 | 1.540185 |
| 2 | 4.051166 |
| 3 | 5.621000 |
| 4 | 7.752237 |
| 5 | 10.700486 |
| 6 | 10.103224 |
| 7 | 12.150661 |
| 8 | 10.982853 |
| 9 | 11.108116 |
| 10 | 14.993672 |

The segments are $x\leq 5$ and $x>5$, say.

If we fit lines to the data in those segments, we get:

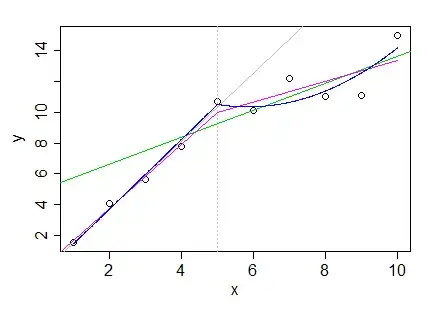

We see that the fit is discontinuous at $x=5$; in fact, the two lines cross way back near $x=4$. In this case we could improve things slightly by including the point at $x=5$ in both fits, but that doesn't solve the underlying problem.
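
To reproduce the discontinuity numerically, here is a sketch that fits the two segments of the example data separately, evaluates both lines at the intended join $x=5$, and solves for where they actually cross:

```r
# Example data from the answer
x <- 1:10
y <- c(1.540185, 4.051166, 5.621000, 7.752237, 10.700486,
       10.103224, 12.150661, 10.982853, 11.108116, 14.993672)

left  <- lm(y ~ x, subset = x <= 5)   # fit to the segment x <= 5
right <- lm(y ~ x, subset = x > 5)    # fit to the segment x > 5

# Values of the two separate lines at the intended join point x = 5
at5 <- c(left  = unname(predict(left,  data.frame(x = 5))),
         right = unname(predict(right, data.frame(x = 5))))
print(at5)    # about 10.34 and 9.25: a jump at the join

# x-value where the two lines actually intersect
cross <- unname((coef(right)[1] - coef(left)[1]) / (coef(left)[2] - coef(right)[2]))
print(cross)  # about 4.18
```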

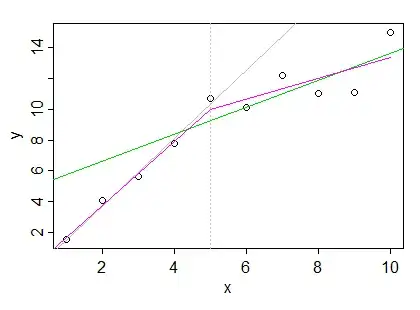

b) Continuous segments joining at $x=k$:

The easiest way to get the two lines to meet at 5 is to put all ten points into one regression with two predictors: the first is $x$, and the second leaves the line unaltered in the left half but changes it after 5. We build the second predictor by centering a copy of $x$ at 5 and then zeroing out its left half. We denote this by $(x-5)_+$, where the "$+$" indicates that we retain only the positive part of $x-5$ and otherwise set it to 0. The contribution of this second predictor is therefore 0 in the left half and increases linearly from 0 in the right half:

| x | $(x-5)_+$ |
|---|---|
| 1 | 0 |
| 2 | 0 |
| 3 | 0 |
| 4 | 0 |
| 5 | 0 |
| 6 | 1 |
| 7 | 2 |
| 8 | 3 |
| 9 | 4 |
| 10 | 5 |

We then fit a multiple linear regression with both predictors, which gives a segmented fit that is continuous at 5.

This is called a linear spline fit with a knot at 5.
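
In R the linear spline can be fitted directly, using `pmax()` to build $(x-5)_+$. This is a minimal sketch on the example data. The helper `f` simply writes out the fitted function from the coefficients so it can be evaluated on either side of the knot:

```r
x <- 1:10
y <- c(1.540185, 4.051166, 5.621000, 7.752237, 10.700486,
       10.103224, 12.150661, 10.982853, 11.108116, 14.993672)

xp <- pmax(x - 5, 0)             # (x - 5)_+ : 0 up to the knot, then x - 5
spline_fit <- lm(y ~ x + xp)
b <- unname(coef(spline_fit))    # intercept, slope, change in slope after 5

# The fitted function, usable anywhere (not just at the data points)
f <- function(u) b[1] + b[2] * u + b[3] * pmax(u - 5, 0)

print(c(left = f(5 - 1e-8), right = f(5 + 1e-8)))       # agree: continuous at 5
print(c(slope_left = b[2], slope_right = b[2] + b[3]))  # slope changes at 5
```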

If you want the fit to be both continuous and smooth (with continuous first and second derivatives), you should investigate cubic regression splines. They work very much in this vein and are widely used.
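
Here is a sketch of the cubic case, assuming the same single knot at 5 and the example data. A cubic regression spline can be built in the same spirit from $x, x^2, x^3$ and $((x-5)_+)^3$. The truncated cubic term and its first two derivatives are all zero at the knot, so the fit has continuous first and second derivatives there, and only the third derivative jumps:

```r
x <- 1:10
y <- c(1.540185, 4.051166, 5.621000, 7.752237, 10.700486,
       10.103224, 12.150661, 10.982853, 11.108116, 14.993672)
k <- 5
cubic_fit <- lm(y ~ x + I(x^2) + I(x^3) + I(pmax(x - k, 0)^3))
b <- unname(coef(cubic_fit))

# Fitted curve and its second derivative, written out from the coefficients
f  <- function(u) b[1] + b[2]*u + b[3]*u^2 + b[4]*u^3 + b[5]*pmax(u - k, 0)^3
f2 <- function(u) 2*b[3] + 6*b[4]*u + 6*b[5]*pmax(u - k, 0)

print(c(f2(k - 1e-8), f2(k + 1e-8)))   # agree: f'' is continuous at the knot
# The third derivative, 6*b[4] + 6*b[5]*(u > k), jumps by 6*b[5] at the knot.
```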

c) Polynomial segments:

Let's start with a simple case: linear in the left half, quadratic in the right half. Here we can just add an additional term for the quadratic in the right half, $((x-5)_+)^2$. The "+" zeroes it out below 5, so there's still only a line in the left half:

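| x | $(x-5)_+$ | $((x-5)_+)^2$ |
|---|---|---|
| 1 | 0 | 0 |
| 2 | 0 | 0 |
| 3 | 0 | 0 |
| 4 | 0 | 0 |
| 5 | 0 | 0 |
| 6 | 1 | 1 |
| 7 | 2 | 4 |
| 8 | 3 | 9 |
| 9 | 4 | 16 |
| 10 | 5 | 25 |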

As you can see, the fit is linear on the left and quadratic on the right. You can just as easily add more quadratic segments further to the right using the same trick (replacing the knot at 5 in $(x-5)_+$ with the next knot value).
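
For the three-segment problem in the question (degree 1, then 2, then 3, continuous at the joins), the same trick could be sketched as follows. The data are simulated purely for illustration, the knots `k1 = 10` and `k2 = 20` are arbitrary, and `seg_basis()` is a hypothetical helper rather than a library function. Each power of $(x-k)_+$ is zero up to its knot, so segment 1 is a line, segment 2 adds a quadratic in $(x-k_1)_+$, and segment 3 adds a cubic in $(x-k_2)_+$. Every term is continuous, so the fit is continuous at both knots; only continuity is imposed, not smoothness.

```r
# Simulated data, purely for illustration
set.seed(1)
x  <- seq(0, 30, length.out = 121)
y  <- 2 + 0.1 * x + sin(x / 5) + rnorm(length(x), sd = 0.2)
k1 <- 10   # end of segment 1 (degree 1)
k2 <- 20   # end of segment 2 (degree 2); segment 3 is cubic

# Hypothetical helper: predictors for a continuous degree 1 / 2 / 3 fit
seg_basis <- function(u) {
  d1 <- pmax(u - k1, 0)   # (u - k1)_+
  d2 <- pmax(u - k2, 0)   # (u - k2)_+
  cbind(u  = u,
        d1 = d1, d1sq = d1^2,                 # quadratic pieces switched on at k1
        d2 = d2, d2sq = d2^2, d2cu = d2^3)    # cubic pieces switched on at k2
}

fit <- lm(y ~ seg_basis(x))
f <- function(u) drop(cbind(1, seg_basis(u)) %*% coef(fit))   # fitted curve

print(c(f(k1 - 1e-9), f(k1 + 1e-9)))   # continuous at k1
print(c(f(k2 - 1e-9), f(k2 + 1e-9)))   # continuous at k2
```

If you also wanted the slope to be continuous at the knots, you would drop the first-power terms `d1` and `d2`.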

Problem: dropping down a degree to the right is a bit trickier, because you need to impose a constraint. If the degree is monotonic non-increasing across segments (that is, it only goes down), you can do it as above by running "backwards": zero out the terms to the right of the knot (using $(5-x)_+$ instead of $(x-5)_+$, for example) and then add terms as required to the left.
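
Here is a sketch of the "backwards" trick on the example data, assuming a quadratic to the left of 5 and a line to the right. The predictor $(5-x)_+$ and its square are zero at and to the right of the knot, so they shape only the left segment:

```r
x <- 1:10
y <- c(1.540185, 4.051166, 5.621000, 7.752237, 10.700486,
       10.103224, 12.150661, 10.982853, 11.108116, 14.993672)
k <- 5
back <- pmax(k - x, 0)                     # (5 - x)_+ : zero at and right of the knot
back_fit <- lm(y ~ x + back + I(back^2))   # quadratic left of 5, linear right of 5
b <- unname(coef(back_fit))

f <- function(u) b[1] + b[2]*u + b[3]*pmax(k - u, 0) + b[4]*pmax(k - u, 0)^2

u_right <- seq(5, 10, by = 0.5)
print(max(abs(diff(f(u_right), differences = 2))))   # essentially 0: linear right of 5
```

With more segments whose degree keeps falling, you would keep adding powers of $(k_j - x)_+$ for each knot $k_j$ further to the left.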

If you need the degree to step up and down in arbitrary ways, then in the simpler cases you can impose the required constraints algebraically and calculate the corresponding predictors (if you have a regression function that handles constraints, you could impose them that way and save some effort). If you have a lot of predictors, probably the best approach is to adapt the B-spline approach. There are many possible things you might try to do, so it's a bit hard to anticipate them all.
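
For the up-then-down case, one way to impose the constraint algebraically is to tie two coefficients together in a single predictor. Take a line, then a quadratic between knots $k_1$ and $k_2$, then a line again. The predictor $((x-k_1)_+)^2 - ((x-k_2)_+)^2$ equals $(x-k_1)^2$ between the knots, but beyond $k_2$ it equals $(k_2-k_1)(2x-k_1-k_2)$, which is linear. The sketch below uses simulated data, arbitrary knots and a hypothetical helper `lql_basis()`:

```r
set.seed(2)
x  <- seq(0, 30, length.out = 121)
y  <- 1 + 0.3 * x + cos(x / 4) + rnorm(length(x), sd = 0.2)   # simulated
k1 <- 10
k2 <- 20

# Hypothetical helper: predictors for a linear / quadratic / linear fit
lql_basis <- function(u) {
  d1 <- pmax(u - k1, 0)
  d2 <- pmax(u - k2, 0)
  # d1^2 - d2^2 is (u - k1)^2 between the knots and (k2 - k1) * (2u - k1 - k2),
  # a linear function, beyond k2: "no quadratic term after k2" is built in.
  cbind(u = u, d1 = d1, q = d1^2 - d2^2, d2 = d2)
}

fit <- lm(y ~ lql_basis(x))
f <- function(u) drop(cbind(1, lql_basis(u)) %*% coef(fit))

print(max(abs(diff(f(seq(20, 30, by = 1)), differences = 2))))  # ~0: linear after k2
```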

Hopefully this is sufficient to get you started, anyway.

Another possible shortcut occurs to me that might work in some cases: use a sequence of natural splines (or, more generally, a modified version of the natural-spline approach). For example, natural cubic splines reduce to linear beyond the last (boundary) knot, so it may be possible to string several natural splines together over disjoint subsets in such a way that the fit transitions between cubic-spline and linear-spline sections as smoothly as possible.
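
You can check that natural cubic splines become linear beyond the boundary knots with `ns()` from R's `splines` package. In this sketch (arbitrary data range and `df`), each basis column is evaluated past the upper boundary knot, and its second differences vanish:

```r
library(splines)
x <- seq(0, 10, length.out = 50)
B <- ns(x, df = 4)                # natural cubic spline basis; boundary knots at 0 and 10
u_out <- seq(10.5, 15, by = 0.5)  # points beyond the upper boundary knot
B_out <- predict(B, u_out)        # the same basis functions, evaluated at the new points

# Second differences of every column are (numerically) zero: each basis
# function, and hence any fit built from them, is linear past the boundary knot.
print(max(abs(apply(B_out, 2, diff, differences = 2))))
```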

-

Could you please give some more hints on how to write the R code for the process you describe? – user43722 Apr 15 '14 at 16:45

-

If you have a specific question relating to coding it I may be able to answer it, but this question now sounds an awful lot like a homework question. – Glen_b Apr 15 '14 at 19:35

-

[B-splines](http://en.wikipedia.org/wiki/B-spline) are splines modified to have local effects, as opposed to the ordinary splines I discussed in my answer, which affect everything after the knot (or before, if you flip them around). By contrast, B-splines affect the fit only over the next few knots. This may make it somewhat easier to do odd things like changing the degree of the fit up and down, since the higher-order terms will die out (or it may not help that much, since each term still has effects over several knots). – Glen_b Apr 19 '14 at 01:50
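
To see the local support, this sketch (with arbitrary knots at 1, ..., 9) measures how wide the nonzero region of each cubic `bs()` basis function is. It then compares that with a truncated power term $((x-1)_+)^3$ of the kind used in the answer:

```r
library(splines)
x <- seq(0, 10, by = 0.1)
B <- bs(x, knots = 1:9, degree = 3)   # cubic B-spline basis, interior knots 1, ..., 9

# Width of the region where each basis function is nonzero
width <- apply(B, 2, function(col) diff(range(x[col > 0])))
print(round(width, 1))   # never more than 4 = degree + 1 knot intervals

# A truncated power term such as (x - 1)_+^3 stays nonzero all the way to 10
print(diff(range(x[pmax(x - 1, 0)^3 > 0])))
```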

-

When we use ns() in R to fit a natural cubic spline to (x, y), it provides a "B-spline Basis Matrix" of dimension c(length(x), df). Why do we need this matrix? Also, when we fit a linear model lm(y~ns(x,df=5),data), say, and call summary() on the fit, it prints 6 coefficients, including the intercept. Can you please explain those coefficients? – user43722 Apr 19 '14 at 17:49

-

This sounds like a whole (and quite broad) new question, one about how B-splines work. – Glen_b Apr 19 '14 at 17:51

-

You'd be best posting a question on regression B-splines - there are surely lots of people more knowledgeable than me on the topic. But I wouldn't tackle that topic unless you're familiar with (say) cubic regression splines already. – Glen_b Apr 22 '14 at 07:40

-

You might try [these notes](http://www.ulb.ac.be/soco/statrope/cours/stat-s-404/notes/SplineReg.pdf) in the meantime. – Glen_b Apr 22 '14 at 07:59

-

When I use ns(), some of the B-spline basis functions take negative values, but when I use bs() on the same data sets, all the basis function values are nonnegative. Why is there such a difference? – user43722 Apr 25 '14 at 21:47

-

Two reasons: (1) because different basis functions represent functions differently, even if they describe the same fit; (2) ns and bs also describe somewhat different fits in general. Basically, it's because B-splines are just set up that way. – Glen_b Apr 25 '14 at 23:11
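
Related to the `ns()`/`bs()` questions in these comments, this sketch on the built-in `cars` data shows the dimensions of the `ns()` basis and the sign pattern of the two bases. It also shows why `lm(y ~ ns(x, df = 5))` reports 6 coefficients:

```r
library(splines)
x <- cars$speed
y <- cars$dist

Bb <- bs(x, df = 5)   # cubic B-spline basis, 2 interior knots at quantiles of x
Bn <- ns(x, df = 5)   # natural cubic spline basis, 4 interior knots at quantiles

print(dim(Bn))        # 50 rows (length(x)) by 5 columns (df)
print(range(Bb))      # B-spline basis values: never negative
print(range(Bn))      # natural-spline basis values: may include negatives

fit_ns <- lm(y ~ ns(x, df = 5))
print(length(coef(fit_ns)))   # 6: the intercept plus one coefficient per basis column
```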

-

I think if you use the arguments to set each fit up the right way to make their fits correspond, you should be able to get the same fit though. Representations of spline fits aren't unique (indeed, representations just of ordinary polynomials aren't unique either). – Glen_b Apr 25 '14 at 23:23
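
As an illustration that spline representations are not unique, assume a single knot at 15 on the `cars` data. A cubic B-spline basis from `bs()` and the truncated power basis $1, x, x^2, x^3, ((x-15)_+)^3$ span the same space, so they give identical fitted values even though the coefficients are completely different:

```r
library(splines)
x <- cars$speed
y <- cars$dist
k <- 15   # single interior knot, chosen for illustration

fit_bs <- lm(y ~ bs(x, knots = k, degree = 3))                  # B-spline basis
fit_tp <- lm(y ~ x + I(x^2) + I(x^3) + I(pmax(x - k, 0)^3))     # truncated power basis

print(all.equal(fitted(fit_bs), fitted(fit_tp)))   # TRUE: same fitted curve
print(coef(fit_bs))                                # ... but different coefficients
print(coef(fit_tp))
```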

-

How do we know the total number of basis functions required? It depends on the number of control points, but how do we choose that number? – user43722 Apr 27 '14 at 15:00

-

That depends on what you need it to do. This is not the sort of thing that can be answered in comments. It might be a good question on its own. – Glen_b Apr 27 '14 at 15:04
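
For a fixed set of knots, the number of basis columns that `bs()` and `ns()` produce follows a simple count. For `bs()` it is `length(knots) + degree`, plus one with `intercept = TRUE`; for `ns()` it is `length(knots) + 1`, plus one with an intercept. How many knots to use is the modelling question the comment refers to. This sketch, with three arbitrary interior knots, only shows the count:

```r
library(splines)
x  <- seq(0, 10, length.out = 101)
kn <- c(2.5, 5, 7.5)   # three interior knots, chosen arbitrarily

n_bs     <- ncol(bs(x, knots = kn, degree = 3))                    # 3 + 3 = 6
n_bs_int <- ncol(bs(x, knots = kn, degree = 3, intercept = TRUE))  # 3 + 3 + 1 = 7
n_ns     <- ncol(ns(x, knots = kn))                                # 3 + 1 = 4
print(c(bs = n_bs, bs_intercept = n_bs_int, ns = n_ns))
```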

-

@Glen_b-ReinstateMonica thank you for this answer. I have only just started learning about splines, and I can make neither head nor tail of what is happening when I read the textbook. Your answer is a good start! Thank you :) – gvij Jan 06 '20 at 20:45