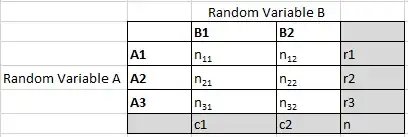

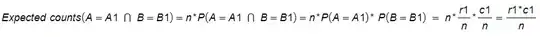

You may want to test whether two variables, A and B, are independent (test of independence), or whether the distribution of A given B=B1 (first column) fits the distribution of A given B=B2 (second column), that is, whether P(A|B=B1)=P(A|B=B2) (goodness of fit test). As an example I'm taking the data table posted by @ColorStatistics in her answer.

The two calculations are slightly different. The test of independence takes into account the differences of both distributions from the expected counts, so you have more terms, but smaller ones (the expected counts are "in between" the observed values). The goodness of fit test takes into account the differences of the first distribution from the second (the expected counts are the values of the second distribution), so you have fewer terms, but bigger ones.
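To make the "more but smaller terms" versus "fewer but bigger terms" contrast concrete, here is a minimal sketch of both calculations in plain Python. The counts are hypothetical (they are not the table from the other answer), the function names are mine, and I am assuming that for the goodness of fit the B2 counts are rescaled to the B1 subtotal to get the expected counts.

```python
# Hypothetical counts (NOT the table from the answer): each row is a level of A,
# given as (count in B1, count in B2).
observed = [(20, 30), (30, 20)]

def independence_statistic(table):
    """Chi-square statistic with expected counts built from row and column totals."""
    c1 = sum(b1 for b1, _ in table)
    c2 = sum(b2 for _, b2 in table)
    n = c1 + c2
    stat = 0.0
    for b1, b2 in table:
        row_total = b1 + b2
        # One term per cell: both columns contribute.
        for obs, col_total in ((b1, c1), (b2, c2)):
            expected = row_total * col_total / n
            stat += (obs - expected) ** 2 / expected
    return stat

def goodness_of_fit_statistic(table):
    """Chi-square statistic of column B1 against the B2 proportions.

    Assumption: expected counts are the B2 counts rescaled to the B1 subtotal.
    """
    c1 = sum(b1 for b1, _ in table)
    c2 = sum(b2 for _, b2 in table)
    stat = 0.0
    for b1, b2 in table:
        # One term per row: only the B1 column contributes.
        expected = c1 * b2 / c2
        stat += (b1 - expected) ** 2 / expected
    return stat

independence = independence_statistic(observed)
goodness_of_fit = goodness_of_fit_statistic(observed)
print(independence, goodness_of_fit)
```

With these made-up counts the independence statistic is the sum of four terms equal to 1 each (every expected count is 25), while the goodness of fit statistic is the sum of two larger terms, 100/30 and 100/20.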

The two methods tend to give the same result if one subtotal of B is much greater than the other and accounts for most of the elements, that is, if the B2 elements are only very slightly influenced by the extraction of the B1 elements (c2>>c1 and c2~N). In this case the expected counts for the B2 column are almost equal to the observed values. So computing the difference from the expected counts (test of independence) is almost the same as computing the difference from the B2 column (goodness of fit test).
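A quick numerical check of this limit, again as a sketch with hypothetical counts: the B1 column is kept fixed while the B2 column is multiplied by a factor `k`, so that c2 grows relative to c1. The helper `both_statistics` is my own name, and the goodness of fit expected counts are again assumed to be the B2 counts rescaled to the B1 subtotal.

```python
def both_statistics(b1, b2):
    """Return (independence statistic, goodness of fit statistic) for two columns."""
    c1, c2 = sum(b1), sum(b2)
    n = c1 + c2
    independence = 0.0
    goodness_of_fit = 0.0
    for x1, x2 in zip(b1, b2):
        # Test of independence: expected counts from row and column totals.
        e1 = (x1 + x2) * c1 / n
        e2 = (x1 + x2) * c2 / n
        independence += (x1 - e1) ** 2 / e1 + (x2 - e2) ** 2 / e2
        # Goodness of fit (assumption): B2 counts rescaled to the B1 subtotal.
        fit_expected = c1 * x2 / c2
        goodness_of_fit += (x1 - fit_expected) ** 2 / fit_expected
    return independence, goodness_of_fit

b1 = [20, 30]  # hypothetical B1 counts, kept fixed
gaps = {}
for k in (1, 10, 1000):
    b2 = [30 * k, 20 * k]  # hypothetical B2 counts, scaled so that c2 >> c1
    independence, goodness_of_fit = both_statistics(b1, b2)
    gaps[k] = abs(independence - goodness_of_fit)
    print(k, round(independence, 3), round(goodness_of_fit, 3))
```

The goodness of fit statistic does not change with `k`, because the B2 proportions stay the same, while the independence statistic moves towards it as `k` grows.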