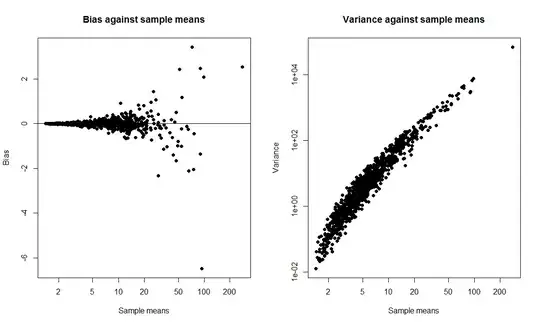

I wanted to do a class demonstration comparing a t-interval with a bootstrap interval and estimating the coverage probability of each. I wanted the data to come from a skewed distribution, so I generated them as `exp(rnorm(10, 0, 2)) + 1`, a sample of size 10 from a shifted lognormal. I wrote a script that draws 1000 samples and, for each sample, calculates both a 95% t-interval and a 95% bootstrap percentile interval based on 1000 replicates.
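As a sanity check on the target of the intervals, here is a small sketch that computes the true mean of this shifted lognormal two ways: from the closed form `exp(mu + sigma^2/2) + 1` and by numerical integration on the normal scale. The names `analytic.mean` and `numeric.mean` and the integration limits of 20 standard deviations either side are my own choices for illustration.

```r
# Data are 1 + exp(Z) with Z ~ N(mu, sigma^2).
mu <- 0
sigma <- 2

# Closed form: E[exp(Z)] = exp(mu + sigma^2 / 2), then add the shift of 1.
analytic.mean <- exp(mu + sigma^2 / 2) + 1

# Numerical check: integrate exp(z) against the normal density.
# Finite limits (assumed wide enough) keep exp(z) from overflowing.
numeric.mean <- integrate(
  function(z) exp(z) * dnorm(z, mean = mu, sd = sigma),
  lower = mu - 20 * sigma, upper = mu + 20 * sigma
)$value + 1

c(analytic = analytic.mean, numeric = numeric.mean)
```

Both values should agree with `exp(2) + 1`, the quantity the coverage is measured against.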

When I run the script, both methods give very similar intervals, and both have an estimated coverage probability of only 50-60%, well below the nominal 95%. I was surprised because I expected the bootstrap interval to do better.

My question is, have I

- made a mistake in the code?
- made a mistake in calculating the intervals?
- made a mistake by expecting the bootstrap interval to have better coverage properties?

Also, is there a way to construct a more reliable CI in this situation?

Here is my script:

    tCI.total <- 0
    bootCI.total <- 0
    m <- 10                   # sample size
    true.mean <- exp(2) + 1   # lognormal mean exp(sigma^2/2) with sigma = 2, plus the shift of 1

    for (i in 1:1000) {
      samp <- exp(rnorm(m, 0, 2)) + 1
      # 95% t-interval
      tCI <- mean(samp) + c(1, -1) * qt(0.025, df = m - 1) * sd(samp) / sqrt(m)
      # 95% bootstrap percentile interval from 1000 resamples
      boot.means <- rep(0, 1000)
      for (j in 1:1000) boot.means[j] <- mean(sample(samp, m, replace = TRUE))
      bootCI <- sort(boot.means)[c(0.025 * length(boot.means), 0.975 * length(boot.means))]
      # count the intervals that contain the true mean
      if (true.mean > min(tCI) && true.mean < max(tCI)) tCI.total <- tCI.total + 1
      if (true.mean > min(bootCI) && true.mean < max(bootCI)) bootCI.total <- bootCI.total + 1
    }

    tCI.total / 1000      # estimate of t interval coverage probability
    bootCI.total / 1000   # estimate of bootstrap interval coverage probability
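To see how the coverage changes with sample size, the same simulation can be wrapped in a function. This is only a rough sketch: `coverage.sim` and its arguments are hypothetical names, and it uses `quantile()` (default type 7) for the percentile interval, which differs slightly from indexing the sorted bootstrap means. It also reports a binomial Monte Carlo standard error for each coverage estimate.

```r
# Hypothetical helper: estimated coverage of the t-interval and the
# bootstrap percentile interval for samples of size m from 1 + exp(N(0, sigma^2)).
coverage.sim <- function(m, n.sim = 1000, n.boot = 1000, sigma = 2, level = 0.95) {
  alpha <- 1 - level
  true.mean <- exp(sigma^2 / 2) + 1
  hits <- replicate(n.sim, {
    samp <- exp(rnorm(m, 0, sigma)) + 1
    tCI <- mean(samp) + c(-1, 1) * qt(1 - alpha / 2, df = m - 1) * sd(samp) / sqrt(m)
    boot.means <- replicate(n.boot, mean(sample(samp, m, replace = TRUE)))
    bootCI <- quantile(boot.means, c(alpha / 2, 1 - alpha / 2), names = FALSE)
    # TRUE when the interval contains the true mean
    c(t    = true.mean > tCI[1] && true.mean < tCI[2],
      boot = true.mean > bootCI[1] && true.mean < bootCI[2])
  })
  coverage <- rowMeans(hits)  # proportion of intervals covering the true mean
  # Binomial Monte Carlo standard error of each coverage estimate
  rbind(coverage = coverage, mc.se = sqrt(coverage * (1 - coverage) / n.sim))
}

set.seed(1)  # arbitrary seed, for reproducibility only
coverage.sim(m = 10, n.sim = 200, n.boot = 200)
```

Calling it with larger values of `m` gives a quick way to check whether either interval approaches 95% coverage as the sample size grows.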