The standard error of the intercept term ($\hat{\beta}_0$) in $y=\beta_1x+\beta_0+\varepsilon$ is given by $$SE(\hat{\beta}_0)^2 = \sigma^2\left[\frac{1}{n}+\frac{\bar{x}^2}{\sum_{i=1}^n(x_i-\bar{x})^2}\right]$$ where $\bar{x}$ is the mean of the $x_i$'s.
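As a sanity check on the formula, here is a minimal sketch that compares it with a Monte Carlo estimate of the spread of $\hat{\beta}_0$. It assumes a fixed, hypothetical design `x = np.linspace(5, 15, 20)` (so $\bar{x}=10$), a known noise standard deviation $\sigma = 1$, and made-up true values $\beta_0 = 2$, $\beta_1 = 0.5$. The helper names `se_intercept` and `simulated_intercept_sd` are illustrative. `np.polyfit` fits every simulated dataset in one call when they are passed as the columns of a 2D array.

```python
import numpy as np

def se_intercept(x, sigma):
    """SE(beta0_hat) from the closed-form formula, for a fixed design x and known sigma."""
    x = np.asarray(x, dtype=float)
    sxx = np.sum((x - x.mean()) ** 2)
    return sigma * np.sqrt(1.0 / x.size + x.mean() ** 2 / sxx)

def simulated_intercept_sd(x, beta0, beta1, sigma, n_sims=20000, seed=0):
    """Monte Carlo sd of beta0_hat: refit OLS on many fresh noise draws with the same x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # One simulated dataset per column, all sharing the same x values.
    y = beta0 + beta1 * x[:, None] + rng.normal(0.0, sigma, size=(x.size, n_sims))
    coef = np.polyfit(x, y, deg=1)  # shape (2, n_sims): row 0 = slopes, row 1 = intercepts
    return coef[1].std()

# Hypothetical design and true coefficients, chosen only for illustration.
x = np.linspace(5.0, 15.0, 20)
print("formula:  ", se_intercept(x, 1.0))
print("simulated:", simulated_intercept_sd(x, 2.0, 0.5, 1.0))
```

The two printed numbers should agree to within Monte Carlo error, which is about 0.5% of the SE with 20000 simulated samples.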

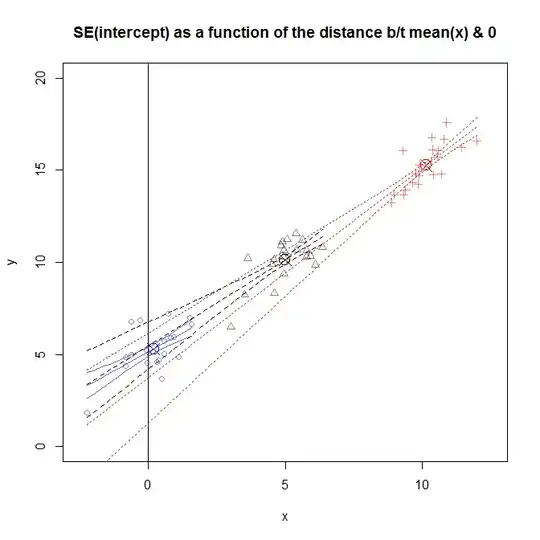

From what I understand, the SE quantifies the uncertainty in $\hat{\beta}_0$: for instance, in roughly 95% of samples the interval $[\hat{\beta}_0-2SE,\hat{\beta}_0+2SE]$ will contain the true $\beta_0$. What I fail to understand is how the SE, a measure of uncertainty, can grow with $|\bar{x}|$. If I simply shift my data so that $\bar{x}=0$, does my uncertainty go down? That seems unreasonable.
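The "95% of samples" reading can be checked with a small simulation. The sketch below assumes $\sigma$ is known, so the interval uses the closed-form SE directly rather than an estimated $\sigma$ with a $t$ quantile. It also assumes a hypothetical design `x = np.linspace(5, 15, 20)` and made-up true values $\beta_0 = 2$, $\beta_1 = 0.5$, $\sigma = 1$; `intercept_interval_coverage` is an illustrative name. It runs once on the raw $x$ and once on $x$ shifted to mean zero. After the shift, the true intercept in the new coordinates is $\beta_0 + \beta_1\bar{x}$, the height of the line at the old mean, so that is the value the interval is checked against.

```python
import numpy as np

def intercept_interval_coverage(x, beta0, beta1, sigma, n_sims=20000, seed=1):
    """Fraction of simulated samples whose interval beta0_hat +/- 2*SE contains beta0.

    Assumes sigma is known, so SE comes straight from the closed-form formula.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    sxx = np.sum(xc ** 2)
    se = sigma * np.sqrt(1.0 / x.size + x.mean() ** 2 / sxx)
    # One row per simulated sample; the design x stays fixed.
    y = beta0 + beta1 * x + rng.normal(0.0, sigma, size=(n_sims, x.size))
    b1 = (y @ xc) / sxx                  # OLS slope for each sample
    b0 = y.mean(axis=1) - b1 * x.mean()  # OLS intercept for each sample
    covered = np.abs(b0 - beta0) <= 2.0 * se
    return covered.mean(), se

x = np.linspace(5.0, 15.0, 20)
beta0, beta1, sigma = 2.0, 0.5, 1.0
cov_raw, se_raw = intercept_interval_coverage(x, beta0, beta1, sigma)
# Shifted data: the "intercept" is now the line's value at the old mean.
cov_c, se_c = intercept_interval_coverage(x - x.mean(), beta0 + beta1 * x.mean(), beta1, sigma)
print(f"raw x:      SE = {se_raw:.3f}, coverage = {cov_raw:.3f}")
print(f"centered x: SE = {se_c:.3f}, coverage = {cov_c:.3f}")
```

With $\sigma$ known, $\pm 2SE$ has a nominal coverage of about 95.4%, so both runs should land near that value even though the two SEs differ.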

An analogous interpretation: in the uncentered version of my data, $\hat{\beta}_0$ corresponds to my prediction at $x=0$, while in the centered data, $\hat{\beta}_0$ corresponds to my prediction at $x=\bar{x}$. Does this mean that my uncertainty about my prediction at $x=0$ is greater than my uncertainty about my prediction at $x=\bar{x}$? That seems unreasonable too: the error $\varepsilon$ has the same variance for all values of $x$, so my uncertainty in my predicted values should be the same for all $x$.
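The same question can be probed at a general point $x_0$. A standard OLS result (fixed design, homoscedastic noise) is that the fitted value $\hat{\beta}_0+\hat{\beta}_1x_0$ has variance $\sigma^2\left[\frac{1}{n}+\frac{(x_0-\bar{x})^2}{\sum_{i=1}^n(x_i-\bar{x})^2}\right]$, which reduces to the intercept formula above at $x_0=0$. This is the uncertainty in the estimated line itself; a prediction for a new observation would carry an extra $\sigma^2$ from $\varepsilon$. The sketch below, assuming known $\sigma = 1$, a hypothetical design `x = np.linspace(5, 15, 20)` and made-up true values $\beta_0 = 2$, $\beta_1 = 0.5$, compares that formula with a simulation at $x_0=0$ and $x_0=\bar{x}$. The names `fitted_value_se` and `simulated_fitted_sd` are illustrative.

```python
import numpy as np

def fitted_value_se(x, x0, sigma):
    """SE of the fitted line's value beta0_hat + beta1_hat * x0 (known sigma, fixed x)."""
    x = np.asarray(x, dtype=float)
    sxx = np.sum((x - x.mean()) ** 2)
    return sigma * np.sqrt(1.0 / x.size + (x0 - x.mean()) ** 2 / sxx)

def simulated_fitted_sd(x, x0, beta0, beta1, sigma, n_sims=20000, seed=2):
    """Monte Carlo sd of the fitted value at x0 over repeated noise draws."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    xc = x - x.mean()
    y = beta0 + beta1 * x + rng.normal(0.0, sigma, size=(n_sims, x.size))
    b1 = (y @ xc) / np.sum(xc ** 2)      # OLS slope per sample
    b0 = y.mean(axis=1) - b1 * x.mean()  # OLS intercept per sample
    return np.std(b0 + b1 * x0)

x = np.linspace(5.0, 15.0, 20)
for x0 in (0.0, x.mean()):
    formula = fitted_value_se(x, x0, 1.0)
    simulated = simulated_fitted_sd(x, x0, 2.0, 0.5, 1.0)
    print(f"x0 = {x0:5.2f}: formula {formula:.3f}, simulated {simulated:.3f}")
```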

I'm sure there are gaps in my understanding. Could somebody help me understand what's going on?