We have a bivariate normal process where $X \sim N(\mu_x, \sigma)$ and $Y \sim N(\mu_y, \sigma)$, with no covariance, where $\sigma$ is the common standard deviation of both axes.

The true center $(\mu_x, \mu_y)$ is unknown.

(For convenience we can assume that $\sigma = 1$, or that we have a good estimate of its value.)

We are trying to characterize the distance between our sample center and the true center $(\mu_x, \mu_y)$ as a function of the number of shots sampled, $n$.

Because we care only about our distance from the true center, not its location, we can assume without loss of generality that $\mu_x = \mu_y = 0$ and study the random variable $R(n) = \sqrt{\bar{x}^2 + \bar{y}^2}$, the distance between the sample center $(\bar{x}, \bar{y})$ of $n$ shots and the true center.
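If the shots are independent and $\sigma$ is read as the per-axis standard deviation (assumptions, not stated outright above), the distribution of $R(n)$ follows in a few lines. A sketch of the reasoning, with $\bar{x}$ and $\bar{y}$ the sample means of the $n$ shot coordinates:

$$
\begin{aligned}
\bar{x} &= \frac{1}{n}\sum_{i=1}^{n} x_i \sim N\!\left(0, \frac{\sigma^2}{n}\right), \qquad
\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i \sim N\!\left(0, \frac{\sigma^2}{n}\right), \\
\frac{n\,R(n)^2}{\sigma^2} &= \left(\frac{\sqrt{n}\,\bar{x}}{\sigma}\right)^2 + \left(\frac{\sqrt{n}\,\bar{y}}{\sigma}\right)^2 \sim \chi^2_2, \\
P\big(R(n) \le r\big) &= P\!\left(\chi^2_2 \le \frac{n r^2}{\sigma^2}\right) = 1 - \exp\!\left(-\frac{n r^2}{2\sigma^2}\right), \qquad r \ge 0.
\end{aligned}
$$

The last line is the CDF of a Rayleigh distribution with scale $\sigma/\sqrt{n}$, so under these assumptions $\sqrt{n}\,R(n)/\sigma$ has the same distribution for every $n$.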

Question: How can we characterize a confidence interval for $R(n)$?

Note that $R(n) \ge 0$ and $E[R(n)] \to 0$ as $n \to \infty$.
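Assuming independent shots with known $\sigma$, so that $R(n)$ is Rayleigh with scale $\sigma/\sqrt{n}$, the standard Rayleigh moments make the $E[R(n)] \to 0$ behavior explicit and give reference values for Monte Carlo estimates:

$$
E[R(n)] = \frac{\sigma}{\sqrt{n}}\sqrt{\frac{\pi}{2}}, \qquad
\operatorname{SD}[R(n)] = \frac{\sigma}{\sqrt{n}}\sqrt{\frac{4-\pi}{2}}, \qquad
\frac{\operatorname{SD}[R(n)]}{E[R(n)]} = \sqrt{\frac{4-\pi}{\pi}} \approx 0.523.
$$

Both moments shrink like $1/\sqrt{n}$, while their ratio does not depend on $n$ at all.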

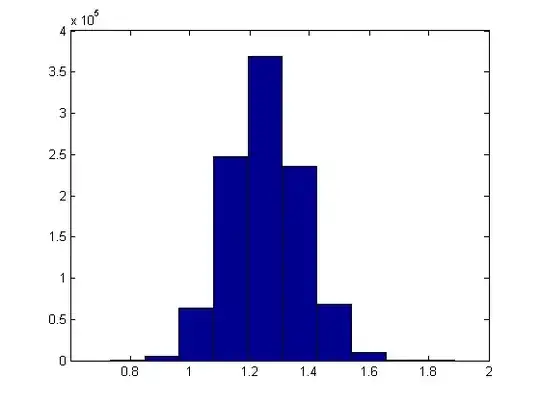

I have Monte Carlo estimates of both the mean and standard deviation of $R(n)$ for small $n$.
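A minimal sketch of how such Monte Carlo estimates can be produced, assuming independent normal shots with per-axis standard deviation `sigma` and the true center at the origin. `simulate_R` and its parameters are hypothetical names for illustration, not the code behind the plots above.

```python
import numpy as np


def simulate_R(n, sigma=1.0, trials=100_000, rng=None):
    """Monte Carlo draws of R(n): distance from the true center (the origin)
    to the center of n independent shots with per-axis standard deviation sigma."""
    rng = np.random.default_rng() if rng is None else rng
    # Each row is one group of n shots; each column is one shot.
    x = rng.normal(0.0, sigma, size=(trials, n))
    y = rng.normal(0.0, sigma, size=(trials, n))
    # Sample center of each group, then its Euclidean distance from the origin.
    return np.hypot(x.mean(axis=1), y.mean(axis=1))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for n in (2, 5, 10, 30):
        r = simulate_R(n, rng=rng)
        print(f"n={n:2d}  mean={r.mean():.4f}  sd={r.std(ddof=1):.4f}")
```

Vectorizing over trials keeps this fast for $n$ up to 30. The resulting means and standard deviations can be compared against $\frac{\sigma}{\sqrt{n}}\sqrt{\pi/2}$ and $\frac{\sigma}{\sqrt{n}}\sqrt{(4-\pi)/2}$.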

I want to calculate confidence levels and intervals for $R(n)$. That is, given $n$ and a confidence level of 90%, what is the confidence interval for a sample $R(n)$ about its population mean?
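If $R(n)$ is Rayleigh with scale $\sigma/\sqrt{n}$ (independent shots, $\sigma$ known), interval endpoints come straight from the Rayleigh quantile function $F^{-1}(p) = \frac{\sigma}{\sqrt{n}}\sqrt{-2\ln(1-p)}$. The sketch below returns an equal-tailed interval, which is one reasonable choice among several; `rayleigh_interval` is a hypothetical helper.

```python
import math


def rayleigh_interval(n, sigma=1.0, level=0.90):
    """Equal-tailed `level` interval for R(n), ASSUMING independent shots with
    known per-axis standard deviation sigma, so R(n) ~ Rayleigh(sigma / sqrt(n))."""
    scale = sigma / math.sqrt(n)

    def quantile(p):
        # Rayleigh inverse CDF: scale * sqrt(-2 ln(1 - p)); log1p keeps precision.
        return scale * math.sqrt(-2.0 * math.log1p(-p))

    tail = (1.0 - level) / 2.0
    return quantile(tail), quantile(1.0 - tail)


lo, hi = rayleigh_interval(10, sigma=1.0, level=0.90)
print(f"90% interval for R(10): [{lo:.4f}, {hi:.4f}]")
```

For a one-sided statement such as "$R(n)$ is below $r$ with 90% probability", the single quantile at $p = 0.90$ is the relevant number instead.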

I don't believe this is amenable to CLT analysis because the values are bounded below by 0.
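One way to check the CLT concern numerically, again assuming the Rayleigh result: build the normal-style interval mean $\pm z\cdot$SD and compute its actual coverage under the Rayleigh CDF. Because $\sqrt{n}\,R(n)/\sigma$ has the same distribution for every $n$, the coverage should not change with $n$. The helper names below are hypothetical.

```python
import math
from statistics import NormalDist


def coverage(n, interval, sigma=1.0):
    """P(a <= R(n) <= b) under the Rayleigh CDF 1 - exp(-n r^2 / (2 sigma^2)),
    ASSUMING independent shots with known sigma."""
    a, b = interval

    def cdf(r):
        return 0.0 if r <= 0 else 1.0 - math.exp(-n * r * r / (2.0 * sigma**2))

    return cdf(b) - cdf(a)


def normal_approx_interval(n, sigma=1.0, level=0.90):
    """Mean +/- z * SD interval, i.e. what a normal approximation would suggest."""
    scale = sigma / math.sqrt(n)
    mean = scale * math.sqrt(math.pi / 2.0)
    sd = scale * math.sqrt((4.0 - math.pi) / 2.0)
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    return mean - z * sd, mean + z * sd


for n in (2, 10, 30):
    iv = normal_approx_interval(n)
    print(f"n={n:2d}  interval=({iv[0]:.4f}, {iv[1]:.4f})  coverage={coverage(n, iv):.4f}")
```

In this sketch the coverage is identical for $n = 2$, 10 and 30 and comes out a little above 0.90 with unequal tails, so the mismatch is a fixed shape effect that larger $n$ does not remove.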

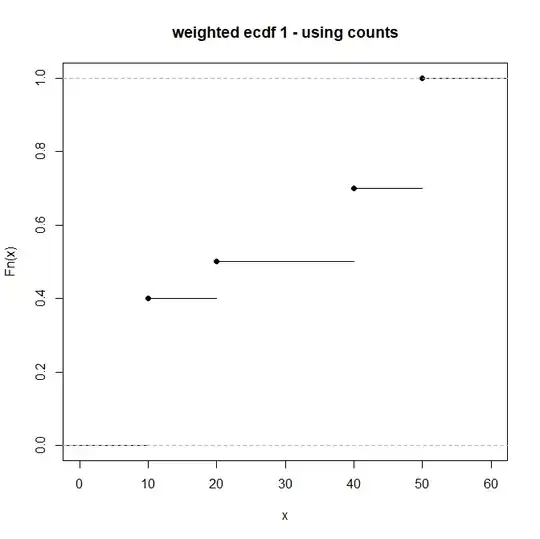

I suppose I could estimate the empirical distribution function (EDF) of $R(n)$ by Monte Carlo, since I'm only interested in $n \in [2, 30]$, and the EDF should simply scale with $\sigma$ (because $R(n)$ is a distance, it is linear in $\sigma$). But first I want to make sure I'm not missing something obvious, such as a known closed-form expression.
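Under the same assumptions, a Monte Carlo EDF over $n \in [2, 30]$ is cheap, and it can double as a check on the $\sigma$ scaling and on the closed-form Rayleigh quantiles. As a shortcut (an assumption valid for independent normal shots), this sketch draws the sample centers directly from $N(0, \sigma^2/n)$ instead of simulating individual shots; `mc_quantiles` is a hypothetical name.

```python
import numpy as np


def mc_quantiles(n, sigma, probs, trials=100_000, seed=0):
    """Empirical quantiles of R(n). ASSUMES the center of n iid N(0, sigma^2)
    shots is N(0, sigma^2 / n) per axis, so centers are sampled directly."""
    rng = np.random.default_rng(seed)
    centers = rng.normal(0.0, sigma / np.sqrt(n), size=(trials, 2))
    r = np.hypot(centers[:, 0], centers[:, 1])
    return np.quantile(r, probs)


probs = [0.05, 0.50, 0.95]
for n in range(2, 31, 7):
    q1 = mc_quantiles(n, 1.0, probs)
    q2 = mc_quantiles(n, 2.0, probs)
    # Closed-form Rayleigh quantiles for sigma = 1, for comparison.
    exact = np.sqrt(-2.0 * np.log1p(-np.array(probs))) / np.sqrt(n)
    print(n, np.round(q1, 4), np.round(q2 / q1, 4), np.round(exact, 4))
```

Reusing the same seed for $\sigma = 1$ and $\sigma = 2$ makes the ratio column equal to 2 up to rounding, which reflects that $R(n)$ scales linearly with $\sigma$.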