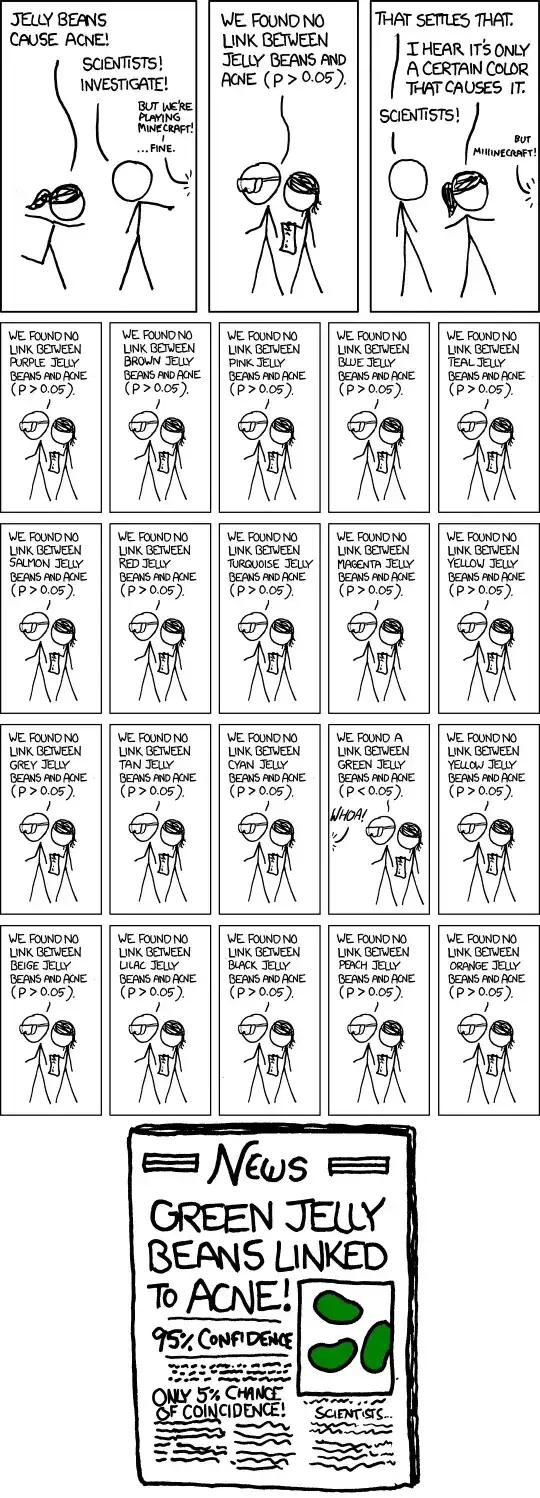

Humor is a very personal thing - some people will find it amusing, but it may not be funny to everyone - and attempts to explain what makes something funny often fail to convey the funny, even if they explain the underlying point. Indeed not all xkcd's are even intended to be actually funny. Many do, however make important points in a way that's thought provoking, and at least sometimes they're amusing while doing that. (I personally find it funny, but I find it hard to clearly explain what, exactly, makes it funny to me. I think partly it's the recognition of the way that a doubtful, or even dubious result turns into a media circus (on which see also this PhD comic), and perhaps partly the recognition of the way some research may actually be done - if usually not consciously.)

However, one can appreciate the point whether or not it tickles your funnybone.

The point is about doing multiple hypothesis tests at some moderate significance level like 5%, and then publicizing the one that came out significant. Of course, if you do 20 such tests when there's really nothing of any importance going on, the expected number of those tests to give a significant result is 1. Doing a rough in-head approximation for $n$ tests at significance level $\frac{1}{n}$, there's roughly a 37% chance of no significant result, roughly 37% chance of one and roughly 26% chance of more than one (I just checked the exact answers; they're close enough to that).

In the comic, Randall depicted 20 tests, so this is no doubt his point (that you expect to get one significant even when there's nothing going on). The fictional newspaper article even emphasizes the problem with the subhead "Only 5% chance of coincidence!". (If the one test that ended up in the papers was the only one done, that might be the case.)

Of course, there's also the subtler issue that an individual researcher may behave much more reasonably, but the problem of rampant publicizing of false positives still occurs. Let's say that these researchers only do 5 tests, each at the 1% level, so their overall chance of discovering a bogus result like that is only about five percent.

So far so good. But now imagine there are 20 such research groups, each testing whichever random subset of colors they think they have reason to try. Or 100 research groups... what chance of a headline like the one in the comic now?

So more broadly, the comic may be referencing publication bias more generally. If only significant results are trumpeted, we won't hear about the dozens of groups that found nothing for green jellybeans, only the one that did.

Indeed, that's one of the major points being made in this article, which has been in the news in the last few months (e.g. here, even though it's a 2005 article).

A response to that article emphasizes the need for replication. Note that if there were to be several replications of the study that was published, the "Green jellybeans linked to acne" result would be very unlikely to stand.

(And indeed, the hover text for the comic makes a clever reference to the same point.)