Before you set up your analysis, keep in mind what the current situation actually involves.

These meltdowns were not directly caused by the earthquake or the tsunami. They were caused by a lack of back-up power. If they had had enough back-up power, regardless of the earthquake/tsunami, they could have kept the cooling water running, and none of the meltdowns would have happened. The plant would probably be back up and running by now.

Japan, for whatever reason, has two electrical frequencies (50 Hz and 60 Hz), and you generally can't run a 50 Hz motor at 60 Hz or vice versa. So whatever frequency the plant was using/providing is the frequency the replacement power has to supply. "U.S. type" equipment runs at 60 Hz and "European type" equipment runs at 50 Hz, so keep that in mind when providing an alternative power source.
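The standard relation for the synchronous speed of an AC motor shows why the frequency matters. Here $f$ is the supply frequency and $p$ is the number of poles. For a 4-pole motor:

$$
n_s = \frac{120\, f}{p}\ \text{rpm}, \qquad
n_s\big|_{f=50\,\text{Hz},\ p=4} = 1500\ \text{rpm}, \qquad
n_s\big|_{f=60\,\text{Hz},\ p=4} = 1800\ \text{rpm}
$$

A 50 Hz pump motor on a 60 Hz supply therefore turns 20% faster. A 60 Hz motor on a 50 Hz supply turns about 17% slower. At the same voltage, the 60 Hz motor also sees roughly 20% more magnetizing flux (flux scales roughly with V/f), which risks overheating it.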

Next, that plant is in a fairly remote area. Supplying external power requires either a LONG power line from another area (days/weeks to build) or large gasoline/diesel-driven generators. Those generators are too heavy to fly in by helicopter. Trucking them in may also be a problem because roads are blocked by earthquake/tsunami damage. Bringing them in by ship is an option, but that also takes days/weeks.

The bottom line is that the risk analysis for this plant comes down to a lack of SEVERAL (not just one or two) layers of back-ups. And because this reactor is an "active design", meaning it requires power to stay safe, those layers are not a luxury; they're required.

This is an old plant. A new plant would not be designed this way.

Edit (03/19/2011) ==============================================

J Presley: To answer your question requires a short explanation of terms.

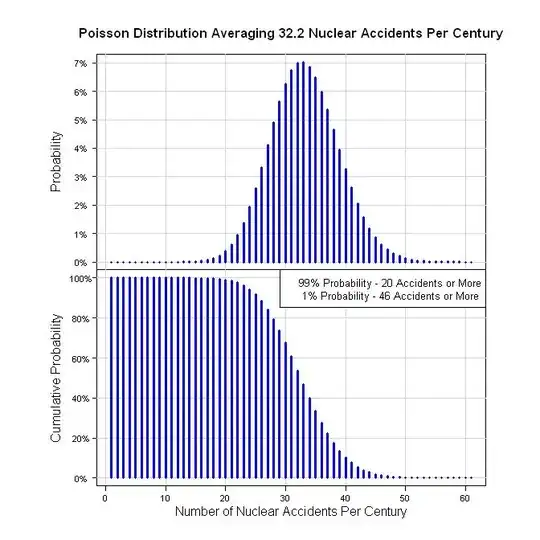

As I said in my comment, to me this is a matter of "when", not "if", and as a crude model I suggested the Poisson Distribution/Process. A Poisson Process is a series of events that happen at a constant average rate over time (or space, or some other measure). The events are independent of each other and random (no patterns). They also happen one at a time (two or more events never happen at exactly the same time). It is basically a binomial situation ("event" or "no event") taken to the limit: many short time slices, each with a small probability that the event happens. Here are some links:

http://en.wikipedia.org/wiki/Poisson_process

http://en.wikipedia.org/wiki/Poisson_distribution
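Below is a small base-R illustration of that binomial limit. It assumes the rate of 32.2 accidents per century estimated from the accident list. The century is split into `n` slices, and each slice gets a probability of 32.2/n of holding an accident. The resulting binomial probabilities are then compared with the Poisson ones. The slice counts are arbitrary illustration values.

```r
# Poisson as the limit of a binomial: split a century into n short slices,
# each with a small chance (lambda / n) of containing one accident
lambda <- 32.2          # average accidents per century
k <- 0:60               # possible numbers of accidents in a century
poisson_probs <- dpois(k, lambda)

# Largest absolute difference between Binomial(n, lambda/n) and Poisson(lambda)
max_gap <- function(n) {
  max(abs(dbinom(k, size = n, prob = lambda / n) - poisson_probs))
}

intervals <- c(100, 1000, 10000, 100000)   # 100 = one-year slices; larger n = finer slices
gaps <- sapply(intervals, max_gap)
print(data.frame(intervals, max_gap = signif(gaps, 3)))
```

The gap shrinks as the slices get finer, which is the "small probability, many trials" picture described above.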

Next, the data. Here's a list of nuclear accidents since 1952 with the INES Level:

http://en.wikipedia.org/wiki/Nuclear_and_radiation_accidents

I count 19 accidents; 9 of them state an INES Level. For those without an INES Level, all I can do is assume the level is below Level 1, so I'll assign them Level 0.

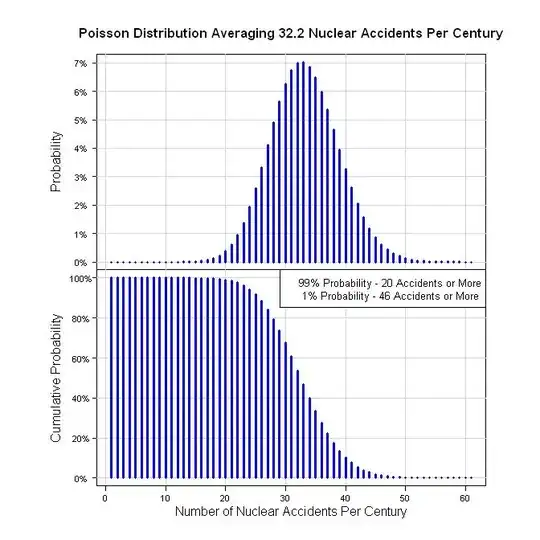

So, one way to quantify this is 19 accidents in 59 years (59 = 2011 - 1952). That's 19/59 = 0.322 accidents per year, or 32.2 accidents per 100 years. Assuming a Poisson Process gives the following graphs.

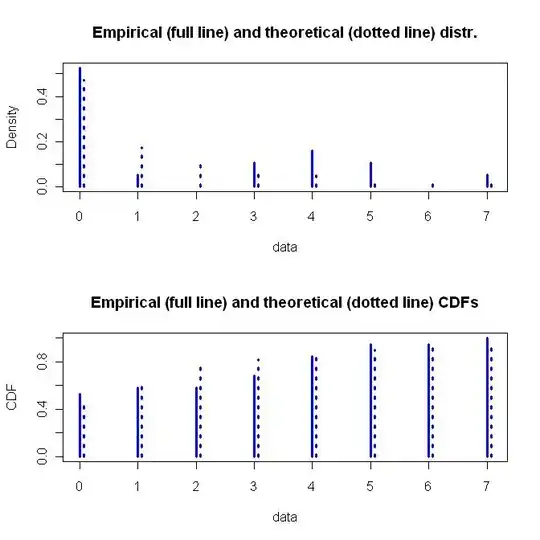

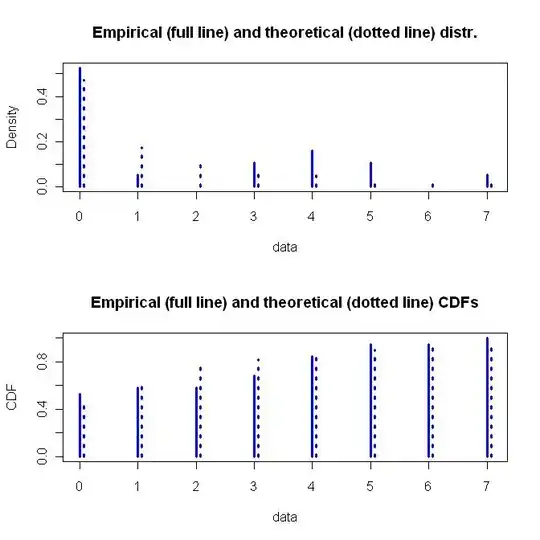
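The rate arithmetic and the "k or more" reading of the graphs can be sketched in base R. For the graphs, this assumes the rate rounded to 32.2 per century, as in the plots. `p_k_or_more` is a helper name introduced here for illustration.

```r
# Rate estimate from the accident list: 19 accidents from 1952 through 2011
n_accidents <- 19
n_years <- 2011 - 1952                       # 59 years
rate_per_year <- n_accidents / n_years       # accidents per year
rate_per_century <- 100 * rate_per_year      # accidents per 100 years

# Poisson probability of at least one accident in any single year: 1 - exp(-rate)
p_any_this_year <- 1 - dpois(0, rate_per_year)

round(c(per_year = rate_per_year, per_century = rate_per_century,
        p_any_this_year = p_any_this_year), 3)

# Hypothetical helper for reading the century graphs:
# probability of "k or more" accidents is P(X >= k) = P(X > k - 1)
p_k_or_more <- function(k, lambda = 32.2) ppois(k - 1, lambda, lower.tail = FALSE)
p_k_or_more(c(20, 46))
```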

Originally, I suggested a Lognormal, Gamma, or Exponential Distribution for the severity of the accidents. However, since the INES Levels are given as discrete values, the distribution would need to be discrete. I would suggest either the Geometric or Negative Binomial Distribution. Here are their descriptions:

http://en.wikipedia.org/wiki/Negative_binomial_distribution

http://en.wikipedia.org/wiki/Geometric_distribution

They both fit the data about equally well, which is to say not very well (lots of Level 0s, one Level 1, no Level 2s, etc.).
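The severity data can be tabulated level by level to show the lumpy shape both distributions struggle with. The data is coded the same way as in the script: 10 accidents without a stated level, assigned Level 0, plus the 9 with stated levels.

```r
# Severity data as coded in the post (unstated INES levels coded as 0)
z <- c(rep(0, 10), 1, rep(3, 2), rep(4, 3), rep(5, 2), 7)

# Count accidents at every INES level, keeping levels with zero accidents
level_counts <- table(factor(z, levels = 0:7))
print(level_counts)

# Share of accidents at each level
round(prop.table(level_counts), 3)
```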

Fit for Negative Binomial Distribution:

```
Fitting of the distribution ' nbinom ' by maximum likelihood
Parameters :
      estimate Std. Error
size  0.460949  0.2583457
mu    1.894553  0.7137625
Loglikelihood:  -34.57827   AIC:  73.15655   BIC:  75.04543
Correlation matrix:
             size           mu
size 1.0000000000 0.0001159958
mu   0.0001159958 1.0000000000
```

Fit for Geometric Distribution:

```
Fitting of the distribution ' geom ' by maximum likelihood
Parameters :
      estimate Std. Error
prob 0.3454545  0.0641182
Loglikelihood:  -35.4523   AIC:  72.9046   BIC:  73.84904
```
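The geometric fit can be checked by hand in base R without fitdistrplus, because its maximum-likelihood estimate has a closed form. The same log-likelihood, AIC, and BIC arithmetic can also be applied to the reported Negative Binomial estimates. The `aic`/`bic` helpers are written out here for illustration. They use the usual definitions, AIC = 2k - 2 logL and BIC = k ln(n) - 2 logL.

```r
# Severity data as coded in the post (unstated INES levels coded as 0)
z <- c(rep(0, 10), 1, rep(3, 2), rep(4, 3), rep(5, 2), 7)
n <- length(z)

# dgeom counts failures before the first success (support 0, 1, 2, ...), so the
# maximum-likelihood estimate has a closed form: prob = 1 / (1 + mean(z))
p_hat <- 1 / (1 + mean(z))
loglik_geom <- sum(dgeom(z, prob = p_hat, log = TRUE))

# Information criteria for a fit with k estimated parameters
aic <- function(loglik, k) 2 * k - 2 * loglik
bic <- function(loglik, k, n) k * log(n) - 2 * loglik

geom_check <- c(prob = p_hat, loglik = loglik_geom,
                AIC = aic(loglik_geom, 1), BIC = bic(loglik_geom, 1, n))

# Same arithmetic for the two-parameter Negative Binomial at the reported estimates
loglik_nb <- sum(dnbinom(z, size = 0.460949, mu = 1.894553, log = TRUE))
nb_check <- c(loglik = loglik_nb, AIC = aic(loglik_nb, 2), BIC = bic(loglik_nb, 2, n))

print(round(geom_check, 5))
print(round(nb_check, 5))
```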

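The Geometric Distribution is a simple one-parameter function (it is the Negative Binomial with size = 1), while the Negative Binomial Distribution is a more flexible two-parameter function. The Geometric actually scores slightly better on AIC and BIC (72.90 vs. 73.16, and 73.85 vs. 75.05), because the second parameter only raises the log-likelihood by about 0.87. Still, I would go for the flexibility, plus the underlying assumptions of how the Negative Binomial Distribution was derived. Below is a graph of the fitted Negative Binomial Distribution.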

Below is the code for all this stuff. If anyone finds a problem with my assumptions or coding, don't be afraid to point it out. I checked through the results, but I didn't have enough time to really chew on this.

```r
library(fitdistrplus)   # provides fitdist()

# Generate the data for the Poisson plots
k <- 0:60                                       # number of accidents per century
x <- dpois(k, 32.2)                             # P(X = k)
y <- ppois(k - 1, 32.2, lower.tail = FALSE)     # P(X >= k), i.e. "k or more"

# Cram the Poisson graphs into one plot
par(pty = "m", plt = c(0.1, 1, 0, 1), omd = c(0.1, 0.9, 0.1, 0.9))
par(mfrow = c(2, 1))
xaxisdat <- seq(0, 60, 10)

# Plot the probability graph (plotted against k so the x-axis labels line up)
plot(k, x, type = "n", main = "", xlab = "", ylab = "", xaxt = "n", yaxt = "n")
mtext(side = 3, line = 1, "Poisson Distribution Averaging 32.2 Nuclear Accidents Per Century", cex = 1.1, font = 2)
yaxisdat <- axTicks(2)                          # default y tick positions
axis(2, at = yaxisdat, labels = paste0(100 * yaxisdat, "%"), las = 2, padj = 0.5, cex.axis = 0.7, hadj = 0.5, tcl = -0.3)
mtext("Probability", 2, line = 2.3)
abline(h = yaxisdat, col = "lightgray")
abline(v = xaxisdat, col = "lightgray")
lines(k, x, type = "h", lwd = 3, col = "blue")

# Plot the cumulative ("k or more") probability graph
plot(k, y, type = "n", main = "", xlab = "", ylab = "", xaxt = "n", yaxt = "n")
yaxisdat <- axTicks(2)
axis(2, at = yaxisdat, labels = paste0(100 * yaxisdat, "%"), las = 2, padj = 0.5, cex.axis = 0.7, hadj = 0.5, tcl = -0.3)
mtext("Cumulative Probability", 2, line = 2.3)
abline(h = yaxisdat, col = "lightgray")
abline(v = xaxisdat, col = "lightgray")
lines(k, y, type = "h", lwd = 3, col = "blue")
axis(1, at = xaxisdat, padj = -2, cex.axis = 0.7, hadj = 0.5, tcl = -0.3)
mtext("Number of Nuclear Accidents Per Century", 1, line = 1)
legend("topright", legend = c("99% Probability - 20 Accidents or More", " 1% Probability - 46 Accidents or More"), bg = "white", cex = 0.8)

# Calculate the 1% and 99% values
qpois(0.01, 32.2, lower.tail = FALSE)   # upper tail: smallest k with P(X > k) <= 1%
qpois(0.99, 32.2, lower.tail = FALSE)   # lower tail: smallest k with P(X <= k) >= 1%

# Fit the severity data (unstated INES levels coded as 0)
z <- c(rep(0, 10), 1, rep(3, 2), rep(4, 3), rep(5, 2), 7)
zdis <- fitdist(z, "nbinom")
plot(zdis, lwd = 3, col = "blue")
summary(zdis)

# Geometric fit for comparison
zgeo <- fitdist(z, "geom")
summary(zgeo)
```

Edit (03/20/2011) ======================================================

J Presley: I'm sorry I couldn't finish this up yesterday. You know how it is on weekends, lots of duties.

The last step in this process is to assemble a simulation using the Poisson Distribution to determine when an event happens, and then the Negative Binomial Distribution to determine the severity of the event. You might run 1000 sets of "century chunks" to generate the 8 probability distributions for Level 0 through Level 7 events. If I get the time, I might run the simulation, but for now, the description will have to do. Maybe someone reading this stuff will run it. After that is done, you'll have a "base case" where all of the events are assumed to be INDEPENDENT.
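Below is a minimal base-R sketch of that simulation. It assumes the fitted Negative Binomial parameters from the 03/19/2011 edit and 1000 century chunks. One assumption is mine, not the post's. Because INES stops at Level 7, the severity distribution is truncated to Levels 0 through 7 and renormalized, which is equivalent to redrawing any value above 7. `sample()` with those truncated probabilities stands in for `rnbinom()`, so no redraw loop is needed.

```r
set.seed(2011)                       # any seed works; fixed for reproducibility

lambda_century <- 32.2               # Poisson rate: accidents per century
nb_size <- 0.460949                  # Negative Binomial severity fit (size)
nb_mu <- 1.894553                    # Negative Binomial severity fit (mu)
n_centuries <- 1000                  # number of simulated "century chunks"

# ASSUMPTION: INES stops at Level 7, so the Negative Binomial is truncated to
# 0..7 and renormalized (same as redrawing any value above 7)
p_level <- dnbinom(0:7, size = nb_size, mu = nb_mu)
p_level <- p_level / sum(p_level)

# counts[i, j + 1] = number of Level j events in simulated century i
counts <- matrix(0L, nrow = n_centuries, ncol = 8,
                 dimnames = list(NULL, paste0("Level", 0:7)))

for (i in seq_len(n_centuries)) {
  n_events <- rpois(1, lambda_century)                               # how many events this century
  severity <- sample(0:7, n_events, replace = TRUE, prob = p_level)  # one severity per event
  counts[i, ] <- tabulate(severity + 1L, nbins = 8)                  # shift by 1: tabulate counts 1..8
}

# Per-level summaries across the simulated centuries
round(colMeans(counts), 2)           # average events per century at each level
round(colMeans(counts > 0), 3)       # chance of at least one such event per century
```

Each column of `counts` is one of the eight per-level distributions. A histogram of, say, `counts[, "Level7"]` shows how many Level 7 events a century might hold.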

Obviously, the next step is to relax one or more of the above assumptions. An easy place to start is with the Poisson Distribution. It assumes that all events are 100% independent and that they occur at a constant average rate. You can change that in all sorts of ways; for example, a non-homogeneous Poisson Process lets the rate vary over time. Here are some links on Non-homogeneous Poisson Processes:

http://www.math.wm.edu/~leemis/icrsa03.pdf

http://filebox.vt.edu/users/pasupath/papers/nonhompoisson_streams.pdf
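Below is a small sketch of a non-homogeneous Poisson Process, using the thinning method of Lewis and Shedler. It first simulates a homogeneous process at the peak rate, then keeps each candidate time t with probability rate(t)/rate_max. Events stay independent, but the rate changes over time. The linearly declining `rate_fn` is purely hypothetical; it is chosen so that it still averages 32.2 accidents per century and is not fitted to any data.

```r
set.seed(1)

# HYPOTHETICAL rate function: accidents per year declining linearly over a
# century, chosen so that it still integrates to 32.2 accidents per 100 years
rate_fn <- function(t) 0.422 - 0.002 * t      # t in years, 0 <= t <= 100
rate_max <- 0.422                             # upper bound of rate_fn on [0, 100]

# Lewis-Shedler thinning: homogeneous candidates at rate_max, each kept with
# probability rate_fn(t) / rate_max
simulate_nhpp <- function(horizon = 100) {
  n_candidates <- rpois(1, rate_max * horizon)
  t <- sort(runif(n_candidates, 0, horizon))
  t[runif(n_candidates) < rate_fn(t) / rate_max]
}

one_century <- simulate_nhpp()
head(round(one_century, 1))                   # accident times (years) in one century

# Accidents per century; expected value = integral of rate_fn over [0, 100] = 32.2
century_counts <- replicate(2000, length(simulate_nhpp()))
mean(century_counts)

# First vs. second half-century; expected values 18.6 and 13.6
halves <- replicate(2000, {
  t <- simulate_nhpp()
  c(sum(t < 50), sum(t >= 50))
})
rowMeans(halves)
```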

The same idea applies to the Negative Binomial Distribution. Combining the two will lead you down all sorts of paths. Here are some examples:

http://surveillance.r-forge.r-project.org/

http://www.m-hikari.com/ijcms-2010/45-48-2010/buligaIJCMS45-48-2010.pdf

http://www.michaeltanphd.com/evtrm.pdf

The bottom line is that you asked a question whose answer depends on how far you want to take it. My guess is that someone, somewhere, will be commissioned to generate "an answer" and will be surprised at how long the work takes.

Edit (03/21/2011) ====================================================

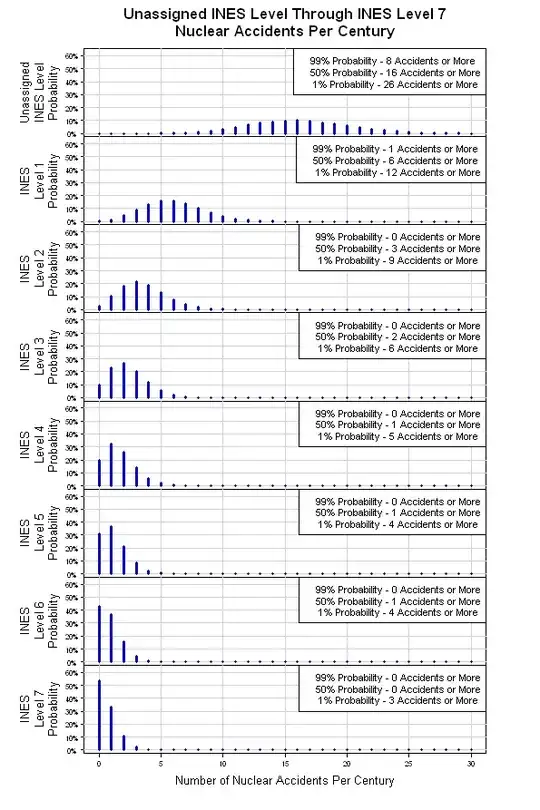

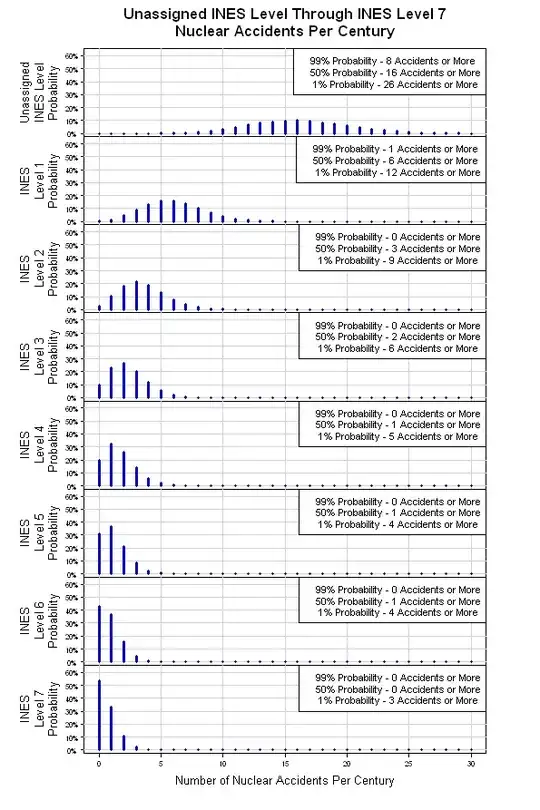

I had a chance to slap together the above-mentioned simulation. The results are shown above. Starting from the original Poisson Distribution, the simulation provides eight Poisson Distributions, one for each INES Level. As the severity level rises (INES Level Number rises), the number of expected events per century drops. This may be a crude model, but it's a reasonable place to start.
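The per-level Poisson Distributions can also be computed directly, as a cross-check on a simulation like this one. If each accident independently gets a severity level, a Poisson Process splits (thins) into independent Poisson Processes, one per level, each with rate λ·P(level). The sketch below assumes the Negative Binomial parameters from the 03/19/2011 fit. It also truncates them to Levels 0 through 7, which is my assumption since INES stops at 7.

```r
lambda_century <- 32.2
nb_size <- 0.460949
nb_mu <- 1.894553

# ASSUMPTION: severity follows the fitted Negative Binomial truncated to INES 0..7
p_level <- dnbinom(0:7, size = nb_size, mu = nb_mu)
p_level <- p_level / sum(p_level)
names(p_level) <- paste0("Level", 0:7)

# Poisson thinning: Level j events form their own Poisson process with rate lambda * p_j
rate_level <- lambda_century * p_level
rate_at_or_above <- rev(cumsum(rev(rate_level)))   # Level j or worse

summary_table <- data.frame(
  events_per_century = rate_level,
  p_at_least_one = 1 - exp(-rate_level),
  p_at_least_one_at_or_above = 1 - exp(-rate_at_or_above)
)
round(summary_table, 3)
```

With size below 1, the Negative Binomial probabilities fall at every step, so the expected events per century fall as the level rises.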