These wise gentlemen,

Kotz, S., Kozubowski, T. J., & Podgorski, K. (2001). The Laplace Distribution and Generalizations: A Revisit with Applications to Communications, Economics, Engineering, and Finance (No. 183). Springer.

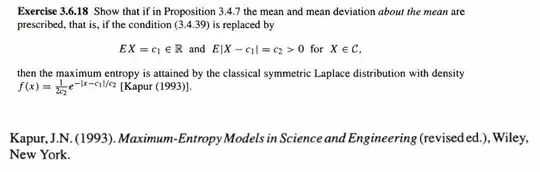

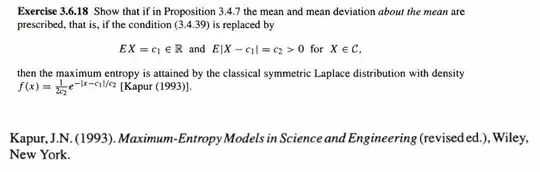

challenge us with an exercise:

The proof can follow the information-theoretic proof that the Normal distribution has maximum entropy for a given mean and variance. Specifically:

Let $f(x)$ be the above Laplace density, with location $c_1$ and scale $c_2$, and let $g(x)$ be any other density with the same mean and the same mean absolute deviation.

This means that the following equality holds:

$$E_g(|X-c_1|)=\int g(x)|x-c_1|dx=c_2 =\int f(x)|x-c_1|dx =E_f(|X-c_1|)\qquad [1]$$
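A quick numerical check of constraint $[1]$ may help fix ideas. The Python sketch below uses the hypothetical values $c_1 = 1$, $c_2 = 2$ (the constants `C1`, `C2`, not from the source) and takes as one example of $g$ a Normal density centred at $c_1$ whose standard deviation `SIGMA` $= c_2\sqrt{\pi/2}$ is chosen so that $E_g|X-c_1| = c_2$, using the standard fact $E|X-\mu| = \sigma\sqrt{2/\pi}$ for a Normal. The integrals are approximated with a plain midpoint rule (`integrate`, a helper introduced only for illustration) on a truncated range, so the results are approximate.

```python
import math

# Hypothetical example parameters (not from the source): location c1 and scale c2.
C1, C2 = 1.0, 2.0

def laplace_pdf(x, c1=C1, c2=C2):
    """Laplace density f(x) = exp(-|x - c1| / c2) / (2 c2)."""
    return math.exp(-abs(x - c1) / c2) / (2.0 * c2)

def normal_pdf(x, mu, sigma):
    """Normal density with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(func, a, b, n=100_000):
    """Composite midpoint rule for the integral of func over [a, b]."""
    h = (b - a) / n
    return h * sum(func(a + (k + 0.5) * h) for k in range(n))

# A Normal with mean c1 has E|X - c1| = sigma * sqrt(2/pi); choose sigma so this equals c2.
SIGMA = C2 * math.sqrt(math.pi / 2.0)

lo, hi = C1 - 40 * C2, C1 + 40 * C2  # wide enough that the truncated tails are negligible
mad_f = integrate(lambda x: laplace_pdf(x) * abs(x - C1), lo, hi)
mad_g = integrate(lambda x: normal_pdf(x, C1, SIGMA) * abs(x - C1), lo, hi)
print(mad_f, mad_g)  # both approximately 2.0 = c2
```

Any other $g$ tuned the same way would do; the Normal is just a convenient member of the constraint set.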

Now consider the Kullback-Leibler divergence between the two densities:

$$0\le D_{KL}(g||f) = \int g(x)\ln\left(\frac {g(x)}{f(x)}\right)dx = \int g(x)\ln g(x)dx -\int g(x)\ln f(x)dx \qquad [2]$$

The first integral is the negative of the (differential) entropy of $g$; denote it $-h(g)$. Writing out the Laplace pdf explicitly, the second integral is

$$\int g(x)\ln[f(x)]dx = \int g(x)\ln\left[\frac{1}{2c_2}\exp\left\{-\frac 1{c_2} |x-c_1|\right\}\right]dx $$

$$=\ln\left[\frac{1}{2c_2}\right]\int g(x)dx- \frac 1{c_2}\int g(x)|x-c_1|dx $$

The first integral equals unity because $g$ is a density, and the second equals $c_2$ by eq. $[1]$, so we obtain

$$\int g(x)\ln[f(x)]dx = -\ln\left[2c_2\right] - \frac 1{c_2}\int f(x)|x-c_1|dx = -(\ln\left[2c_2\right] +1) $$

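But this is the negative of the differential entropy of the Laplacian: repeating the same computation with $g$ replaced by $f$ (which trivially satisfies eq. $[1]$) gives $\int f(x)\ln f(x)dx = -(\ln[2c_2]+1)$. Denote it $-h(f)$, so that $h(f) = 1+\ln(2c_2)$.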
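To see numerically that the cross term $\int g\ln f\,dx$ does not depend on which admissible $g$ we pick, here is a sketch with the hypothetical values $c_1 = 1$, $c_2 = 2$. It evaluates the cross term for a Normal $g$ with mean $c_1$ and $\sigma = c_2\sqrt{\pi/2}$, for a uniform $g$ on $[c_1-2c_2,\,c_1+2c_2]$ (both chosen so that eq. $[1]$ holds), and for $g=f$ itself, and compares each with $-h(f) = -(\ln[2c_2]+1)$. The helper names and the midpoint-rule integration are illustrative assumptions, not part of the original argument.

```python
import math

C1, C2 = 1.0, 2.0  # hypothetical example parameters (not from the source)

def log_laplace(x):
    """ln f(x) = -ln(2 c2) - |x - c1| / c2 for the Laplace density."""
    return -math.log(2.0 * C2) - abs(x - C1) / C2

def laplace_pdf(x):
    return math.exp(log_laplace(x))

def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(func, a, b, n=100_000):
    """Composite midpoint rule on [a, b]."""
    h = (b - a) / n
    return h * sum(func(a + (k + 0.5) * h) for k in range(n))

lo, hi = C1 - 40 * C2, C1 + 40 * C2
sigma = C2 * math.sqrt(math.pi / 2.0)  # Normal g with E_g|X - c1| = c2
half = 2.0 * C2                         # uniform g on [c1 - 2c2, c1 + 2c2] also has E_g|X - c1| = c2

# Cross term: integral of g(x) * ln f(x) for three densities satisfying eq. [1].
cross = {
    "normal g": integrate(lambda x: normal_pdf(x, C1, sigma) * log_laplace(x), lo, hi),
    "uniform g": integrate(lambda x: log_laplace(x) / (2.0 * half), C1 - half, C1 + half),
    "g = f": integrate(lambda x: laplace_pdf(x) * log_laplace(x), lo, hi),
}
h_f = 1.0 + math.log(2.0 * C2)  # closed-form Laplace entropy
for name, value in cross.items():
    print(f"{name}: {value:.6f}")  # each approximately -h_f, i.e. about -2.3863
```

The `g = f` row is exactly $-h(f)$, which is why the cross term for every admissible $g$ can be identified with the negative entropy of the Laplacian.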

Inserting these results into eq. $[2]$ we have

$$0\le D_{KL}(g||f) = -h(g) - (-h(f)) \Rightarrow h(g) \le h(f)$$

Since $g$ was arbitrary, this proves that the above Laplace density has maximum entropy among all densities satisfying these constraints.
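As a numerical sanity check of the final identity $D_{KL}(g||f) = h(f) - h(g)$, the sketch below takes one concrete admissible $g$: a Normal with mean $c_1$ and $\sigma = c_2\sqrt{\pi/2}$, so that it satisfies eq. $[1]$, with the hypothetical values $c_1 = 1$, $c_2 = 2$. It computes the divergence by midpoint-rule integration and compares it with the closed forms $h(f) = 1+\ln(2c_2)$ and $h(g) = \tfrac12\ln(2\pi e\sigma^2)$. Constants and helper names are assumptions made for illustration.

```python
import math

C1, C2 = 1.0, 2.0  # hypothetical example parameters (not from the source)
SIGMA = C2 * math.sqrt(math.pi / 2.0)  # Normal g with E_g|X - c1| = c2

def log_laplace(x):
    """ln f(x) for the Laplace density with location C1 and scale C2."""
    return -math.log(2.0 * C2) - abs(x - C1) / C2

def log_normal(x):
    """ln g(x) for the Normal density with mean C1 and standard deviation SIGMA."""
    z = (x - C1) / SIGMA
    return -0.5 * z * z - math.log(SIGMA * math.sqrt(2.0 * math.pi))

def integrate(func, a, b, n=100_000):
    """Composite midpoint rule on [a, b]."""
    h = (b - a) / n
    return h * sum(func(a + (k + 0.5) * h) for k in range(n))

lo, hi = C1 - 40 * C2, C1 + 40 * C2

# D_KL(g || f) = integral of g * (ln g - ln f), evaluated numerically.
kl = integrate(lambda x: math.exp(log_normal(x)) * (log_normal(x) - log_laplace(x)), lo, hi)

# Closed-form entropies: h(f) = 1 + ln(2 c2), h(g) = 0.5 * ln(2 pi e sigma^2).
h_f = 1.0 + math.log(2.0 * C2)
h_g = 0.5 * math.log(2.0 * math.pi * math.e * SIGMA ** 2)

print(kl, h_f - h_g)  # both approximately 0.0484, and positive
```

The gap is small here (about 0.048 nats), but it is positive, as the inequality requires.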