What advantage does creating the Laplacian Matrix from the Similarity Matrix bring us in spectral clustering? Why do we create it?

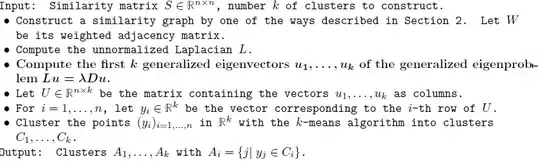

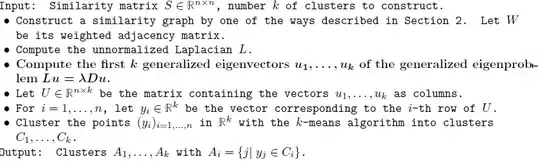

Here is the algorithm:

- A Tutorial on Spectral Clustering (pp.5-6)

What advantage does creating the Laplacian Matrix from the Similarity Matrix bring us in spectral clustering? Why do we create it?

Here is the algorithm:

The set of eigenvalues of a adjacency matrix is the spectrum of the corresponding graph. The spectrum is a very important factor in the study of similarity between two graphs. If two graphs are similar, then the adjacency matrix of one can be viewed as a permutation operator implemented on the other, thus their eigenvalues are the same (note that two matrices have the same eigenvalues does not necessarily lead to the two graphs are similar).

So if we map each graph node to a value by assigning a function $f$ to them, the eigenvectors of the adjacency matrix $A$ can be a good option (this is also why eigenvectors are calculated in spectral clustering procedures). And it can also be written in a matrix form

$$\mathbf{f}^T \mathbf{A} \mathbf{f} = \sum_{e_{ij}} f(i) f(j)$$

If we consider the incidence matrix altogether, such graph mapping will be $f\rightarrow\nabla f$:

$$(\nabla \mathbf{f})(e_{ij}) = f(v_j)-f(v_i)$$

this maps on the edges, and $L = D - A$, is just $\nabla ^T \nabla$, will map on the graph by mapping each node again:

$$(\mathbf{L}\mathbf{f})(v_i) = \sum_{v_j\sim v_i} w_{ij}\big(f(v_i)-f(v_j)\big)$$

I will try to to give a more accessible (complementary) answer.

Spectral clustering has two steps. First you determine neighborhood edges between your feature vectors (telling you whether two such vectors are similar or not), yielding a graph.

Then you take this graph and you "embed" it, or "rearrange it" in a Euclidean space of d dimensions. Each dimension of this embedding is trying to give a solution to an optimization problem. Informally, this optimization problem is to minimize the number of neighboring graph nodes that appear on opposite sides of 0 (subject to a space filling constraint). Thus each node tends to end up next to its neighbors (as determined by the graph structure), which can be a friendlier space for K-means to work in.

If you carry the math through, it turns out that the eigenvectors of the Laplace L give a solution to a "relaxed" version of this optimization problem - that's it.