I'm trying to understand how the parameters are estimated in ARIMA modeling/Box Jenkins (BJ). Unfortunately none of the books that I have encountered describes the estimation procedure such as Log-Likelihood estimation procedure in detail. Following is the Log-Likelihood for ARMA from any standard textbooks: $$ LL(\theta)=-\frac{n}{2}\log(2\pi) - \frac{n}{2}\log(\sigma^2) - \sum\limits_{t=1}^n\frac{e_t^2}{2\sigma^2} $$

I want to learn the ARIMA/BJ estimation by doing it myself. So I used $R$ to write a code for estimating ARMA by hand. Below is what I did in $R$,

- I simulated ARMA (1,1)

- Wrote the above equation as a function

- Used the simulated data and the optim function to estimate AR and MA parameters.

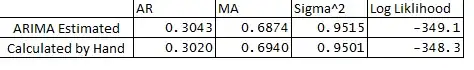

- I also ran the ARIMA in the stats package and compared the ARMA parameters from what I did by hand. Below is the comparison:

**Below are my questions:

- Why is there a slight difference between the estimated and calculated variables ?

- Does ARIMA function in R backcasts or does the estimation procedure differently than what is outlined below in my code?

- I have assigned e1 or error at observation 1 as 0, is this correct ?

- Also is there a way to estimate confidence bounds of forecasts using the hessian of the optimization ?

Thanks so much for your help as always.

Below is the code:

## Load Packages

library(stats)

library(forecast)

set.seed(456)

## Simulate Arima

y <- arima.sim(n = 250, list(ar = 0.3, ma = 0.7), mean = 5)

plot(y)

## Optimize Log-Likelihood for ARIMA

n = length(y) ## Count the number of observations

e = rep(1, n) ## Initialize e

logl <- function(mx){

g <- numeric

mx <- matrix(mx, ncol = 4)

mu <- mx[,1] ## Constant Term

sigma <- mx[,2]

rho <- mx[,3] ## AR coeff

theta <- mx[,4] ## MA coeff

e[1] = 0 ## Since e1 = 0

for (t in (2 : n)){

e[t] = y[t] - mu - rho*y[t-1] - theta*e[t-1]

}

## Maximize Log-Likelihood Function

g1 <- (-((n)/2)*log(2*pi) - ((n)/2)*log(sigma^2+0.000000001) - (1/2)*(1/(sigma^2+0.000000001))*e%*%e)

##note: multiplying Log-Likelihood by "-1" in order to maximize in the optimization

## This is done becuase Optim function in R can only minimize, "X"ing by -1 we can maximize

## also "+"ing by 0.000000001 sigma^2 to avoid divisible by 0

g <- -1 * g1

return(g)

}

## Optimize Log-Likelihood

arimopt <- optim(par=c(10,0.6,0.3,0.5), fn=logl, gr = NULL,

method = c("L-BFGS-B"),control = list(), hessian = T)

arimopt

############# Output Results###############

ar1_calculated = arimopt$par[3]

ma1_calculated = arimopt$par[4]

sigmasq_calculated = (arimopt$par[2])^2

logl_calculated = arimopt$val

ar1_calculated

ma1_calculated

sigmasq_calculated

logl_calculated

############# Estimate Using Arima###############

est <- arima(y,order=c(1,0,1))

est