I am trying to estimate the precision $\tau$ of a normal distribution with either WinBUGS or OpenBUGS:

$c \sim \text{normal}(\mu,\tau)$

$\mu \leftarrow \lambda \cdot t^{-\beta}$

$\tau \sim \text{gamma}(0.1,0.001)$
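To see what these priors imply, here is a minimal Python sketch that computes the prior mean and standard deviation of $\tau$. It assumes that BUGS's `dgamma(r, mu)` uses shape `r` and rate `mu`, which is how the WinBUGS manual defines it. The helper `gamma_prior_moments` is hypothetical and exists only for this illustration.

```python
import math


def gamma_prior_moments(shape: float, rate: float) -> tuple[float, float]:
    """Mean and standard deviation of a Gamma(shape, rate) distribution.

    Assumes BUGS's dgamma(r, mu) has shape r and rate mu, so these are the
    prior mean and prior sd of tau implied by tau ~ dgamma(r, mu).
    """
    mean = shape / rate
    sd = math.sqrt(shape) / rate
    return mean, sd


for shape, rate in [(0.001, 0.001), (0.1, 0.001)]:
    mean, sd = gamma_prior_moments(shape, rate)
    print(f"gamma({shape}, {rate}): prior mean of tau = {mean:.1f}, prior sd = {sd:.1f}")
```

Under that assumption, gamma(0.001, 0.001) has a prior mean of 1 and a prior sd of about 31.6. gamma(0.1, 0.001) has a prior mean of 100 and a prior sd of about 316, so it still allows very large precisions a priori.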

I first tried the standard vague prior $\tau\sim \text{gamma}(0.001,0.001)$, but both WinBUGS and OpenBUGS stop with an error message. WinBUGS essentially tells me to increase the parameters of the gamma prior:

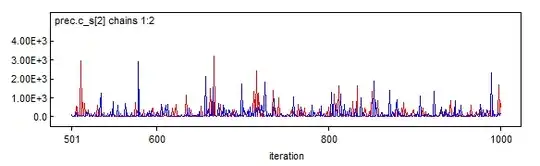

However, if I do that, the MCMC chains of $\tau$ behave strangely, even when I run 200,000 iterations with thin=200:

As you can see, the chains have spikes of up to 3000, and in other runs even higher. I'm not very experienced, but I don't think this should happen. The parameter $c$ lies in the range $[0, 1]$, so I'm worried about precision estimates above 400.
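For reference, a normal precision and its standard deviation are related by $\sigma = 1/\sqrt{\tau}$. This short sketch converts the values mentioned above. The helper name `precision_to_sd` is made up for illustration.

```python
import math


def precision_to_sd(tau: float) -> float:
    """Standard deviation implied by a normal precision tau = 1 / sigma**2."""
    return 1.0 / math.sqrt(tau)


for tau in (400, 3000):
    print(f"tau = {tau:>4}: sigma = {precision_to_sd(tau):.4f}")
```

So $\tau = 400$ corresponds to a residual sd of 0.05 on the scale of $c$, and $\tau = 3000$ corresponds to roughly 0.018.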

This question seems to address my issue. It says that Gibbs samplers (like the one in WinBUGS) have trouble estimating the precision of a normal distribution when the parameters of the gamma prior are close to zero. That is not exactly my case, but my parameters are fairly small. Adjusting the gamma parameters does help, but I thought the whole point of a gamma prior with small parameters was to approximate a flat, uniform-like prior.
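To make the Gibbs-sampler point concrete, here is a minimal sketch of the textbook conjugate update for $\tau$ with the mean held fixed. It assumes a gamma prior with shape `a` and rate `b` (the BUGS `dgamma` convention) and the normal likelihood from the model above. Whether WinBUGS uses exactly this update for a given model is an assumption, and the example values for $\lambda$, $\beta$ and the noise level are hypothetical. Under those assumptions, the full conditional is $\text{Gamma}\left(a + n/2,\; b + \tfrac{1}{2}\sum_i (c_i - \mu_i)^2\right)$. When the current $\lambda$ and $\beta$ give very small residuals and `b` is tiny, the rate is small and the draw of $\tau$ can be very large. That is one possible mechanism behind spikes, not a diagnosis of this particular model.

```python
import numpy as np


def sample_tau_full_conditional(c, mu, a, b, rng):
    """One Gibbs draw of the precision tau given data c and means mu.

    Assumes tau ~ Gamma(a, b) with shape a and rate b (as in BUGS dgamma)
    and c[i] ~ Normal(mu[i], precision tau). By conjugacy the full
    conditional is Gamma(a + n/2, b + sum((c - mu)**2) / 2).
    """
    c = np.asarray(c, dtype=float)
    mu = np.asarray(mu, dtype=float)
    shape = a + c.size / 2.0
    rate = b + 0.5 * np.sum((c - mu) ** 2)
    # NumPy's gamma sampler takes a scale parameter, i.e. 1 / rate.
    return rng.gamma(shape, 1.0 / rate)


# Hypothetical example: lambda = 0.8, beta = 0.5 and noise sd 0.05 are made up.
rng = np.random.default_rng(1)
t = np.linspace(1.0, 10.0, 20)
mu = 0.8 * t ** -0.5
c = mu + rng.normal(0.0, 0.05, size=t.size)  # true tau = 1 / 0.05**2 = 400
draws = [sample_tau_full_conditional(c, mu, 0.1, 0.001, rng) for _ in range(5000)]
print(np.mean(draws), np.max(draws))
```

In the real model $\mu$ is recomputed from the current $\lambda$ and $\beta$ at every iteration, so the residual sum of squares, and with it the rate of this conditional, changes from draw to draw.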

I have also tried estimating the precision indirectly, by putting a uniform prior on the standard deviation and deriving $\tau$ from it, but the result was more or less the same.
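A uniform prior on the standard deviation still implies a heavy-tailed prior on $\tau = 1/\sigma^2$, because $\tau$ grows without bound as $\sigma \to 0$. The following sketch simulates that implied prior. The upper bound of 1.0 for $\sigma$ is a hypothetical choice, picked only because $c$ lies in $[0, 1]$. For $\sigma \sim \text{Uniform}(0, 1)$ we get $P(\tau > x) = x^{-1/2}$ for $x \ge 1$. That gives a median of 4 and 90% and 99% quantiles of 100 and 10,000.

```python
import numpy as np


def implied_tau_from_uniform_sd(upper: float, n_draws: int, seed: int = 0) -> np.ndarray:
    """Draw sigma ~ Uniform(0, upper) and return the implied tau = 1 / sigma**2."""
    rng = np.random.default_rng(seed)
    sigma = rng.uniform(0.0, upper, size=n_draws)
    return 1.0 / sigma**2


tau = implied_tau_from_uniform_sd(upper=1.0, n_draws=100_000)
# Analytically: P(tau > x) = 1 / sqrt(x), so the quantiles are about 4, 100, 10000.
print(np.quantile(tau, [0.5, 0.9, 0.99]))
```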

Any help is highly appreciated.