Yes, there is a formula in the specific situation given. This situation concerns $n$ independent standard Normal variables $\mathrm{X}= (X_1, \ldots, X_n)$ that model a sample of size $n.$ Standardization converts them to $Z_1, \ldots, Z_n$ where

$$Z_i = \frac{X_i - m}{s}$$

and, as usual,

$$m = \frac{1}{n}\sum X_i$$

is the sample mean and

$$s^2 = \frac{1}{n-1} \sum (X_i - m)^2$$

is the sample variance. The question concerns the distribution of

$$Y_k = \sum_{i=1}^k Z_i.$$

Algebra permits us to write

$$s Y_k = \frac{n-k}{n}\sum_{i=1}^k X_i - \frac{k}{n}\sum_{j=k+1}^n X_j = \mathrm{X} \cdot \omega$$

where $\omega = (\omega_1, \ldots, \omega_n)$ is a constant vector whose first $k$ entries are $(n-k)/n$ and last $n-k$ entries are $-k/n.$

The analysis at Distribution of scalar products of two random unit vectors in $D$ dimensions shows the distribution of $Y_k$ must be a multiple of $2U-1$ where $U$ is a Beta$((n-2)/2, (n-2)/2)$ variable. To find the value of this multiple, we need only compare variances.

The variance of $Y_k$ is readily computed as

$$\operatorname{Var}(Y_k) = ||\omega||^2 = k\left(\frac{n-k}{n}\right)^2 + (n-k)\left(\frac{-k}{n}\right)^2 = \frac{k(n-k)}{n}$$

whereas the variance of $2U-1$ is $1/(n-1).$ Thus,

$Y_k$ has the distribution of the random variable $$\frac{2U-1}{\sqrt{1/(n-1)}}\sqrt{k(n-k)/n} = (2U-1)\sqrt{\frac{k(n-k)(n-1)}{n}}$$ where $$U \sim \text{Beta}\left(\frac{n}{2}-1, \frac{n}{2}-1\right)$$ for $n \gt 2$ and $0 \le k \le n.$

Equivalently, dividing $Y_k$ by $\sqrt{k(n-k)(n-1)/n}$ produces a variable with the same distribution as $2U-1$ provided $1 \le k \lt n.$

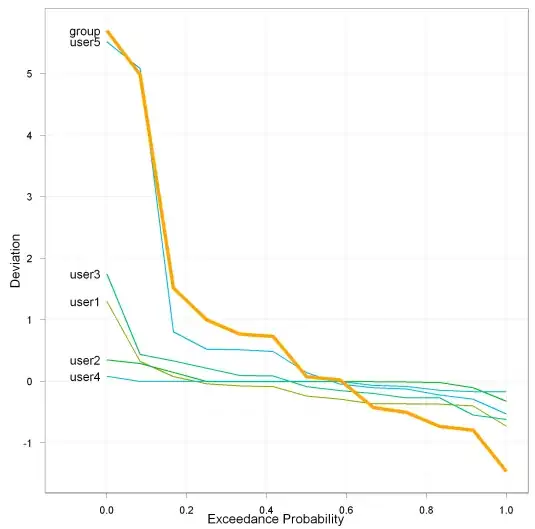

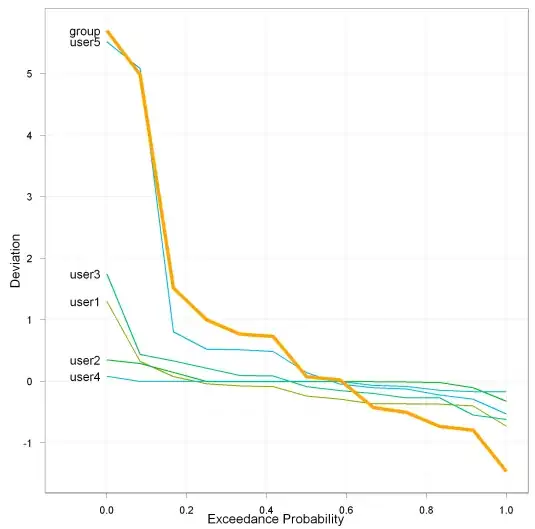

To confirm this conclusion, I simulated this construction, inspected histograms of the scaled versions of $Y_k,$ and compared those histograms to the distribution of $2U-1$ by means of a $\chi^2$ test. The figure displays the results of one simulation of $10,000$ independent datasets for $n=7.$ The headings give the values of $k$ from $1$ through $n-1.$ The red curves plot the density function of $2U-1.$

The R code that produced this simulation will create many other simulations by varying the values of n, n.sim, and the random seed in the first three lines.

n <- 7

n.sim <- 1e4

set.seed(17)

#

# Simulate samples of size `n` (as columns of `x`) and standardize them.

#

x <- matrix(rnorm(n*n.sim), n)

x <- apply(x, 2, scale)

#

# Compute sums of k=1, 2, ..., n of the standardized samples and normalize

# them to have variance 1/(n-1).

#

k <- 1:n

y <- apply(x, 2, cumsum) / sqrt(k * (n-k) / n) / sqrt(n-1)

apply(y, 1, var) * (n-1) # Check that variance looks like 1/(n-1)

apply(y, 1, max) # Verify range is within [-1, 1]

#

# Plot histograms of the normalized sums and compare them to the

# Beta(n/2-1, n/2-1) distribution supported on [-1,1].

#

breaks <- seq(-1, 1, 0.1)

d.max <- dbeta(1/2, (n-2)/2, (n-2)/2) / 2

n.rows <- floor(sqrt((n-1)))

n.cols <- ceiling((n-1) / n.rows)

par(mfrow=c(n.rows, n.cols))

invisible(lapply(1:(n-1), function(k) {

#-- Compute a histogram

h <- hist(y[k, ], breaks=breaks, plot=FALSE)

#-- Conduct a chi-squared test

freq <- diff(pbeta((breaks+1)/2, (n-2)/2, (n-2)/2)) * n.sim

threshold <- min(freq[freq >= 5])

groups <- 1:length(freq)

groups[which(freq <= threshold)] <- 0

X <- aggregate(data.frame(Count=h$counts, Frequency=freq), list(groups), sum)

a <- with(X, chisq.test(Count, p=Frequency/n.sim))

#-- Plot the histogram and the comparison distribution

plot(h, freq=FALSE, ylim=c(0, max(d.max, max(h$density))),

xlab="Value", main=k,

sub=paste0("Chi-squared(", a$parameter["df"], "): p = ",

format(a$p.value, digits=2)))

curve(dbeta((x+1)/2, (n-2)/2, (n-2)/2) / 2, add=TRUE, col="Red", lwd=2)

}))

par(mfrow=c(1,1))