@Tristan: Hope you don't mind my reworking of your answer; I am working on how to make the general point as transparent as possible.

To me, the primary insight in statistics is to conceptualize repeated observations that vary as being generated by a probability generating model, such as Normal(mu, sigma). Early in the 1800s, the probability generating models entertained were usually just for errors of measurement, and the roles of parameters (such as mu and sigma) and of priors for them were muddled. Frequentist approaches took the parameters as fixed and unknown, so their probability generating models involved only possible observations. Bayesian approaches (with proper priors) have probability generating models for both possible unknown parameters and possible observations. Put more generally, these joint probability generating models comprehensively account for all of the possible unknowns (such as parameters) and possible knowns (such as observations). As in the link from Rubin you gave, Bayes' theorem conceptually says: keep only those possible unknowns that (in the simulation) actually generated possible knowns equal (or very close) to the actual knowns (in your study).
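The two-stage conditioning described above can be sketched as a small simulation. This is a minimal illustration under assumptions not in the original: a hypothetical binomial experiment (7 successes in 10 trials) with a Uniform(0, 1) prior on the success probability. Matching is exact here because the knowns are discrete.

```python
import random
from statistics import mean

def two_stage_posterior(observed_successes, n_trials, n_sims=50_000, seed=1):
    """Keep only the simulated unknowns whose simulated knowns equal the actual knowns."""
    rng = random.Random(seed)
    kept = []
    for _ in range(n_sims):
        # Stage 1: draw a possible unknown (success probability) from a Uniform(0, 1) prior.
        theta = rng.random()
        # Stage 2: draw possible knowns (a count of successes) given that unknown.
        simulated = sum(rng.random() < theta for _ in range(n_trials))
        # Condition: keep theta only if the simulated count equals the observed count.
        if simulated == observed_successes:
            kept.append(theta)
    return kept

posterior_draws = two_stage_posterior(observed_successes=7, n_trials=10)
print(len(posterior_draws), round(mean(posterior_draws), 3))
```

Because the prior is conjugate in this hypothetical setup, the kept draws can be checked against the exact Beta(8, 4) posterior, whose mean is 8/12.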

This was actually depicted very clearly by Galton with a two-stage quincunx in the late 1800s. See figure 5 in:

> Stigler, Stephen M. 2010. Darwin, Galton and the statistical enlightenment. Journal of the Royal Statistical Society: Series A 173(3):469-482.

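It is equivalent, but perhaps more transparent, to write

$$\text{posterior} = \text{prior}(\text{possible unknowns} \mid \text{possible knowns} = \text{knowns})$$

rather than

$$\text{posterior} \propto \text{prior}(\text{possible unknowns}) \times p(\text{possible knowns} = \text{knowns} \mid \text{possible unknowns})$$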
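A tiny discrete example can confirm that the two formulations agree exactly. The three candidate success probabilities, their equal prior weights, the number of trials and the observed count are all hypothetical choices for illustration; exact `Fraction` arithmetic avoids any rounding.

```python
from fractions import Fraction
from math import comb

# Hypothetical setup: three possible unknowns (success probabilities) with equal
# prior weight; the possible knowns are the number of successes in n = 4 trials.
unknowns = [Fraction(1, 4), Fraction(1, 2), Fraction(3, 4)]
prior = {u: Fraction(1, 3) for u in unknowns}
n = 4
knowns = 3  # the actual observed count

def likelihood(k, u):
    """Binomial probability of k successes in n trials given success probability u."""
    return comb(n, k) * u**k * (1 - u) ** (n - k)

# Joint probability generating model over (possible unknowns, possible knowns).
joint = {(u, k): prior[u] * likelihood(k, u) for u in unknowns for k in range(n + 1)}

# Route 1: condition the joint model on possible knowns = knowns, then renormalize.
column = {u: joint[(u, knowns)] for u in unknowns}
total = sum(column.values())
posterior_conditioned = {u: p / total for u, p in column.items()}

# Route 2: prior(possible unknowns) * p(knowns | possible unknowns), then normalize.
unnormalized = {u: prior[u] * likelihood(knowns, u) for u in unknowns}
z = sum(unnormalized.values())
posterior_bayes = {u: p / z for u, p in unnormalized.items()}

assert posterior_conditioned == posterior_bayes  # identical, exactly
print({str(u): str(p) for u, p in posterior_bayes.items()})
```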

Nothing much new is needed for missing values in the first formulation: one just adds possible unknowns for a probability model that generates the missing values, and treats missingness as just one more of the possible knowns (e.g., that the 3rd observation was missing).
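A sketch of the missing-value case, assuming a hypothetical Bernoulli model for the observations and a missing-completely-at-random model for which values go missing. Both the data and the extra unknown `miss_rate` are illustrative assumptions. The missing 3rd observation is simply part of the knowns that the simulated data must match.

```python
import random
from statistics import mean

# Hypothetical data: four binary observations, the 3rd of which is missing (None).
observed = [1, 0, None, 1]

def simulate_knowns(theta, miss_rate, size, rng):
    """Stage 2: generate observations, then blank some out with a missingness model."""
    values = [1 if rng.random() < theta else 0 for _ in range(size)]
    # Assumed missingness model: each value goes missing independently with
    # probability miss_rate (missing completely at random).
    return [None if rng.random() < miss_rate else v for v in values]

def posterior_with_missing(observed, n_sims=200_000, seed=2):
    rng = random.Random(seed)
    kept = []
    for _ in range(n_sims):
        # Stage 1: the possible unknowns now include the missingness rate.
        theta, miss_rate = rng.random(), rng.random()
        # Keep the draw only if the simulated knowns, missing pattern included, match.
        if simulate_knowns(theta, miss_rate, len(observed), rng) == observed:
            kept.append(theta)
    return kept

draws = posterior_with_missing(observed)
print(len(draws), round(mean(draws), 3))
```

Under these assumptions (independent Uniform priors, missingness unrelated to the values), the exact posterior for `theta` is Beta(3, 2) with mean 0.6. A missingness model that depends on the unobserved value would only change `simulate_knowns`; the accept/reject loop stays the same.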

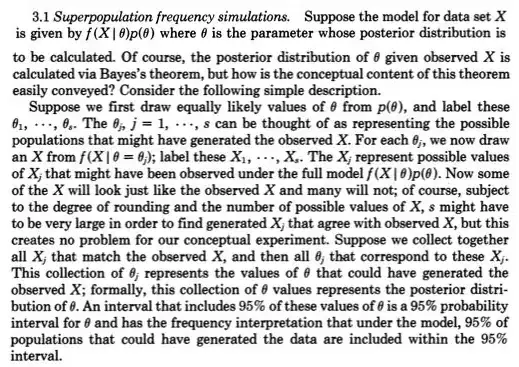

Recently, approximate Bayesian computation (ABC) has taken this constructive two-stage simulation approach seriously for cases where p(possible knowns = knowns | possible unknowns) cannot be worked out. But even when it can be worked out and the posterior is easily obtained by MCMC sampling (or is even directly available because the prior is conjugate), Rubin’s point that this two-stage sampling construction makes things easier to understand should not be overlooked.
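Rejection ABC replaces exact matching with "very close" matching on a summary statistic. The sketch below assumes hypothetical data from a Normal(mu, 1) model, a Normal(0, 1) prior on mu, and a tolerance `epsilon`. This particular likelihood could of course be worked out, which is what lets the result be checked against the conjugate posterior.

```python
import random
from statistics import mean

# Hypothetical data, assumed to come from Normal(mu, 1) with sigma known.
data = [1.2, 0.8, 1.5, 0.9, 1.1]

def abc_rejection(data, epsilon=0.05, n_sims=100_000, seed=3):
    """Rejection ABC: the likelihood is only simulated from, never evaluated."""
    rng = random.Random(seed)
    target = mean(data)  # summary statistic (sufficient for mu in this model)
    kept = []
    for _ in range(n_sims):
        mu = rng.gauss(0.0, 1.0)                               # stage 1: prior draw
        fake = [rng.gauss(mu, 1.0) for _ in range(len(data))]  # stage 2: possible knowns
        if abs(sum(fake) / len(fake) - target) < epsilon:      # "very close" to the knowns
            kept.append(mu)
    return kept

draws = abc_rejection(data)
print(len(draws), round(mean(draws), 3))
```

For comparison, the exact conjugate posterior mean here is n * xbar / (n + 1) = 5 * 1.1 / 6, about 0.917; shrinking `epsilon` moves the ABC answer toward it at the cost of fewer kept draws.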

For instance, I am sure it would have caught the problem @Zen identified in “Bayesians: slaves of the likelihood function?”, because one would have needed to draw a possible unknown c from a prior (stage one) and then draw a possible known (data) given that c (stage two). That second draw would not have been a valid random generation, as p(possible knowns | c) would not have been a probability except for one and only one c.

From @Zen: “Unfortunately, in general, this is not a valid description of a statistical model. The problem is that, by definition, $f_{X_i\mid C}(\,\cdot\mid c)$ must be a probability density for almost every possible value of $c$, which is, in general, clearly false.”
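To see why stage two breaks down, one can check whether a candidate f(x | c) integrates to 1 before trying to sample from it. The function below is a hypothetical stand-in, c * exp(-x) on x >= 0, chosen only because it is a probability density for one and only one c (c = 1); it is not the model from the linked question.

```python
import math

def f(x, c):
    """Hypothetical candidate 'model' f(x | c) = c * exp(-x) for x >= 0 (illustration only)."""
    return c * math.exp(-x)

def total_mass(c, upper=50.0, steps=20_000):
    """Trapezoid-rule approximation of the integral of f(x | c) over [0, upper]."""
    h = upper / steps
    inner = sum(f(i * h, c) for i in range(1, steps))
    return h * (0.5 * f(0.0, c) + inner + 0.5 * f(upper, c))

for c in (0.5, 1.0, 2.0):
    # Stage 2 of the two-stage simulation needs a probability density to draw from;
    # that exists only when the total mass is 1, i.e. only for c = 1 here.
    print(c, round(total_mass(c), 4))
```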