(This answer uses results from W.H. Greene (2003), Econometric Analysis, 5th ed. ch.21)

I will answer the following modified version, which I believe accomplishes the goals of the OP's question: "If we only estimate a logit model with one binary regressor of interest and some (dummy or continuous) control variables, can we tell whether dropping the control variables will result in a change of sign for the coefficient of the regressor of interest?"

Notation: Let $RA\equiv Y$ be the dependent variable, $HHS \equiv X$ the binary regressor of interest and $\mathbf Z$ a matrix of control variables. The size of the sample is $n$. Denote by $n_0$ the number of zero-realizations of $X$ and by $n_1$ the number of non-zero realizations, so that $n_0+n_1=n$. Denote by $\Lambda(\cdot)$ the cdf of the logistic distribution.

Let the model including the control variables (the "unrestricted" model) be

$$M_U : \begin{align} &P(Y=1\mid X,\mathbf Z)=\Lambda(X, \mathbf Z,b,\mathbf c)\\ &P(Y=0\mid X,\mathbf Z)=1-\Lambda(X, \mathbf Z,b,\mathbf c) \end{align}$$

where $b$ is the coefficient on the regressor of interest.

Let the model including only the regressor of interest (the "restricted" model, which as used below contains no constant term) be

$$M_R : \begin{align} &P(Y=1\mid X)=\Lambda(X, \beta)\\ &P(Y=0\mid X)=1-\Lambda(X,\beta) \end{align}$$

STEP 1

Consider the unrestricted model. The first derivative of the log-likelihood with respect to $b$, together with the first-order condition for a maximum, is

$$\frac {\partial \ln L_U}{\partial b}= \sum_{i=1}^n\left[y_i-\Lambda_i(x_i, \mathbf z_i,b,\mathbf c)\right]x_i=0 \Rightarrow b^*: \sum_{i=1}^ny_ix_i=\sum_{i=1}^n\Lambda_i(x_i, \mathbf z_i,b^*,\mathbf c^*)x_i \qquad[1]$$

The analogous relation for the restricted model is

$$\frac {\partial \ln L_R}{\partial \beta}= \sum_{i=1}^n\left[y_i-\Lambda_i(x_i,\beta)\right]x_i=0 \Rightarrow \beta^*: \sum_{i=1}^ny_ix_i=\sum_{i=1}^n\Lambda_i(x_i, \beta^*)x_i \qquad[2]$$

We have

$$\Lambda_i(x_i,\beta^*) = \frac {1}{1+e^{-x_i\beta^*}}$$

and since $X$ is a zero/one binary variable, only the $n_1$ observations with $x_i=1$ contribute to the right-hand side, so relation $[2]$ can be written

$$\beta^*: \sum_{i=1}^ny_ix_i=\frac {n_1}{1+e^{-\beta^*}} \qquad[2a]$$
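
As a short worked step, $[2a]$ can be solved explicitly. The symbol $\bar y_1$ is notation introduced here for convenience (it is not in the original derivation); it denotes the sample share of $y_i=1$ among the observations with $x_i=1$, and the solution requires $0<\bar y_1<1$:

$$\bar y_1 \equiv \frac {1}{n_1}\sum_{i=1}^ny_ix_i \;\Rightarrow\; \frac {1}{1+e^{-\beta^*}} = \bar y_1 \;\Rightarrow\; \beta^* = \ln \frac {\bar y_1}{1-\bar y_1}$$

So in this restricted model $\beta^*>0$ exactly when $\bar y_1 > 1/2$.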

Combining $[1]$ and $[2a]$ and using again the fact that $X$ is binary we obtain the following equality relation between the estimated coefficients of the two models:

$$\frac {n_1}{1+e^{-\beta^*}} = \sum_{i=1}^n\Lambda_i(x_i, \mathbf z_i,b^*,\mathbf c^*)x_i $$

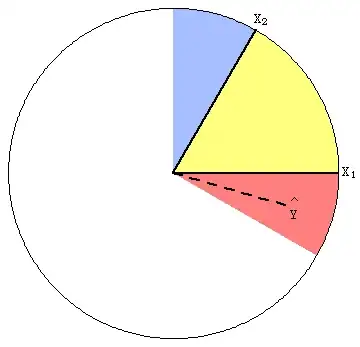

$$\Rightarrow \frac {1}{1+e^{-\beta^*}} = \frac {1}{n_1}\sum_{x_i=1}\Lambda_i(x_i=1, \mathbf z_i,b^*,\mathbf c^*) \qquad [3]$$

$$\Rightarrow \hat P_R(Y=1\mid X=1) = \hat {\bar P_U}(Y=1\mid X=1,\mathbf Z) \qquad [3a]$$

or, in words: the estimated probability $P(Y=1\mid X=1)$ from the restricted model equals the average, taken over the $n_1$ observations with $x_i=1$, of the estimated probabilities from the model that includes the control variables.
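
Relation $[3]$ can be checked numerically. The following is a minimal sketch, not part of the original argument: the data are simulated, the Newton-Raphson routine `fit_logit` is a hand-written helper, and all variable names and parameter values are assumptions chosen for illustration. The restricted model is fitted without a constant, matching $M_R$ as written above, while the unrestricted model is assumed to contain a constant among the controls.

```python
import numpy as np

def sigmoid(t):
    """Logistic cdf, Lambda(t)."""
    return 1.0 / (1.0 + np.exp(-t))

def fit_logit(W, y, iters=50):
    """Logit MLE by Newton-Raphson. W is used as given (no constant is added)."""
    theta = np.zeros(W.shape[1])
    for _ in range(iters):
        p = sigmoid(W @ theta)
        grad = W.T @ (y - p)                       # score vector
        hess = (W * (p * (1 - p))[:, None]).T @ W  # minus the Hessian
        theta = theta + np.linalg.solve(hess, grad)
    return theta

# Simulated data (illustrative values only)
rng = np.random.default_rng(0)
n = 500
x = rng.integers(0, 2, size=n).astype(float)   # binary regressor of interest
z = rng.normal(size=n) + 0.5 * x               # one continuous control
y = (rng.random(n) < sigmoid(-0.3 + 1.0 * x - 1.0 * z)).astype(float)

# Unrestricted model: columns are x, a constant, and z
W_u = np.column_stack([x, np.ones(n), z])
theta_u = fit_logit(W_u, y)

# Restricted model: x only, no constant
beta_star = fit_logit(x[:, None], y)[0]

lhs = sigmoid(beta_star)                       # left-hand side of [3]
rhs = sigmoid(W_u[x == 1] @ theta_u).mean()    # right-hand side of [3]
print(lhs, rhs)
```

Both sides also equal the sample share of $y_i=1$ among the observations with $x_i=1$, which is what the two first-order conditions $[1]$ and $[2]$ have in common.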

STEP 2

For a sole binary regressor in a logistic regression without a constant term, we have $\hat P_R(Y=1\mid X=0)=\Lambda(0)=\frac 12$, so its marginal effect $\hat m_R(X)$ is

$$ \hat m_R(X)= \hat P_R(Y=1\mid X=1) - \hat P_R(Y=1\mid X=0)$$

$$ \Rightarrow \hat m_R(X) = \frac {1}{1+e^{-\beta^*}} - \frac 12$$

and using $[3]$

$$ \hat m_R(X) = \frac {1}{n_1}\sum_{x_i=1}\Lambda_i(x_i=1, \mathbf z_i,b^*,\mathbf c^*) - \frac 12 \qquad [4]$$

For the unrestricted model that includes the control variables we have

$$ \hat m_U(X)= \hat P_U(Y=1\mid X=1, \bar {\mathbf z}) - \hat P_U(Y=1\mid X=0, \bar {\mathbf z})$$

$$\Rightarrow \hat m_U(X) = \frac {1}{1+e^{-b^*-\bar {\mathbf z}'\mathbf c^*}} - \frac {1}{1+e^{-\bar {\mathbf z}'\mathbf c^*}} \qquad [5]$$

where $\bar {\mathbf z}$ contains the sample means of the control variables.

It is easy to see that the marginal effect of $X$ has the same sign as its estimated coefficient, because $\Lambda$ is strictly increasing. Since we have expressed the marginal effect of $X$ from both models in terms of the estimated coefficients from the unrestricted model, we can estimate only the latter and then calculate expressions $[4]$ and $[5]$, which will tell us whether the coefficient of $X$ changes sign, without needing to estimate the restricted model.
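
The final calculation can be sketched in code as follows. This is an illustration under stated assumptions, not a definitive implementation: the function name `marginal_effects` is hypothetical, and the toy data and the coefficient values `b` and `c` are made up by hand (they are not fitted estimates). In practice one would plug in $b^*$ and $\mathbf c^*$ from the estimated unrestricted model.

```python
import numpy as np

def sigmoid(t):
    """Logistic cdf, Lambda(t)."""
    return 1.0 / (1.0 + np.exp(-t))

def marginal_effects(x, Z, b, c):
    """Return (m_R, m_U) computed as in [4] and [5].

    x : (n,) zero/one regressor of interest
    Z : (n, k) controls (include a column of ones if the model has a constant)
    b : coefficient on x from the unrestricted model
    c : (k,) coefficients on Z from the unrestricted model
    """
    x = np.asarray(x, dtype=float)
    Z = np.asarray(Z, dtype=float)
    c = np.asarray(c, dtype=float)
    index = Z @ c
    # [4]: average fitted probability over observations with x_i = 1, minus 1/2
    m_R = sigmoid(b + index[x == 1]).mean() - 0.5
    # [5]: effect of switching x from 0 to 1 at the sample means of the controls
    zbar_c = Z.mean(axis=0) @ c
    m_U = sigmoid(b + zbar_c) - sigmoid(zbar_c)
    return m_R, m_U

# Made-up inputs, for illustration only
x = [1, 1, 0, 0]
Z = [[1.0, 2.0], [1.0, 3.0], [1.0, 0.0], [1.0, 1.0]]  # constant and one control
b, c = 0.5, [-0.2, -1.0]

m_R, m_U = marginal_effects(x, Z, b, c)
sign_reversal = np.sign(m_R) != np.sign(m_U)
print(m_R, m_U, sign_reversal)
```

With these made-up numbers the two expressions have opposite signs, which is the situation the procedure is meant to detect.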