Others have suggested standardized coefficients as a solution, which is one good approach, but they did not explain why you are seeing what you are seeing.

Remember that the slope is interpreted as the average increase in y for a 1-unit increase in x (holding the other variables constant). Its size therefore depends on the units of both x and y.

Imagine a plant that grows, on average, 1 foot in height each year. If you measure a bunch of plants at different times and fit a regression model with y as height in feet and x as time in years, you will see a slope of about 1.

Now suppose we measure time in months instead of years (but keep height in feet): the slope will be about 1/12. If instead we keep x in years but measure height in inches rather than feet, the slope will be about 12. Go even further and measure time (x) in seconds and height (y) in miles, and the slope becomes extremely small: about 1/(365 × 24 × 60 × 60 × 5280), roughly 6 × 10⁻¹². If you measure time in centuries and height in millimeters, the slope will be huge: about 30,480, since 1 foot is 304.8 mm.
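To make the unit arithmetic concrete, here is a minimal sketch using simulated data (the 200 plants, the 0 to 10 year age range, and the noise level of 0.5 ft are all made-up assumptions, not from any real study). It fits the same data after re-expressing x and y in the units discussed above; rescaling x by a factor `a` and y by a factor `c` multiplies the least-squares slope by exactly `c / a`.

```python
import random

def ols_slope(x, y):
    """Least-squares slope of y on x (simple linear regression with intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx

# Hypothetical data: 200 plants measured at random ages between 0 and 10
# years, growing 1 foot per year on average plus Gaussian noise (sd 0.5 ft).
random.seed(1)
years = [random.uniform(0, 10) for _ in range(200)]
feet = [1.0 * t + random.gauss(0, 0.5) for t in years]

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # ignores leap years, as in the text
FEET_PER_MILE = 5280
MM_PER_FOOT = 304.8

# The same measurements, re-expressed in different units before fitting.
slopes = {
    "feet per year": ols_slope(years, feet),
    "feet per month": ols_slope([t * 12 for t in years], feet),
    "inches per year": ols_slope(years, [h * 12 for h in feet]),
    "miles per second": ols_slope([t * SECONDS_PER_YEAR for t in years],
                                  [h / FEET_PER_MILE for h in feet]),
    "mm per century": ols_slope([t / 100 for t in years],
                                [h * MM_PER_FOOT for h in feet]),
}

for units, slope in slopes.items():
    print(f"{units:>16}: {slope:.6g}")
```

Only the numbers printed for the slope change; the underlying data are identical in every row.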

In all of these cases, though (assuming no rounding errors), the relationship between the two variables is exactly the same: quantities such as R-squared and the significance level do not change, because the units cancel out in those calculations.
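A quick way to check the "units cancel out" claim is to compute R-squared and the slope's t-statistic under two different unit choices. The sketch below is a hand-rolled simple regression on hypothetical simulated data (50 plants, noise sd 1 ft, both assumptions). R-squared is a ratio of two sums of squares in the same (squared) y units, and the t-statistic divides the slope by its own standard error, so the scale factors cancel in both. Since the p-value is a function of t and the degrees of freedom alone, it is unchanged as well.

```python
import math
import random

def simple_regression(x, y):
    """OLS of y on x with intercept; returns (slope, R-squared, t-statistic of slope)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))  # residual SS
    sst = sum((yi - my) ** 2 for yi in y)                                    # total SS
    r_squared = 1 - sse / sst
    se_slope = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    return slope, r_squared, slope / se_slope

# Hypothetical data: 50 plants, 1 ft/year average growth, noise sd 1 ft.
random.seed(2)
years = [random.uniform(0, 10) for _ in range(50)]
feet = [t + random.gauss(0, 1.0) for t in years]

SECONDS_PER_YEAR = 365 * 24 * 60 * 60
fits = {
    "feet vs years": simple_regression(years, feet),
    "inches vs years": simple_regression(years, [h * 12 for h in feet]),
    "miles vs seconds": simple_regression([t * SECONDS_PER_YEAR for t in years],
                                          [h / 5280 for h in feet]),
}

for label, (slope, r2, t) in fits.items():
    print(f"{label:>16}: slope={slope:.4g}  R^2={r2:.4f}  t={t:.3f}")
```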

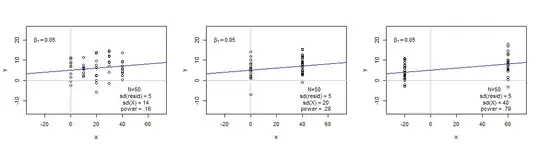

Standardized coefficients help because they change the units to standard deviations, which puts the coefficients on a more comparable scale. Another approach is simply to change your units to something more meaningful or easier to interpret. Or just accept that, given the scales and units involved, you should expect a small coefficient.
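As a rough illustration of why standardizing removes the unit dependence, the sketch below converts x and y to z-scores before fitting, again on hypothetical simulated data. It only covers the one-predictor case, where the standardized slope equals the Pearson correlation. In a multiple regression each standardized coefficient works out to b × sd(x)/sd(y), which is unit-free in the same way. The raw slope changes when we switch from feet per year to millimeters per month, but the standardized slope does not.

```python
import random
import statistics

def ols_slope(x, y):
    """Least-squares slope of y on x (simple linear regression with intercept)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx

def standardize(values):
    """Convert values to z-scores: (value - mean) / sample standard deviation."""
    m, s = statistics.mean(values), statistics.stdev(values)
    return [(v - m) / s for v in values]

def standardized_slope(x, y):
    """Slope when both x and y are measured in standard deviations."""
    return ols_slope(standardize(x), standardize(y))

# Hypothetical data: 100 plants, 1 ft/year average growth, noise sd 1 ft.
random.seed(3)
years = [random.uniform(0, 10) for _ in range(100)]
feet = [t + random.gauss(0, 1.0) for t in years]
months = [t * 12 for t in years]
mm = [h * 304.8 for h in feet]

raw = (ols_slope(years, feet), ols_slope(months, mm))
std = (standardized_slope(years, feet), standardized_slope(months, mm))
print("raw slopes (ft/yr, mm/month):", raw)
print("standardized slopes:         ", std)
```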