Much has been written on the ICC and Kappa, but there seems to be disagreement on the best measures to consider.

My purpose is to identify some measure which shows whether there was agreement between respondents an interviewee administered questionnaire. 17 people gave ratings of 0-5 to a defined list of items, rating them according to importance (NOT ranking).

I am not interested in whether the 17 participants all rated exactly the same, but only whether there is agreement that it should be rated high or not.

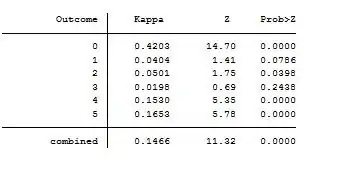

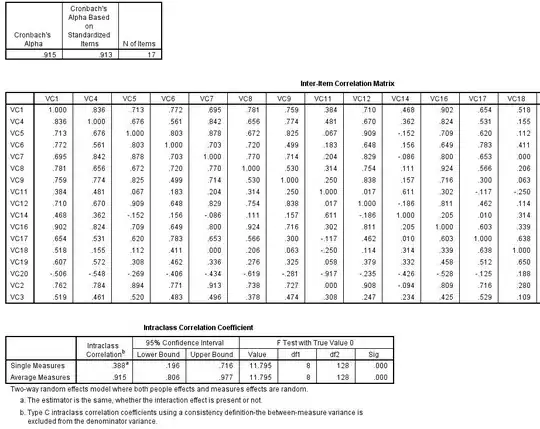

Following suggestions here, I have used the ICC and also Kappa but different results were produced as follows:

Also I note that given the very small sample, the validity of the ICC could be questionable due to the use of the f test see this question

What are your suggestions, and way forward to comment on this