Say I have a data set composed of $N$ objects. Each object has a given number of measured values attached to it (three in this case):

$x_1[a_1, b_1, c_1], x_2[a_2, b_2, c_2], ..., x_N[a_N, b_N, c_N]$

meaning I have measured the properties $[a_i, b_i, c_i]$ for each object $x_i$. The measurement space is thus the space spanned by the variables $[a, b, c]$, in which each object $x_i$ is represented by a point.

More graphically: I have $N$ objects scattered in a 3D space.

What I need is a way to determine the probability (or the likelihood? Is there a difference?) that a new object $y[a_y, b_y, c_y]$ belongs to this cloud of objects. This probability, calculated for any of the $x$ objects, will of course be very close to 1.
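One common way to make this concrete (a sketch, not the only possible answer) is to estimate the density of the cloud with a kernel density estimate (KDE) and report, for a new point $y$, the fraction of the $N$ objects that lie in regions of equal or lower estimated density. The code assumes the objects are stored as an $N \times 3$ NumPy array; the diagonal Gaussian kernel, the per-dimension Scott bandwidth and the function names are illustrative choices (`scipy.stats.gaussian_kde` uses the full data covariance instead). Note that the KDE value itself is a density, i.e. a likelihood, not a probability, and that the returned score is a p-value-like tail fraction: for a typical $x_i$ it is spread over $(0, 1)$ rather than being close to 1.

```python
import numpy as np


def _gaussian_kernel_matrix(train, points, h):
    """K[i, j] = product Gaussian kernel between points[i] and train[j]."""
    z = (points[:, None, :] - train[None, :, :]) / h   # shape (m, n, d)
    norm = np.prod(np.sqrt(2.0 * np.pi) * h)           # kernel normalisation
    return np.exp(-0.5 * np.sum(z**2, axis=2)) / norm  # shape (m, n)


def scott_bandwidth(train):
    """Per-dimension bandwidth: std_j * n**(-1/(d+4)) (Scott's rule, diagonal)."""
    n, d = train.shape
    return train.std(axis=0, ddof=1) * n ** (-1.0 / (d + 4))


def kde_density(train, points):
    """KDE of the cloud `train` (n x d) evaluated at `points` (m x d)."""
    train = np.asarray(train, dtype=float)
    points = np.atleast_2d(np.asarray(points, dtype=float))
    h = scott_bandwidth(train)
    return _gaussian_kernel_matrix(train, points, h).mean(axis=1)


def tail_probability(train, points):
    """Fraction of cloud objects whose (leave-one-out) density is <= the density at each point."""
    train = np.asarray(train, dtype=float)
    n = train.shape[0]
    h = scott_bandwidth(train)
    k_tt = _gaussian_kernel_matrix(train, train, h)
    np.fill_diagonal(k_tt, 0.0)          # leave-one-out: drop each object's own kernel
    ref = k_tt.sum(axis=1) / (n - 1)     # density at each x_i estimated from the others
    dens = kde_density(train, points)    # density at the new points
    return (ref[None, :] <= dens[:, None]).mean(axis=1)


# Synthetic stand-in for the N objects: a 3-D standard normal cloud.
rng = np.random.default_rng(0)
cloud = rng.normal(size=(500, 3))
p = tail_probability(cloud, [[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]])
print(p)
```

With this synthetic cloud the point at the origin should get a high score and the point at $(5, 5, 5)$ a score of essentially 0. Leave-one-out densities are used for the reference values so that each $x_i$ does not count its own kernel.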

Is this feasible?

Add 1

To address AdamO's question: the object $y$ belongs to a set of $M$ mixed objects (with $M > N$). This means that some objects in this set will have a high probability of belonging to the first data set ($N$) and others a lower one. I'm actually interested in these low-probability objects.

I can also come up with up to 3 more data sets of $N1$, $N2$ and $N3$ objects, all with the same global properties as the $N$ data set. That is, an object in $M$ that has a low probability with respect to $N$ will also have low probabilities when compared with $N1$, $N2$ and $N3$ (and vice versa: objects in $M$ with a high probability of belonging to $N$ will also have high probabilities of belonging to $N1$, $N2$ and $N3$).
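A sketch of how the low-probability objects in $M$ could be picked out and cross-checked against $N$, $N1$, $N2$ and $N3$. To keep it short it swaps in a different, simpler score than a KDE: the distance to the $k$-th nearest neighbour in each reference set, turned into an empirical p-value by comparing it with the reference objects' own leave-one-out $k$-th neighbour distances. An object is flagged only if its p-value is below `alpha` for every reference set, mirroring the consistency described above. The values `k=10` and `alpha=0.01`, standardising $a$, $b$, $c$ by each reference set's mean and standard deviation, the function names and the synthetic data are all assumptions for illustration.

```python
import numpy as np


def knn_pvalue(ref, points, k=10):
    """Empirical p-value of each point w.r.t. the reference cloud `ref` (n x d)."""
    ref = np.asarray(ref, dtype=float)
    points = np.atleast_2d(np.asarray(points, dtype=float))
    # Assumption: a, b, c may have different units, so standardise by the reference set.
    mu, sd = ref.mean(axis=0), ref.std(axis=0, ddof=1)
    ref, points = (ref - mu) / sd, (points - mu) / sd
    # k-th nearest-neighbour distance of each reference object, excluding itself.
    d_rr = np.linalg.norm(ref[:, None, :] - ref[None, :, :], axis=2)
    np.fill_diagonal(d_rr, np.inf)
    ref_scores = np.sort(d_rr, axis=1)[:, k - 1]
    # k-th nearest-neighbour distance of each candidate to the reference set.
    d_pr = np.linalg.norm(points[:, None, :] - ref[None, :, :], axis=2)
    scores = np.sort(d_pr, axis=1)[:, k - 1]
    # Fraction of reference objects that are at least as isolated as the candidate.
    return (ref_scores[None, :] >= scores[:, None]).mean(axis=1)


def flag_low_probability(reference_sets, candidates, alpha=0.01, k=10):
    """Flag candidates whose p-value is below alpha for every reference set."""
    pvals = np.column_stack([knn_pvalue(r, candidates, k) for r in reference_sets])
    return pvals.max(axis=1) < alpha, pvals


rng = np.random.default_rng(1)
reference_sets = [rng.normal(size=(300, 3)) for _ in range(4)]  # stand-ins for N, N1, N2, N3
candidates = np.vstack([rng.normal(size=(50, 3)),             # objects like those in N
                        rng.normal(loc=6.0, size=(5, 3))])    # clearly different objects
flags, pvals = flag_low_probability(reference_sets, candidates)
print(np.flatnonzero(flags))
```

Requiring a low p-value in all reference sets (rather than in just one) makes the selection less sensitive to the particular sample drawn for $N$.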

Add 2

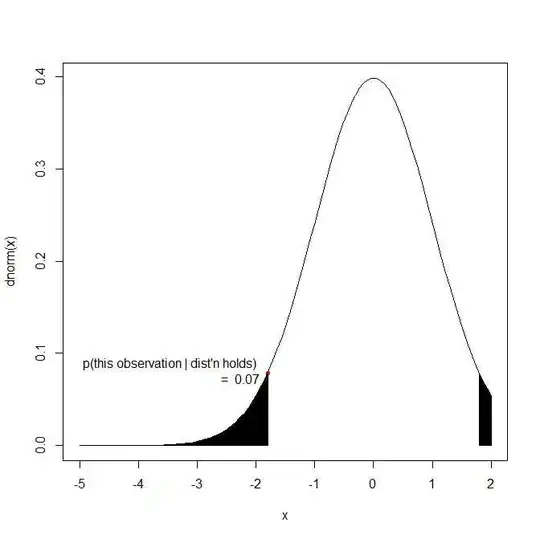

According to the answer given in the question Interpretation/use of kernel density, I cannot derive the probability that a new object belongs to the set that generated the KDE/PDF (assuming I could even solve the equation for a multimodal PDF), because I would have to make the a priori assumption that the new object was generated by the same process that generated the data set from which I obtained the KDE. Could someone confirm this, please?
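On this last point, a short illustration of why an extra assumption is unavoidable. A KDE (or any PDF) evaluated at $y$ is a density, i.e. the likelihood $f_N(y)$, and it can exceed 1. Turning it into a membership probability requires Bayes' rule, $P(N \mid y) = \pi f_N(y) / [\pi f_N(y) + (1 - \pi) f_{\text{alt}}(y)]$, which needs both a prior $\pi$ and a density $f_{\text{alt}}$ for whatever else could have generated $y$. The sketch below uses a hypothetical isotropic Gaussian cloud for $N$ and a hypothetical uniform $10 \times 10 \times 10$ box for the alternative, purely to show how strongly the result depends on those choices.

```python
import math


def gaussian_pdf_3d(y, mean, sigma):
    """Isotropic 3-D normal density at y: a likelihood value, not a probability."""
    r2 = sum((yi - mi) ** 2 for yi, mi in zip(y, mean))
    return (2.0 * math.pi * sigma**2) ** -1.5 * math.exp(-r2 / (2.0 * sigma**2))


def posterior_membership(f_n, f_alt, prior_n):
    """Bayes' rule: P(y generated by the N-process | y), given both densities and a prior."""
    num = prior_n * f_n
    return num / (num + (1.0 - prior_n) * f_alt)


# A narrow cloud gives a density far above 1 at its centre, so it cannot be a probability.
peak = gaussian_pdf_3d((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), 0.1)

# Hypothetical alternative: objects not from N are uniform in a 10 x 10 x 10 box.
f_alt = 1.0 / 10.0**3
f_n = gaussian_pdf_3d((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), 1.0)
post = {prior: posterior_membership(f_n, f_alt, prior) for prior in (0.5, 0.01)}
print(peak, post)
```

With $\pi = 0.5$ an object at the centre of the cloud gets a posterior of about 0.98, while with $\pi = 0.01$ the same object drops to about 0.39, even though its likelihood $f_N(y)$ is unchanged.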