I have a question regarding the interpretation of the different matrices produced by singular value decomposition.

Suppose an $m \times n$ matrix $A$ contains $n$ images of $m$ pixels each, so that each column of the matrix, when reshaped, is an image. The images are actually wavelet transforms, but that has no bearing on the question. Furthermore, the first $k$ columns of $A$ are images of dogs and the remaining columns are images of cats. So I have something like this:

    wavelet_dc = np.hstack((wavelet_dogs, wavelet_cats))

    U, S, V = svd(wavelet_dc, full_matrices=False)

What is the interpretation of U, S and V? I know we can reconstruct the original matrix as the sum $A = \sum_{i} u_{i}s_{i}v^{T}_{i}$, in which $u_{i}$ and $v_{i}$ are the column vectors of U and V, respectively. I also know that $S$ ranks the modes of the original matrix by importance and that the columns of U are the features associated with those modes. However, what role does V play in this decomposition? I'd like to know how to think about V in practical applications (that is, as more than a rotation in the geometric interpretation of SVD).
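The sum above can be checked numerically. The following is a minimal sketch, assuming `np.linalg.svd` and a random matrix standing in for `wavelet_dc`; the sizes and the name `Vh` are made up for illustration. Note that NumPy documents the third return value as the already transposed factor, so its rows (not its columns) are the $v_{i}^{T}$.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 50, 8                      # hypothetical sizes: m pixels, n images
A = rng.standard_normal((m, n))   # random stand-in for wavelet_dc

# Third output is V^T: row i of Vh is v_i^T.
U, S, Vh = np.linalg.svd(A, full_matrices=False)

# Rebuild A as a sum of rank-1 terms u_i * s_i * v_i^T.
A_sum = sum(S[i] * np.outer(U[:, i], Vh[i, :]) for i in range(n))

print(np.allclose(A, A_sum))
```

Each term is an $m \times n$ matrix: the mode $u_{i}$ (an image-shaped pattern), scaled by $s_{i}$, with one weight per image taken from $v_{i}^{T}$.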

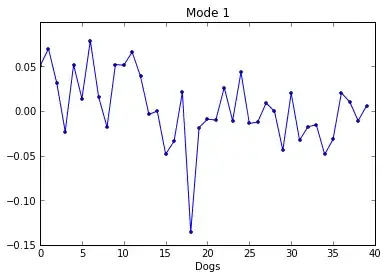

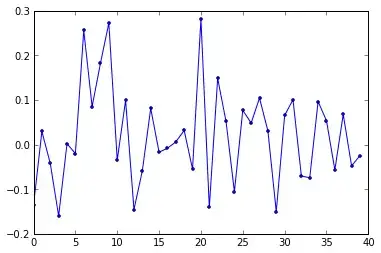

Furthermore, suppose I plot V in the following way:

    plot(V[0:40, 0], 'o-', markersize=3)

Since V is an $n \times n$ matrix, I don't know how to interpret it, but I think this code takes the first 40 rows of the first column of V. I understand V as:

$$V = \begin{pmatrix}v_{1}^{T} \\v_{2}^{T} \\\vdots \\v_{n}^{T}\end{pmatrix}$$

I think the plot shows the first component of each of the 40 vectors $v_{1}, \dots, v_{40}$, but what does that mean? In the Coursera class "Computational Methods for Data Analysis", this is described as the dogs for the first mode, and V[0:40, 1] would be the dogs for the second mode, and so on. You can see the notes here, figure 159, page 388. This assumes, of course, that at least the first 40 columns of $A$ contain images of dogs. In the class, they also plot V[80:120, 0] because in their matrix $A$ the first 80 columns are images of dogs and the last 80 columns are images of cats, so V[80:120, 0] plots the first 40 images of cats.
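To see what "the dogs for the first mode" could look like in a controlled case, here is a minimal sketch on synthetic data. It assumes two made-up pixel patterns plus small noise instead of real images, and all sizes and names are hypothetical. It uses `np.linalg.svd`, whose third output is the transposed factor, so the per-image coefficients for the first mode are read from the first row of that output.

```python
import numpy as np

rng = np.random.default_rng(0)
m, k, n = 100, 40, 80            # hypothetical: 40 "dogs" then 40 "cats"

# Two made-up orthogonal pixel patterns standing in for the two classes.
p_dog = np.zeros(m)
p_dog[0] = 3.0
p_cat = np.zeros(m)
p_cat[1] = 1.0

dogs = p_dog[:, None] + 0.01 * rng.standard_normal((m, k))
cats = p_cat[:, None] + 0.01 * rng.standard_normal((m, n - k))
A = np.hstack((dogs, cats))

U, S, Vh = np.linalg.svd(A, full_matrices=False)   # rows of Vh are v_i^T

# One coefficient per image for the first mode.
# The overall sign of a mode is arbitrary, so compare magnitudes.
dog_coeffs = np.abs(Vh[0, :k])
cat_coeffs = np.abs(Vh[0, k:])
print(dog_coeffs.min(), cat_coeffs.max())
```

In this toy setup the first mode is essentially the dog pattern, so the dog columns get coefficients of noticeable size while the cat columns get coefficients near zero. Real images would not separate this cleanly.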

UPDATE:

According to the video lecture W8_L21_P3-Features: https://class.coursera.org/compmethods-002/lecture/76, they seem to interpret V[0:40, x] as the projection of the first 40 dogs onto the modes. V has dimensions $n \times n$, where $n$ is the number of images; however, I wouldn't interpret it as "dogs". In any case, I think such projections shouldn't be V[0:40, 0] but V[0, 0:40], because these are the first 40 components of the vector $v_{1}^{T}$ that multiplies the first mode $s_{1}$. However, please let me know if I have made a mistake.
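Which slice is correct depends on whether the routine returns $V$ or $V^{T}$. The following minimal sketch, assuming `np.linalg.svd` and random data with made-up sizes, checks the projection identity $U^{T}A = SV^{T}$ and compares the two indexing conventions. `Vh` is a name chosen here for NumPy's third output, which is the transposed factor; MATLAB's `svd`, by contrast, returns the untransposed $V$.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, k = 60, 10, 4               # hypothetical sizes; k = images to inspect
A = rng.standard_normal((m, n))   # random stand-in for the image matrix

U, S, Vh = np.linalg.svd(A, full_matrices=False)

# Projection of every image (column of A) onto every mode (column of U):
# proj[i, j] = u_i . a_j
proj = U.T @ A

# Because A = U diag(S) Vh and U has orthonormal columns,
# U^T A = diag(S) Vh, i.e. proj[i, j] = s_i * Vh[i, j].
print(np.allclose(proj, S[:, None] * Vh))

# First-mode coefficients of the first k images under each convention.
from_vh = Vh[0, :k]     # NumPy-style output: row 0 holds v_1^T
V = Vh.T                # untransposed V (MATLAB-style output)
from_v = V[:k, 0]       # same numbers, read down the first column
print(np.allclose(from_vh, from_v))
```

So with a transposed third output the first-mode coefficients are `Vh[0, 0:40]`, and with an untransposed one they are `V[0:40, 0]`. Either way, they equal the projections of the images onto the first mode divided by the first singular value.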