Wikipedia's examples say that a coin is unfair if it generates the sequence 1111111. A high alternation rate, as in 1010101010, would indicate an unfair coin just as well.

What is the fallacy in thinking that a coin is unfair on the grounds that any particular sequence is equally improbable?

I mean that this is normally "resolved" by pointing out that P(11111111) = P(00000000) = P(01010101) = P(10101010) = P(any other sequence of the same length), which is taken to show that the coin can be fair. But I read it the other way: because all sequences have the same probability, and P(all ones) = P(any sequence), every sequence is highly improbable, so it seems that no coin could ever be judged fair.
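As a quick check of the equal-probability claim, here is a minimal Python sketch that enumerates every 8-flip sequence. It assumes independent flips of a fair coin; `sequence_probability` is a hypothetical helper written for this illustration.

```python
from fractions import Fraction
from itertools import product

# Assumption: a fair coin (P(1) = P(0) = 1/2) and independent flips.
N_FLIPS = 8
P_ONE = Fraction(1, 2)

def sequence_probability(seq: str, p_one: Fraction = P_ONE) -> Fraction:
    """Probability of one specific 0/1 sequence under independent flips."""
    prob = Fraction(1)
    for ch in seq:
        prob *= p_one if ch == "1" else 1 - p_one
    return prob

# Enumerate every possible 8-flip sequence and its exact probability.
all_sequences = ["".join(bits) for bits in product("01", repeat=N_FLIPS)]
probs = {s: sequence_probability(s) for s in all_sequences}

print(len(all_sequences))                     # 256
print(probs["11111111"], probs["01010101"])   # 1/256 1/256
print(sum(probs.values()))                    # 1
```

Each specific sequence has probability 2^-8 = 1/256, whether it looks patterned or not; the sequences only start to differ once they are grouped by some statistic, such as the number of ones.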

So we end up concluding that a fair coin cannot be fair. Where is the fallacy, and how should the p-value test be applied properly?

**Edit:** The null hypothesis is that the coin is fair, and the test statistic is the probability of the observed sequence. I can compute that probability under the hypothesis. For any sufficiently long sequence this theoretical probability is very low, so fairness must be rejected. Where is the fallacy?
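The rule proposed in the edit (reject fairness when the probability of the exact observed sequence falls below a threshold) can be simulated with a minimal Python sketch. The threshold `ALPHA = 0.05` and the helper names are assumptions chosen for illustration; the coin in the simulation is genuinely fair.

```python
import random

# Assumptions: fair coin, independent flips, significance level ALPHA = 0.05.
# Rule from the question: reject fairness if the probability of the exact
# observed sequence under H0 is below ALPHA.
ALPHA = 0.05

def prob_of_exact_sequence(n_flips: int) -> float:
    # Under H0 every specific sequence of length n has probability 0.5**n,
    # so this value does not depend on which sequence was observed.
    return 0.5 ** n_flips

def rejection_rate_under_h0(n_flips: int, trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of sequences from a truly fair coin that the rule rejects."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        seq = [rng.randint(0, 1) for _ in range(n_flips)]  # fair coin flips
        if prob_of_exact_sequence(len(seq)) < ALPHA:
            rejections += 1
    return rejections / trials

for n in (3, 4, 5, 10, 20):
    print(n, prob_of_exact_sequence(n), rejection_rate_under_h0(n))
```

Under this rule a perfectly fair coin is rejected every time once n is 5 or more (since 0.5^5 < 0.05), so the false-rejection rate is 100% instead of the 5% that a level-0.05 test is supposed to have.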

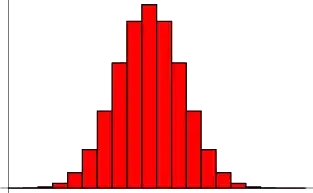

**Edit 2:** Why can't someone simply say that the pitfall is the one pointed out in the first Wikipedia example: the p-value criterion does not take the sample size into account? I can even trivialize the problem. Forget the sequences. Let's evaluate the probability of picking a single item, item38, under the assumption of a uniform distribution over 0-100. Obviously it is about 1%, which is low enough that picking it by chance seems unlikely. Yet the observed frequency of that item is 100% (1 occurrence in 1 experiment). According to the p-value test this cannot be due to chance, yet the sample size is clearly insufficient. So the p-value test must be complemented by an analysis of the sample size, and forgetting this is the fallacy. Right?
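A minimal sketch of the single-item version, assuming "uniform 0-100" means the 101 integers 0 to 100 (so one specific item has probability 1/101, about 1%). The two hypothetical helpers contrast an item fixed before drawing with an item singled out only after seeing the data:

```python
from fractions import Fraction

# Assumption: "uniform 0-100" means the 101 integers 0, 1, ..., 100,
# each drawn independently with probability 1/101.
N_ITEMS = 101
P_ITEM = Fraction(1, N_ITEMS)

def p_item38_every_time(n_draws: int) -> Fraction:
    """P(item38 is picked in all n draws): item fixed in advance."""
    return P_ITEM ** n_draws

def p_some_item_every_time(n_draws: int) -> Fraction:
    """P(all n draws are the same item, whichever item it is): item chosen after looking."""
    return N_ITEMS * P_ITEM ** n_draws

for n in (1, 2, 3):
    print(n, p_item38_every_time(n), p_some_item_every_time(n))
```

With a single draw, the event "some item appears in 100% of the draws" has probability 1; only the probability of a pre-specified item is small.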

A related question: what distribution does the number of times item38 is picked follow if I draw multiple samples? And how do I take the integral over the "extreme cases"?
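Assuming independent draws from the same uniform distribution over the 101 items, the count K of item38 in n draws follows a Binomial(n, 1/101) distribution. Because K is discrete, the "integral over extreme cases" becomes a sum over all counts at least as large as the observed one. The sketch below assumes a one-sided notion of "extreme" (item38 appearing too often); `scipy.stats.binom.sf(k_obs - 1, n, p)` should give the same upper tail if SciPy is available.

```python
from math import comb

# Assumptions: n independent draws, uniform over the 101 items 0..100,
# so the count K of item38 follows Binomial(n, p) with p = 1/101.
P_ITEM38 = 1 / 101

def binom_pmf(k: int, n: int, p: float) -> float:
    """P(K = k) for K ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def upper_tail_p_value(k_obs: int, n: int, p: float) -> float:
    """One-sided p-value: sum of P(K = k) over all k >= k_obs ("as extreme or more")."""
    return sum(binom_pmf(k, n, p) for k in range(k_obs, n + 1))

# Example: item38 seen 3 times in 20 draws.
print(upper_tail_p_value(3, 20, P_ITEM38))
# Sanity check: the pmf sums to 1 over k = 0..n.
print(sum(binom_pmf(k, 20, P_ITEM38) for k in range(21)))
```

For n = 1 and k_obs = 1 the tail is just 1/101, which matches the single-draw calculation in the question.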