To compare my method with others, I'm trying to compute its AUC, but I'm confused about how to do this in my case. My method first uses a model that classifies an image as class A or B. If the image is classified as A (positive), it is passed to a segmentation model that produces a mask showing where class A is located in the image. I need to compute the AUC for the pixel-level predictions.

So far, what I've done is this: if the image is discarded by the first model, I use its image-level prediction for all pixels in that image; otherwise, I use the second model's per-pixel predictions. But the resulting curve looks a bit weird, and I'm not sure this is the right approach. Is there a better way to do this? Any help is appreciated!
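To make the scheme above concrete, here is a minimal sketch of how the pooled pixel scores could be built and scored. The names `pixel_scores` and `roc_auc`, the 0.5 classifier cutoff, and the toy arrays are all made up for illustration and are not my actual pipeline. The AUC is computed with the rank-sum formulation in plain NumPy; `sklearn.metrics.roc_auc_score` would normally be used instead.

```python
import numpy as np

def pixel_scores(cls_prob, shape, seg_prob=None, cls_threshold=0.5):
    """Per-pixel scores for one image under the two-stage scheme.

    cls_prob: image-level probability of class A (hypothetical input).
    seg_prob: per-pixel probabilities from the segmentation model, or None.
    """
    if cls_prob < cls_threshold or seg_prob is None:
        # Image discarded by the classifier: every pixel inherits the image-level score.
        return np.full(shape, float(cls_prob))
    return np.asarray(seg_prob, dtype=float)

def roc_auc(y_true, y_score):
    """AUC via the rank-sum formulation; tied scores share an average rank."""
    y_true = np.asarray(y_true).ravel().astype(bool)
    y_score = np.asarray(y_score).ravel()
    _, inverse, counts = np.unique(y_score, return_inverse=True, return_counts=True)
    last_rank = np.cumsum(counts)                # 1-based rank of the last item in each tie group
    avg_rank = last_rank - (counts - 1) / 2.0    # average rank within each tie group
    ranks = avg_rank[inverse]
    n_pos = int(y_true.sum())
    n_neg = y_true.size - n_pos
    return (ranks[y_true].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)

# Toy data: image 0 is discarded (cls_prob 0.2), image 1 goes to segmentation.
gt0 = np.zeros((2, 2), dtype=int)
gt1 = np.array([[1, 1], [0, 0]])
s0 = pixel_scores(0.2, (2, 2))
s1 = pixel_scores(0.9, (2, 2), seg_prob=[[0.8, 0.7], [0.3, 0.1]])

# Pool all pixels from all images before computing a single AUC.
y_true = np.concatenate([gt0.ravel(), gt1.ravel()])
y_score = np.concatenate([s0.ravel(), s1.ravel()])
auc = roc_auc(y_true, y_score)
print(auc)
```

Note that every pixel of a discarded image ends up with the same score, so those pixels form one large tie group in the ranking.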

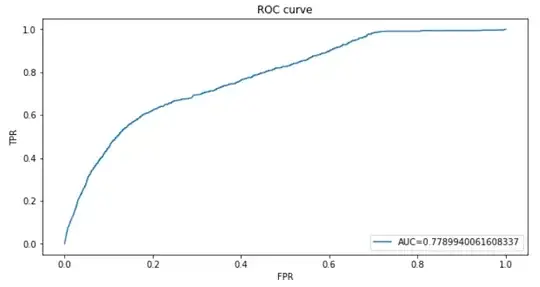

Here's how my ROC curve looks like:

It's weird that the confusion matrix I made shows a TPR of 0.91 and an FPR of 0.92, yet that point does not appear on the curve. I suspect the odd behavior arises because the AUC computation sweeps a single threshold over probabilities that come from two different models.
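One sanity check I can think of, sketched below with made-up toy scores and hypothetical helper names (`rates_at_threshold`, `roc_points`): if the confusion matrix is built by thresholding the same pooled scores that feed the ROC curve, its (FPR, TPR) pair has to be one of the points on the curve. If it is not, the confusion matrix was presumably built from different decisions, for example a separate cutoff per model.

```python
import numpy as np

def rates_at_threshold(y_true, y_score, threshold):
    """TPR and FPR when predicting positive for score >= threshold."""
    y_true = np.asarray(y_true).ravel().astype(bool)
    pred = np.asarray(y_score).ravel() >= threshold
    tpr = (pred & y_true).sum() / y_true.sum()
    fpr = (pred & ~y_true).sum() / (~y_true).sum()
    return float(tpr), float(fpr)

def roc_points(y_true, y_score):
    """(FPR, TPR) at every distinct score used as a threshold, starting from (0, 0)."""
    points = [(0.0, 0.0)]
    for t in np.unique(y_score)[::-1]:  # sweep thresholds from highest to lowest
        tpr, fpr = rates_at_threshold(y_true, y_score, t)
        points.append((fpr, tpr))
    return points

# Toy pooled pixels: four from a discarded image (score 0.2), four from a segmented one.
y_true = np.array([0, 0, 0, 0, 1, 1, 0, 0])
y_score = np.array([0.2, 0.2, 0.2, 0.2, 0.8, 0.7, 0.3, 0.1])

tpr, fpr = rates_at_threshold(y_true, y_score, 0.2)
curve = roc_points(y_true, y_score)

# The operating point from a single global threshold must coincide with a curve point.
on_curve = any(abs(fpr - f) < 1e-12 and abs(tpr - t) < 1e-12 for f, t in curve)
print(tpr, fpr, on_curve)
```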