I'm currently trying to understand the meaning of negative entropy. With the given equation: $$ H_{cont}(X) = -\int_{-\infty}^{+\infty} p(x) \cdot \ln(p(x)) \ dx $$

Here, The_Sympathizer explains the idea of differential entropy well, and I am beginning to understand when it can be negative (still in the learning process ;) ). For example, take a Gaussian-distributed variable X with $\mu=0$ (probably not important here, since the result below depends only on $\sigma$) and $\sigma=3$. Inserting the Gaussian PDF p(x) into the equation above and integrating simplifies it to: $$ H_{cont}(X) = \ln(\sigma \sqrt{2 \pi e}) $$ This gives an entropy of $H_{\sigma=3}(X) \approx 2.52\ \text{nats}$. Now look at a narrower distribution with $\sigma=0.03$. Its entropy is $H_{\sigma=0.03}(X) \approx -2.09\ \text{nats}$.

This distribution is “really sharp”, with little “variation” in it, so the second distribution provides less information than the first (the outcomes of the second distribution aren’t really surprising; there is less uncertainty; I gain little information by looking only at this one variable X). Is that right so far?

But what does -2.09 nats really mean? Can I draw any conclusion by comparing it with the entropy of another distribution with a smaller deviation? Can I drop columns with strongly negative differential entropy because they don’t provide much information? (I guess it's not that simple.)
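As a sanity check on the two numbers above, here is a minimal sketch that evaluates the closed form $\ln(\sigma \sqrt{2 \pi e})$ and compares it with a direct numerical integration of $-\int p(x) \ln(p(x)) \, dx$. The function names, the integration range of $\pm 12\sigma$, and the step count are illustrative assumptions, not part of the original question.

```python
import math

def gaussian_pdf(x, sigma, mu=0.0):
    """Density of a Gaussian with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def entropy_closed_form(sigma):
    """Differential entropy in nats: ln(sigma * sqrt(2*pi*e))."""
    return math.log(sigma * math.sqrt(2.0 * math.pi * math.e))

def entropy_numeric(sigma, mu=0.0, width=12.0, steps=20001):
    """Trapezoidal estimate of -integral p(x) ln p(x) dx.

    Assumption: truncating to [mu - width*sigma, mu + width*sigma]
    loses a negligible amount of probability mass.
    """
    lo = mu - width * sigma
    hi = mu + width * sigma
    dx = (hi - lo) / (steps - 1)
    total = 0.0
    for i in range(steps):
        p = gaussian_pdf(lo + i * dx, sigma, mu)
        term = -p * math.log(p) if p > 0.0 else 0.0
        weight = 0.5 if i in (0, steps - 1) else 1.0  # trapezoid end points
        total += weight * term * dx
    return total

h_wide = entropy_closed_form(3.0)
h_narrow = entropy_closed_form(0.03)
print(round(h_wide, 2), round(h_narrow, 2))   # 2.52 -2.09

# The numerical integral agrees with the closed form for both widths.
print(entropy_numeric(3.0), entropy_numeric(0.03))

# The gap between the two entropies depends only on the ratio of the sigmas.
print(h_wide - h_narrow, math.log(3.0 / 0.03))
```

Because the closed form is $\ln\sigma$ plus a constant, the difference between the two entropies is just $\ln(3/0.03) = \ln(100)$.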

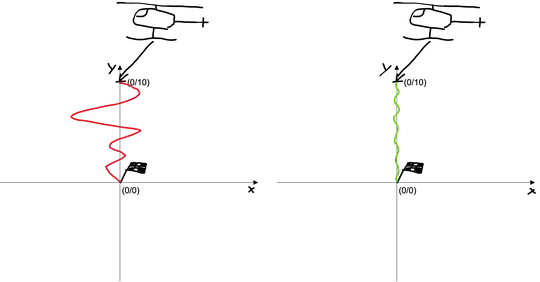

So, as a mechanical engineer, I need a more practical example (I have started to learn more about reinforcement learning). I’m not sure whether this example really applies to differential entropy, but I’ll try it: I have two pilots who are flying a helicopter. I’m not accounting for any environmental influences, and it’s only a 2D example. The target is to fly a straight line and land the helicopter at the coordinates (0,0). The starting point of the helicopter is (0,10), i.e. 10 meters above the surface.

Looking at the picture I drew: Pilot A on the left isn’t the best one in terms of flying skills. Pilot B is a good pilot and hugs the y-axis. Applying the idea of a “narrower or wider distribution” and comparing Pilots A and B: the flight profile of Pilot A has many different possible “states” (a wider density function), which leads to a larger differential entropy H(x), while for Pilot B the entropy is probably small or maybe negative, right?

So, comparing these two entropies, what can I tell? Can I only say that Pilot B can fly straight down a line without many adjustments and that Pilot A needs much more practice? And will the best pilot have an entropy of $-\infty$? Is it my decision to say at which entropy threshold a pilot is good enough for my task? Does it really make sense to look at only one variable in the continuous space? (Is it better to look at mutual information, cross-entropy, transfer entropy, etc.?)

There are probably much better measures for rating a pilot’s skills, but I want to understand how differential entropy is used. Maybe you guys have a better example? I hope my question is understandable...
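A minimal sketch of the pilot comparison, under loud assumptions: each pilot's horizontal deviation from the y-axis is modeled as zero-mean Gaussian noise, the spreads `SIGMA_A` and `SIGMA_B` (in meters) are made-up values, and the entropy is obtained by plugging the sample standard deviation into $\ln(\sigma \sqrt{2 \pi e})$. Real flight data need not be Gaussian, so this only illustrates the "wide versus narrow" reasoning.

```python
import math
import random
import statistics

def gaussian_entropy_nats(sigma):
    """Differential entropy in nats of a Gaussian with standard deviation sigma."""
    return math.log(sigma * math.sqrt(2.0 * math.pi * math.e))

random.seed(0)       # fixed seed so the run is repeatable

SIGMA_A = 2.0        # hypothetical spread of Pilot A's x-position (m)
SIGMA_B = 0.05       # hypothetical spread of Pilot B's x-position (m)
N = 1000             # hypothetical number of recorded positions per pilot

# Simulated horizontal deviations from the ideal line x = 0.
x_a = [random.gauss(0.0, SIGMA_A) for _ in range(N)]
x_b = [random.gauss(0.0, SIGMA_B) for _ in range(N)]

# Plug-in estimate: fit sigma from the data, then use the closed form.
h_a = gaussian_entropy_nats(statistics.stdev(x_a))
h_b = gaussian_entropy_nats(statistics.stdev(x_b))
print("Pilot A:", h_a, "nats")
print("Pilot B:", h_b, "nats")

# Under the Gaussian formula the entropy keeps falling as sigma shrinks,
# with no lower bound.
for sigma in (1.0, 0.1, 0.001, 1e-6):
    print(sigma, gaussian_entropy_nats(sigma))
```

Under this model the formula changes sign at $\sigma = 1/\sqrt{2 \pi e} \approx 0.24$, so with these made-up spreads Pilot A's estimate comes out positive and Pilot B's negative.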

Thank you!