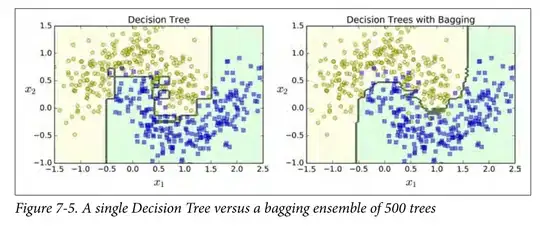

I was told that the decision boundaries of RandomForests can be non-orthogonal; see Figure 7-5 in Géron's book *Hands-On Machine Learning with Scikit-Learn & TensorFlow*, 1st edition, p. 187. This is not obvious to me. I think the decision boundaries must be orthogonal (axis-aligned) at small scales but can approximate non-orthogonal boundaries on a global scale. I was told that one could see this by simple averaging, but I cannot come up with an example, consisting of a few decision trees and a few data points in 2D, in which I can see a non-orthogonal decision boundary.

Could someone please show me how a RandomForest can have a non-orthogonal decision boundary using only a few (3-4) data points and a few (3-4) trees?
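For reference, here is a minimal sketch of the "simple averaging" idea as I understand it. It assumes four hand-built decision stumps (depth-1 trees) on the unit square rather than trees fit by scikit-learn to 3-4 data points, so the training step is left out entirely. `STUMPS`, `forest_predict`, and `ascii_map` are hypothetical names for illustration. Each stump's own boundary is a single vertical or horizontal line; the ensemble averages the 0/1 votes and thresholds at 0.5.

```python
# Sketch: averaging hypothetical, hand-built decision stumps (depth-1 trees)
# on the unit square. These are NOT trained by scikit-learn; thresholds are
# chosen by hand. Each stump splits on one feature, so its own boundary is a
# single vertical or horizontal line.
STUMPS = [
    lambda x, y: x < 1 / 3,  # votes 1 left of the vertical line x = 1/3
    lambda x, y: x < 2 / 3,  # votes 1 left of the vertical line x = 2/3
    lambda x, y: y > 1 / 3,  # votes 1 above the horizontal line y = 1/3
    lambda x, y: y > 2 / 3,  # votes 1 above the horizontal line y = 2/3
]


def forest_predict(x, y):
    """Average the 0/1 votes and threshold at 0.5 (ties go to class 0)."""
    mean_vote = sum(stump(x, y) for stump in STUMPS) / len(STUMPS)
    return int(mean_vote > 0.5)


def ascii_map(n=12):
    """Evaluate the ensemble at n x n cell centres; '#' = class 1, '.' = class 0."""
    rows = []
    for i in reversed(range(n)):  # print the top row (largest y) first
        y = (i + 0.5) / n
        rows.append("".join(
            "#" if forest_predict((j + 0.5) / n, y) else "."
            for j in range(n)
        ))
    return "\n".join(rows)


if __name__ == "__main__":
    print(ascii_map())
```

Running it prints:

```
########....
########....
########....
########....
####........
####........
####........
####........
............
............
............
............
```

The class-1 region is a three-step staircase hugging the diagonal y = x. Every piece of that boundary is still horizontal or vertical, which matches the small-scale intuition, but globally it tracks a diagonal line; more stumps with finer thresholds would shrink the steps. (scikit-learn's `RandomForestClassifier` averages predicted class probabilities rather than hard votes; for stumps with pure leaves those probabilities are 0 or 1, so the averaging here should coincide with that.)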