This is an excellent question, and it shows that you are thinking about important foundational matters in simple probability problems.

The convergence outcome follows from the condition of exchangeability. If the coin is tossed in a manner that is consistent from flip to flip, then one might reasonably assume that the resulting sequence of coin tosses is exchangeable, meaning that the probability of any particular sequence of outcomes does not depend on the order in which those outcomes occur. For example, exchangeability says that the outcome $H \cdot H \cdot T \cdot T \cdot H \cdot T$ has the same probability as the outcome $H \cdot H \cdot H \cdot T \cdot T \cdot T$, and exchangeability of the whole sequence means that this holds for strings of any length that are permutations of each other. Exchangeability is the operational assumption behind the idea of "repeated trials" of an experiment: nothing changes from trial to trial, so sets of outcomes that are permutations of one another should have the same probability.
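To make the condition concrete, here is one way to write it down. The notation is my own and not part of the question: $X_i \in \{H, T\}$ denotes the outcome of the $i$th toss and $\pi$ is any permutation of $\{1, \ldots, n\}$. Exchangeability requires that, for every $n$, every such $\pi$, and every string of outcomes $x_1, \ldots, x_n$:

$$\mathbb{P}(X_1 = x_1, \ldots, X_n = x_n) = \mathbb{P}(X_1 = x_{\pi(1)}, \ldots, X_n = x_{\pi(n)}).$$

The six-toss example above is the case $n = 6$ with a permutation that moves the third head forward.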

Now, if this assumption holds for a sequence of tosses that can be extended indefinitely, then the outcomes are IID (conditional on the underlying distribution) with a fixed probability of heads that applies to all the flips. (This is due to a famous mathematical result called de Finetti's representation theorem; see the related questions on this site.) The strong law of large numbers then gives you the convergence result: the sample proportion of heads converges to the (fixed) probability of heads with probability one.
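For readers who want the statement in symbols, here is a sketch of the binary case. The notation is assumed for illustration: $X_i$ is the outcome of the $i$th toss, $s_n$ is the number of heads in the first $n$ tosses, $\theta$ is the underlying probability of heads, and $F$ is some distribution on $[0, 1]$. For an infinite exchangeable sequence of tosses, de Finetti's theorem says that such an $F$ exists with

$$\mathbb{P}(X_1 = x_1, \ldots, X_n = x_n) = \int_0^1 \theta^{s_n} (1 - \theta)^{n - s_n} \, dF(\theta),$$

and the strong law of large numbers, applied conditionally on $\theta$, gives

$$\mathbb{P}\left( \lim_{n \to \infty} \frac{s_n}{n} = \theta \,\Big|\, \theta \right) = 1.$$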

What if exchangeability doesn't hold? Can there be a lack of convergence? Weaker assumptions can also give similar convergence results, but if exchangeability does not hold (i.e., if the probability of a sequence of coin-toss outcomes depends on their order), then it is possible to get a situation where there is no convergence.

As an example of the latter, suppose that you have a way of tossing a coin that can bias it to one side or the other ---e.g., you start with a certain face of the coin upwards and you flip it in a way that gives a small and consistent number of rotations before landing on a soft surface (where it doesn't bounce). Suppose that this method is sufficiently effective that you can bias the coin 70-30 in favour of one side. (For reasons why it is difficult to bias a coin-flip in practice, see e.g., Gelman and Nolan 2002 and Diaconis, Holmes and Montgomery 2007.) Now, suppose you were to execute a sequence of coin tosses in such a way that you start off biasing your tosses towards heads, but each time the sample proportion of heads exceeds 60% you change to bias towards tails, and each time the sample proportion of tails exceeds 60% you change to bias towards heads. If you were to execute this method then you would obtain a sample proportion that "oscillates" endlessly between about 40-60% heads without ever converging to a fixed value. In this instance you can see that the assumption of exchangeability does not hold, since the order of outcomes gives information on your present flipping-method (which therefore affects the probability of a subsequent outcome).

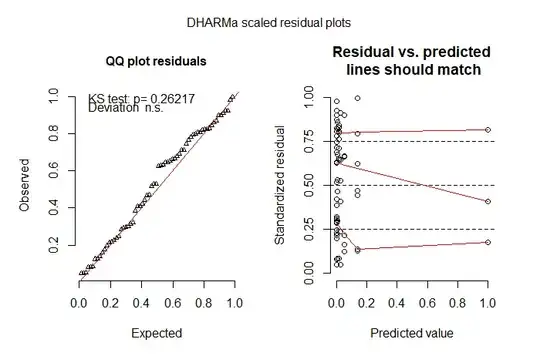

Illustrating non-convergence for the biased-flipping mechanism: We can simulate the above flipping mechanism using the R code below. Here we create a function `oscillating.flips` that implements the method for a given biasing probability, switching threshold, and starting side.

    oscillating.flips <- function(n, big.prob, switch.prob, start.head = TRUE) {

      #Set vector of flip outcomes and sample proportions
      FLIPS <- rep('', n)
      PROPS <- rep(NA, n)

      #Set starting values
      VALS <- c('H', 'T')
      HEAD <- start.head

      #Execute the coin flips
      for (k in 1:n) {

        #Set probability and perform the coin flip
        PROB <- c(big.prob, 1-big.prob)
        if (!HEAD) { PROB <- rev(PROB) }
        FLIPS[k] <- sample(VALS, size = 1, prob = PROB)

        #Update sample proportion and execute switch (if triggered)
        if (k == 1) {
          PROPS[k] <- 1*(FLIPS[k] == 'H')
        } else {
          PROPS[k] <- ((k-1)*PROPS[k-1] + (FLIPS[k] == 'H'))/k }
        if (PROPS[k] > switch.prob)   { HEAD <- FALSE }
        if (PROPS[k] < 1-switch.prob) { HEAD <- TRUE } }

      #Return the flips
      data.frame(flip = 1:n, outcome = FLIPS, head.props = PROPS) }

We run this function with the mechanism described above (70% weighting towards the biased side, a switching threshold of 60%, and starting biased towards heads) to get $n=10^6$ simulated coin-flips, with a running record of the sample proportion of heads. We plot these sample proportions against the number of flips, with the latter shown on a logarithmic scale. As you can see from the plot, the sample proportion does not converge to any fixed value. Instead it oscillates between the two switching thresholds (roughly 40% and 60% heads), as expected.

    #Generate coin-flips
    set.seed(187826487)
    FLIPS <- oscillating.flips(10^6, big.prob = 0.7, switch.prob = 0.6, start.head = TRUE)

    #Plot the resulting sample proportion of heads
    library(ggplot2)
    THEME <- theme(plot.title    = element_text(hjust = 0.5, size = 14, face = 'bold'),
                   plot.subtitle = element_text(hjust = 0.5, face = 'bold'))
    FIGURE <- ggplot(aes(x = flip, y = head.props), data = FLIPS) +
      geom_point() +
      geom_hline(yintercept = 0.5, linetype = 'dashed', colour = 'red') +
      scale_x_log10(breaks = scales::trans_breaks("log10", function(x) 10^x),
                    labels = scales::trans_format("log10", scales::math_format(10^.x))) +
      scale_y_continuous(limits = c(0, 1)) +
      THEME + ggtitle('Example of Biased Coin-Flipping Mechanism') +
      labs(subtitle = '(Proportion of heads does not converge!) \n') +
      xlab('Number of Flips') + ylab('Sample Proportion of Heads')
    FIGURE
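
A rough heuristic suggests why the swings never die out, and why a logarithmic axis is a natural choice for the plot. This is a back-of-the-envelope sketch with my own notation, not an exact result: $p_k$ is the sample proportion of heads after $k$ flips and $q \in \{0.7, 0.3\}$ is the probability of heads under the current bias. While the bias is held at $q$, the expected change in the sample proportion is

$$\mathbb{E}[p_{k+1} \mid p_k] - p_k = \frac{q - p_k}{k + 1}.$$

Ignoring the randomness and treating $k$ as continuous gives $dp/dk \approx (q - p)/k$, so $(q - p) \, k$ stays roughly constant within a regime. Under the heads bias ($q = 0.7$), moving from $p = 0.4$ at flip $k_0$ to $p = 0.6$ at flip $k_1$ therefore requires

$$(0.7 - 0.4) \, k_0 \approx (0.7 - 0.6) \, k_1 \quad \Longrightarrow \quad k_1 \approx 3 k_0,$$

and the same factor of three appears for the downward swing under the tails bias. Each half-swing thus takes about three times as many flips as the last, so the swings are roughly evenly spaced on a logarithmic axis (about $\log_{10} 3 \approx 0.48$ apart) and continue no matter how large $k$ becomes.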