I am performing cluster analysis and have a reference clustering as well as the results of two methods, A and B. I can calculate fitness metrics (such as adjusted mutual information) between the reference and either result.

Please note that clustering results are not to be confused with classification results: in classification, 1,1,1,2,2,3,3 would be a different result than 1,1,1,3,3,2,2, but in clustering those are identical results. Also, there is no constraint that the methods return the same number of clusters as each other or as the reference.
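To make the label-permutation point concrete, here is a minimal sketch using only the Python standard library. The helper name `as_partition` is made up for this illustration; it simply groups item indices by label so that the label names themselves no longer matter.

```python
def as_partition(labels):
    """Turn a label vector into a set of clusters (frozensets of item indices)."""
    clusters = {}
    for index, label in enumerate(labels):
        clusters.setdefault(label, set()).add(index)
    return {frozenset(members) for members in clusters.values()}


x = [1, 1, 1, 2, 2, 3, 3]
y = [1, 1, 1, 3, 3, 2, 2]

# Compared element-wise (as class labels) the two vectors differ ...
same_classification = x == y
# ... but compared as partitions (as clusterings) they are identical.
same_clustering = as_partition(x) == as_partition(y)

print(same_classification, same_clustering)
```

Any comparison between R, A and B therefore has to be invariant to relabeling, which a contingency table of co-occurrence counts is.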

I found that method A is better than method B, but I want to know whether it is statistically significantly better, using a significance test (G-test or chi-squared test). I have an idea, but am not sure whether it is valid:

I consider the reference and the results of the methods to be three variables (R, A, B), and build a three-way contingency table with cell frequencies $n_{r,a,b}$. I plan to perform a chi-squared test, for which I need an expected frequency for each cell:

$$ E_{r,a,b} = \underbrace{ \frac{ \sum_{b=1}^{N_B} n_{r,a,b} }{N} }_{\text{marginal probability } P(R,A)} \cdot \underbrace{ \frac{ \sum_{a=1}^{N_A} n_{r,a,b} }{N} }_{\text{marginal probability } P(R,B)} \cdot N, $$

where

$$ N = \sum_{r=1}^{N_R}\sum_{a=1}^{N_A}\sum_{b=1}^{N_B} n_{r,a,b}. $$

Then I calculate

$$ G=2 \cdot \sum_{r=1}^{N_R}\sum_{a=1}^{N_A}\sum_{b=1}^{N_B} n_{r,a,b} \cdot \ln \left( \frac{n_{r,a,b}}{E_{r,a,b}} \right), $$

and use it in a chi-squared test with $(N_R-1) \cdot (N_A-1) \cdot (N_B-1)$ degrees of freedom.
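For concreteness, the computation described above could be sketched as follows with NumPy and SciPy. This only mechanizes the formulas exactly as written in this question; it does not settle whether the test is valid. The function names `three_way_table` and `g_statistic`, the toy label vectors, and the convention that empty cells contribute zero to the sum are my own assumptions.

```python
import numpy as np
from scipy.stats import chi2


def three_way_table(r, a, b):
    """Count n[r, a, b] from three label vectors of equal length."""
    # return_inverse maps arbitrary labels to indices 0..K-1
    _, ri = np.unique(np.asarray(r).ravel(), return_inverse=True)
    _, ai = np.unique(np.asarray(a).ravel(), return_inverse=True)
    _, bi = np.unique(np.asarray(b).ravel(), return_inverse=True)
    n = np.zeros((ri.max() + 1, ai.max() + 1, bi.max() + 1))
    np.add.at(n, (ri, ai, bi), 1)  # accumulate one count per item
    return n


def g_statistic(n):
    """G and degrees of freedom, using the expected frequencies from the question."""
    N = n.sum()
    n_ra = n.sum(axis=2, keepdims=True)  # sum over b, shape (N_R, N_A, 1)
    n_rb = n.sum(axis=1, keepdims=True)  # sum over a, shape (N_R, 1, N_B)
    expected = (n_ra / N) * (n_rb / N) * N
    mask = n > 0  # assumption: cells with n = 0 contribute 0 to the sum
    g = 2.0 * np.sum(n[mask] * np.log(n[mask] / expected[mask]))
    dof = (n.shape[0] - 1) * (n.shape[1] - 1) * (n.shape[2] - 1)
    return g, dof


# Toy labels, made up purely for illustration.
r = [1, 1, 1, 2, 2, 3, 3]  # reference
a = [1, 1, 1, 3, 3, 2, 2]  # method A
b = [1, 1, 2, 2, 2, 1, 1]  # method B

n = three_way_table(r, a, b)
g, dof = g_statistic(n)
p_value = chi2.sf(g, dof)  # upper tail of the chi-squared distribution
print(g, dof, p_value)
```

Note that the degrees of freedom become zero as soon as any of the three variables has only one cluster, in which case the p-value is not meaningful.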

Is my approach correct?

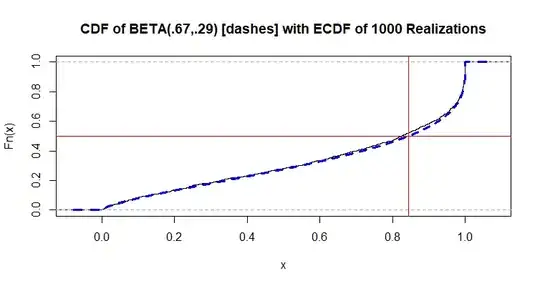

To visualize the basic problem, I drew a beautiful graphic, where the y-axis is the goodness of fit:

We can see that if we varied the situation slightly (e.g. by creating different versions of the dataset by adding Gaussian noise), we might get this image. We might then perform a hypothesis test with the null hypothesis that the goodness of methods A and B is identical. We would see that the mean goodness of method B is far away from the mean goodness of method A, taking the variance of the goodness of method A into account.

However, this is not without issue: if we want to know whether method A is significantly better, don't we have to take into account how good the methods are, in other words their absolute goodness? If we consider the difference to R (what I asked above), then we need to look at the two "lines" on the right. There we see that "A compared to R" is not that much better than "B compared to R". This is in contrast to the idea of the last paragraph, where we compared A to B directly (not considering the difference to R).

Btw, I think this is different from conditional independence, because there we divide by $\sum_{a=1}^{N_A}\sum_{b=1}^{N_B} n_{r,a,b}$, not by $N$, when calculating $E_{r,a,b}$. My approach is equivalent to calculating the expected frequencies as in the conditional independence test, then weighting them by the marginal probability of R (which gives expected probabilities) and multiplying by N to get the expected frequencies.